Other Parts Discussed in Thread: AM5718

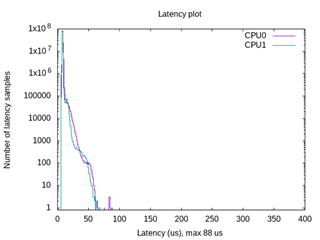

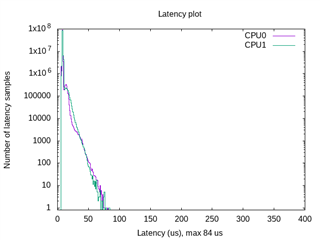

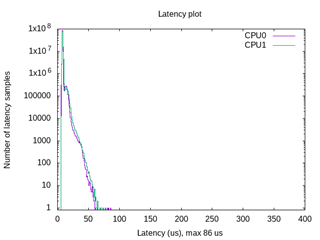

I see there is a new release of the RT-Linux SDK for AM64x - 08.00.00.21. In the former one (07.xx.xx.xx) I reported that I see quite bad latency results (cyclictest). I see that the new one has some changes in the kernel area (e.g. version is up changed from 5.4-rt to 5.10-rt).

Does TI run some kind of real-time verification/validation on the new versions of the SDK? I see some notes about LTP-DDT in the SDK documentation, but no results or any other references.

What is the goal for the Cortex-A53 cluster as real-time behavior? I understand that the Cortex-R5 cluster is quite a better match to real-time application demands, but in many cases, there are legacy applications that need to use the RT properties of the "Linux-RT" - for example, soft-PLC runtimes, NC/CNC kernels, and so on.

I will surely run the benchmarks again on the SK, but it's better to know if these kinds of applications are supported, or targeted, by TI for the AM64x series. If not then there is no warranty that in the next release there will be no regression that would now allow using this series.