Hi folks,

We have successfully configured access from the DSP of an AM5718 to a 2 MB NVRAM connected through the GPMC. We load the DSP binary from Linux with remoteproc, and the DSP code can read and print the values at given NVRAM locations by assigning the NVRAM's start address to a uint16_t pointer (no variables or arrays are placed in the NVRAM). As far as we understand, this means the resource table entry for the NVRAM (as devmem) is configured correctly. This is the last entry of /sys/kernel/debug/remoteproc/remoteproc2/resource_table:

```
Entry 20 is of type devmem
  Device Address 0x15000000
  Physical Address 0x1000000
  Length 0x200000 Bytes
  Flags 0x0
  Reserved (should be zero) [0]
  Name DSP_NVRAM
```

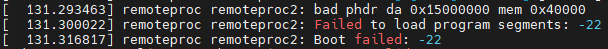
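For readers less familiar with the firmware side, here is a minimal C sketch of what the entry above corresponds to, together with the pointer-based access that already works. It assumes each devmem resource in the table is laid out as a type word followed by the fields of Linux's `struct fw_rsc_devmem` (da, pa, len, flags, reserved, name[32]); the names `devmem_entry`, `dsp_nvram` and `nvram_read16` are hypothetical and not taken from TI's IPC headers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define RSC_DEVMEM 1u  /* devmem resource type in the Linux remoteproc enum */

/* Hypothetical mirror of a devmem entry in the firmware's resource table:
 * a type word followed by the fields of Linux's struct fw_rsc_devmem. */
struct devmem_entry {
    uint32_t type;      /* RSC_DEVMEM */
    uint32_t da;        /* device address used by the DSP */
    uint32_t pa;        /* physical (L3_MAIN) address */
    uint32_t len;       /* length of the mapping in bytes */
    uint32_t flags;     /* IOMMU protection flags */
    uint32_t reserved;  /* must be zero */
    char     name[32];  /* name reported in debugfs */
};

/* The entry reported by debugfs, written as an initializer.
 * flags and reserved are left out, so they default to 0. */
static const struct devmem_entry dsp_nvram = {
    .type = RSC_DEVMEM,
    .da   = 0x15000000u,
    .pa   = 0x01000000u,
    .len  = 0x00200000u,
    .name = "DSP_NVRAM",
};

/* Read the 16-bit word at word_index. base must point at the entry's
 * device address; volatile forces a real bus access on every read. */
static uint16_t nvram_read16(const volatile uint16_t *base, size_t word_index)
{
    assert(word_index < dsp_nvram.len / sizeof(uint16_t));
    return base[word_index];
}
```

On the DSP itself, the pointer would be obtained as `(const volatile uint16_t *)(uintptr_t)dsp_nvram.da`; `volatile` keeps the compiler from caching or eliminating NVRAM reads.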

However, when we try to place a variable/array in the NVRAM section, remoteproc fails with a "bad phdr da 0x15000000 mem 0x40000" trace. Address 0x15000000 is the base of the L3_MAIN map as seen by the DSP (0x14000000, see Table 2-10, DSP Memory Map, in the TRM) plus the 0x01000000 offset of the NVRAM within the GPMC (the GPMC starts at address 0x0 of L3_MAIN, as stated in Table 2-1, L3_MAIN Memory Map).

Looking into the DTS, we see that only l1pram, l1dram and l2ram are defined for the DSP, so we added an entry for the NVRAM:

```dts
&dsp1 {
    reg = <0x40800000 0x48000>,
          <0x40e00000 0x8000>,
          <0x40f00000 0x8000>,
          <0x15000000 0x200000>;
    reg-names = "l2ram", "l1pram", "l1dram", "nvram";
};
```

This resulted in the same error. Then, digging into the omap_remoteproc driver (kernel 4.19.94), we realized that the omap_rproc_of_get_internal_memories() function performs an address "translation" from L3_MAIN addresses to DSP addresses:

- DSP1_L2_SRAM [0x4080 0000] in L3_MAIN memory map --> DSP_L2 [0x0080 0000] in DSP memory map.

- DSP1_L1P_SRAM [0x40E0 0000] in L3_MAIN memory map --> DSP_L1P [0x00E0 0000] in DSP memory map.

- DSP1_L1D_SRAM [0x40F0 0000] in L3_MAIN memory map --> DSP_L1D [0x00F0 0000] in DSP memory map.
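A simplified C model of the net effect of this translation follows. It covers only the three regions listed above, with sizes taken from the `reg` property of the `&dsp1` node; it is not the driver's actual code, and the names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One DSP internal memory: reg-names entry, L3_MAIN address, size. */
struct internal_mem {
    const char *name;
    uint32_t    l3_addr;
    uint32_t    size;
};

static const struct internal_mem dsp_mems[] = {
    { "l2ram",  0x40800000u, 0x48000u },
    { "l1pram", 0x40E00000u, 0x8000u  },
    { "l1dram", 0x40F00000u, 0x8000u  },
};

/* Net effect of the driver's translation for these regions:
 * drop the 0x40000000 offset to get the DSP-local address. */
#define L3_TO_DSP_LOCAL_OFFSET 0x40000000u

/* Returns 0 and fills *dsp_addr if l3_addr lies in a known internal
 * memory, -1 otherwise (e.g. 0x15000000, which is none of them). */
static int internal_l3_to_dsp(uint32_t l3_addr, uint32_t *dsp_addr)
{
    assert(dsp_addr != NULL);
    for (size_t i = 0; i < sizeof dsp_mems / sizeof dsp_mems[0]; i++) {
        const struct internal_mem *m = &dsp_mems[i];
        if (l3_addr >= m->l3_addr && l3_addr - m->l3_addr < m->size) {
            *dsp_addr = l3_addr - L3_TO_DSP_LOCAL_OFFSET;
            return 0;
        }
    }
    return -1;
}
```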

Although the implementation is a somewhat more involved set of operations (presumably to support several OMAP variants), the net effect is essentially subtracting a 0x40000000 offset to translate an L3_MAIN address into a DSP address. However, the same operations cannot take us from 0x15000000 (the NVRAM address seen by the DSP) to 0x01000000 (the actual address of the NVRAM within the GPMC). We would need to modify the remoteproc driver to support our NVRAM, which makes us wonder whether that is really the proper approach or whether a more suitable one exists.
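For context, the "bad phdr" message comes from the remoteproc ELF loader, which needs a host-side pointer for every loadable segment so that it can copy the segment's contents. The sketch below models that lookup as it works in upstream Linux 4.19 `rproc_da_to_va()`: first the platform driver's `da_to_va` callback (internal memories), then the carveout list. Devmem entries only create IOMMU mappings and are not consulted, which would explain why a 0x40000-byte segment at 0x15000000 is rejected. This is a simplified model with hypothetical types; TI's vendor kernel may differ in details.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical region the loader can write through. */
struct region {
    uint32_t da;    /* device address of the region */
    uint32_t len;   /* size in bytes */
    uint8_t *va;    /* host virtual address */
};

/* Return a host pointer for [da, da + len) if it fits entirely in one region. */
static void *lookup(const struct region *r, size_t n, uint32_t da, uint32_t len)
{
    assert(n == 0 || r != NULL);
    for (size_t i = 0; i < n; i++) {
        if (da >= r[i].da && len <= r[i].len && da - r[i].da <= r[i].len - len)
            return r[i].va + (da - r[i].da);
    }
    return NULL;
}

/* Same order as rproc_da_to_va(): driver-provided internal memories first,
 * then carveouts. Devmem mappings are never searched. */
static void *model_da_to_va(const struct region *internal, size_t n_internal,
                            const struct region *carveouts, size_t n_carveouts,
                            uint32_t da, uint32_t len)
{
    void *p = lookup(internal, n_internal, da, len);
    if (p == NULL)
        p = lookup(carveouts, n_carveouts, da, len);
    return p; /* NULL corresponds to the loader's "bad phdr da ... mem ..." error */
}
```

In this model, 0x15000000 only resolves if some region covering it is registered in one of the two lists.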

We would be very grateful if anyone could tell us what we should modify to get the NVRAM fully working and be able to place variables/arrays in the NVRAM section. Only the DTS? The OMAP remoteproc driver too? Anything else? How? Or should we give up on placing any kind of variable in a devmem region and just access it through pointers?

Is it right to declare the NVRAM as devmem, or should we change it to a carveout? Application Report SPRAC60 (AM57x Processor SDK Linux®: Customizing Multicore Applications to Run on New Platforms) mentions that CMA (used for carveouts) is intended for DSP and IPU application code as well as IPC buffers, but beyond that we see no clear difference from CMEM, and we are not sure when to use CMA and when to use CMEM. DDR goes to CMA as a carveout, as does OCMC_1, and so on. Can anyone shed some light on this?

Thank you very much in advance for your support.