Hi,

I'm exploring how to port the YOLOv5 object detection network to the TDA4.

My starting point is the edgeai-yolov5 repository (https://github.com/TexasInstruments/edgeai-yolov5). I trained this network on a pedestrian dataset.

My first question: is there a preferred runtime among ONNX, TFLite and TVM for deploying the model on the TDA4?

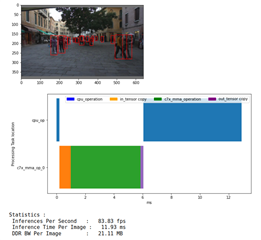

Nevertheless, I tried the onnx runtime and then moved it to https://dev.ti.com/edgeai/ . I then modified the Custom model onnx runtime jupiter notebook in order to try it. I get the following results:

Detections are OK, but the results show that half of the inference time was spent on the CPU. I guess some layers are not supported by the MMA? If so, is there a way to determine which ones, so that I can replace them with supported layers where possible? Would the outcome be different if I used the TFLite runtime?
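
To make the question concrete, here is a minimal sketch of how I imagine narrowing this down on my side: list the operator types in the exported ONNX file and compare them against a list of supported operators. It assumes the `onnx` Python package is installed. The `SUPPORTED_OPS_EXAMPLE` set is a hypothetical placeholder, not TI's actual list, and the helper names are mine. It only tells me which operator types appear in the model, not where the runtime actually executes them.

```python
from collections import Counter

# Hypothetical placeholder: NOT the real list of MMA-supported operators.
# It would have to be filled in from TI's documentation.
SUPPORTED_OPS_EXAMPLE = {"Conv", "Relu", "MaxPool", "Concat", "Add"}


def count_op_types(op_types):
    """Return a Counter mapping each operator type to how often it occurs."""
    return Counter(op_types)


def split_by_support(op_counts, supported_ops):
    """Split operator counts into (supported, unsupported) dictionaries."""
    supported = {op: n for op, n in op_counts.items() if op in supported_ops}
    unsupported = {op: n for op, n in op_counts.items() if op not in supported_ops}
    return supported, unsupported


def op_types_from_onnx(model_path):
    """Read the operator type of every top-level node in an ONNX file.

    Requires the `onnx` package; it is imported here so that the two
    helpers above can be used without it. Nodes nested inside subgraphs
    (e.g. in If/Loop bodies) are not visited.
    """
    import onnx

    model = onnx.load(model_path)
    return [node.op_type for node in model.graph.node]
```

With my exported model, I would call `op_types_from_onnx(...)` on the `.onnx` file, pass the result through `count_op_types`, and then use `split_by_support` with the real supported-operator list to see which operator types are candidates for replacement.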

Thanks in advance,

Cheers,

Jerome