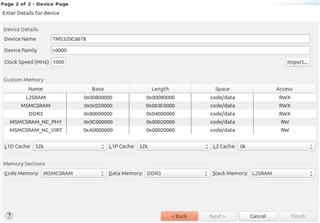

This question concerns a performance difference I see when I change the CPU clock speed in the platform package (see the image above) from 1000 MHz to 1400 MHz.

I have a larger program that contains a parallel routine running on 8 cores. With the clock speed set to 1000 MHz, the parallel routine takes 10,000 clock cycles, measured with `_itoll(TSCH, TSCL)`. With the clock speed set to 1400 MHz, the same routine takes 30,000 clock cycles.

- Is such a performance difference expected?

- Why does this happen?

- Is it possible to bring the routine back down to 10,000 clock cycles while running the CPU at 1400 MHz?

Thank you very much for your help and comments.