Hi Everyone,

While debugging our custom NN import to TIDL, we experienced faulty TIDL execution for asymmetric resize layers. We ran the model with our own ONNX PC execution, dumped the tensor values as float, and compared them with the TIDL PC emulation in float mode for the same input. We calculated the differences from the reference tensor values and found that asymmetric resize layers produce wrong outputs in TIDL.
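For anyone who wants to repeat the comparison, here is a minimal sketch of the per-layer check, assuming both tensors are already loaded as NumPy float arrays of the same shape. The function name `compare_tensors` and the tolerance `atol` are our own illustrative choices, not part of TIDL or ONNX.

```python
import numpy as np

def compare_tensors(reference, candidate, atol=1e-5):
    """Return basic error statistics between two float tensors of equal shape.

    `reference` stands for the ONNX output and `candidate` for the TIDL PC
    emulation output (assumed naming, for illustration only).
    """
    reference = np.asarray(reference, dtype=np.float32)
    candidate = np.asarray(candidate, dtype=np.float32)
    if reference.shape != candidate.shape:
        raise ValueError(
            f"shape mismatch: {reference.shape} vs {candidate.shape}"
        )
    abs_diff = np.abs(candidate - reference)
    return {
        "max_abs_diff": float(abs_diff.max()),
        "mean_abs_diff": float(abs_diff.mean()),
        # Number of elements whose error exceeds the tolerance.
        "num_mismatched": int((abs_diff > atol).sum()),
    }
```

A layer whose `num_mismatched` is far above that of its neighbours is a good candidate for closer inspection.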

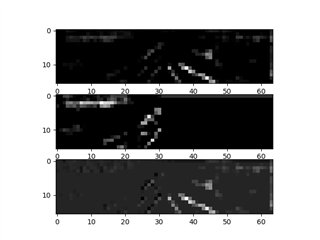

In the PC float emulation, the asymmetric resize is executed in a symmetric way, and the asymmetric component is replaced with zero padding. The picture shows the output of the first asymmetric resize layer in our network (asymmetry is 2-1 in width). The top tensor is the correct value taken from our ONNX run, the middle tensor is the TIDL execution output, and the bottom tensor is the difference plotted as an image. We think this is a bug in the asymmetric resize implementation in TIDL.
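To make the expected behaviour concrete, here is a rough sketch of a reference resize with independent scale factors per axis, plus a helper that flags columns that are all zero in one tensor but not in the other. It assumes nearest-neighbour interpolation with integer factors on a 2D array, which may not match the resize mode in our network. The names `resize_nearest` and `zero_padded_columns` are hypothetical, and the scale factors in the usage lines are chosen only for illustration.

```python
import numpy as np

def resize_nearest(x, scale_h, scale_w):
    """Nearest-neighbour upsampling of an (H, W) array.

    Height and width use independent integer factors, so the resize is
    asymmetric whenever scale_h != scale_w.
    """
    return np.repeat(np.repeat(x, scale_h, axis=0), scale_w, axis=1)

def zero_padded_columns(reference, candidate):
    """Indices of columns that are all zero in `candidate` but not in `reference`."""
    candidate_all_zero = ~candidate.any(axis=0)
    reference_has_data = reference.any(axis=0)
    return np.flatnonzero(candidate_all_zero & reference_has_data)

# Illustrative usage: widen by 2, keep the height unchanged.
x = np.array([[1.0, 2.0], [3.0, 4.0]], dtype=np.float32)
expected = resize_nearest(x, scale_h=1, scale_w=2)

# Hypothetical faulty output where the extra width is left as zeros.
faulty = expected.copy()
faulty[:, 2:] = 0.0
padded = zero_padded_columns(expected, faulty)
```

With real dumps, `expected` would be the ONNX tensor and `faulty` the TIDL tensor for the same layer, each reduced to a single 2D channel.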

Thanks,

Marton

Importer environment:

Ubuntu 18.04 LTS

SDK: 08_00_00_12