Hi,

Problem: FPS of ResNet50 on TIDL is too low

Environment used:

edgeai-tidl-tools and torchvision-ti

The two repositories above were installed in separate Docker environments.

Reproduction steps:

1) We used the torchvision-ti environment to export the model to ONNX format. We obtained the ResNet50 model using the following line of code:

This code was then used to export the model to ONNX format.

2) We used the code from edgeai-tidl-tools/examples/jupyter_notebooks/custom-model-onnx.ipynb to convert the model from ONNX to TIDL format.

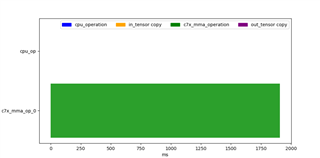

The FPS statistics reported by that notebook show that ResNet50 runs at only about 0.5 fps.
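
For reference, a frame rate converts to per-frame latency as 1000 / fps milliseconds. The small Python sketch below is plain arithmetic (the helper names are my own, not part of edgeai-tidl-tools) and shows that 0.5 fps corresponds to roughly 2 seconds per inference:

```python
def fps_to_latency_ms(fps: float) -> float:
    """Convert frames per second to milliseconds per frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def latency_ms_to_fps(latency_ms: float) -> float:
    """Convert milliseconds per frame to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# 0.5 fps, as reported by the notebook, means about 2000 ms (2 s) per inference.
print(fps_to_latency_ms(0.5))  # 2000.0
```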

I would expect this to be considerably higher. Could you let me know whether I am missing any steps, or whether there are specific steps or versions I need to use?

Thank you

Niranjan