I have been using this part of the TIDL user guide as a reference, even though our setup uses the TFLite delegate import scripts.

Although standalone reshape layers are not supported in TIDL, reshape/flatten layers do appear to be supported when they occur before certain other layers, in which case the two get merged together.

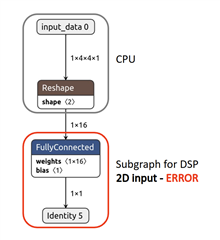

For example, this sample flatten + FC TFLite model imports without errors:

and I can see that reshape is listed as a supported TIDL layer type:

    Supported TIDL layer type --- 39 Tflite layer type --- 22 layer output name--- TEST/dense/Tensordot/Reshape;TEST/reshape/Reshape
    Supported TIDL layer type --- 6 Tflite layer type --- 9 layer output name--- Identity
    Number of subgraphs:1 , 2 nodes delegated out of 2 nodes
    In TIDL_tfliteRtImportInit subgraph_id=5
    Layer 0, subgraph id 5, name=Identity
    Layer 1, subgraph id 5, name=input_data
    In TIDL_tfliteRtImportNode TIDL Layer type 39 Tflite builtin code type 22
    In TIDL_tfliteRtImportNode TIDL Layer type 6 Tflite builtin code type 9
    In TIDL_runtimesOptimizeNet: LayerIndex = 4, dataIndex = 3
    ************** Frame index 1 : Running float import *************
    ...
    ****************************************************
    ** ALL MODEL CHECK PASSED **
    ****************************************************

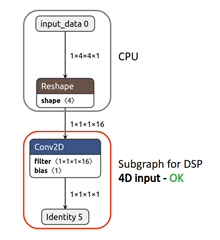

But this sample reshape + Conv2D TFLite model fails to import with the error shown at the end of the log below (despite reshape still being listed as a supported layer):

    Supported TIDL layer type --- 39 Tflite layer type --- 22 layer output name--- TEST/reshape/Reshape
    Supported TIDL layer type --- 1 Tflite layer type --- 3 layer output name--- Identity
    Number of subgraphs:1 , 2 nodes delegated out of 2 nodes
    In TIDL_tfliteRtImportInit subgraph_id=5
    Layer 0, subgraph id 5, name=Identity
    Layer 1, subgraph id 5, name=input_data
    In TIDL_tfliteRtImportNode TIDL Layer type 39 Tflite builtin code type 22
    In TIDL_tfliteRtImportNode TIDL Layer type 1 Tflite builtin code type 3
    In TIDL_runtimesOptimizeNet: LayerIndex = 4, dataIndex = 3
    convParams.numInChannels Is not multiple of convParams.numGroups - Exiting
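To compare the two imports side by side, the "Supported TIDL layer type" entries can be pulled out of the logs with a few lines of Python. This is only a rough sketch that assumes the entry format shown in the logs above; the function name `parse_supported_layers` is my own and not part of any TIDL tooling.

```python
import re

# Matches one "Supported TIDL layer type" entry, in the format seen in the logs above.
# \s+ is used between fields so it works whether the log is on one line or many.
ENTRY = re.compile(
    r"Supported TIDL layer type --- (\d+)\s+"
    r"Tflite layer type --- (\d+)\s+"
    r"layer output name--- (\S+)"
)


def parse_supported_layers(log_text):
    """Return a list of (tidl_type, tflite_type, output_name) tuples."""
    return [(int(tidl), int(tfl), name) for tidl, tfl, name in ENTRY.findall(log_text)]


# Excerpt copied from the reshape + Conv2D import log.
log = (
    "Supported TIDL layer type --- 39 Tflite layer type --- 22 "
    "layer output name--- TEST/reshape/Reshape "
    "Supported TIDL layer type --- 1 Tflite layer type --- 3 "
    "layer output name--- Identity "
    "Number of subgraphs:1 , 2 nodes delegated out of 2 nodes"
)

for tidl_type, tflite_type, name in parse_supported_layers(log):
    print(tidl_type, tflite_type, name)
```

In both logs the reshape shows up as TIDL layer type 39 with TFLite builtin code 22, so the listing alone does not tell a mergeable reshape from a non-mergeable one.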

Based on this, I have several questions:

- Why is this error happening? Is TI able to reproduce it?

- What is the full list of layers that support reshape merge and flatten merge? Are the two lists different? Is Conv2D on either of them?

- Does any of this behaviour differ between the native TIDL-RT model import tool and the TFLite delegate import?

- Is there a way we can distinguish between reshape and flatten layers using the deny_list feature of TFLite delegates, given that they use the same TFLite operator?
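To make the last question concrete: since both cases appear as the same TFLite operator (builtin code 22 in the logs above), the only way I can see to tell them apart is by tensor shape. Below is a minimal sketch of that idea, assuming "flatten" means a reshape that keeps the batch dimension and collapses everything else into one dimension. The helper name `is_flatten` and the example shapes are hypothetical and do not come from TIDL or from the attached models.

```python
from math import prod


def is_flatten(input_shape, output_shape):
    """Return True if the reshape keeps dim 0 and collapses the rest into one dim.

    This is an assumed definition of "flatten"; TIDL may use a different rule.
    """
    if len(input_shape) < 2 or len(output_shape) != 2:
        return False
    same_batch = output_shape[0] == input_shape[0]
    collapsed = output_shape[1] == prod(input_shape[1:])
    return same_batch and collapsed


# Hypothetical shapes for illustration only.
print(is_flatten((1, 4, 4, 8), (1, 128)))   # flatten-style reshape
print(is_flatten((1, 128), (1, 4, 4, 8)))   # general reshape feeding a conv
```

A check like this could be run offline on a model to decide which reshape nodes to exclude, but it would only help if the deny list can target individual nodes rather than a whole operator type, which is what the question above is asking.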

Sample models are attached:

sample_models.zip