Dear TI Team

We are testing a custom segmentation model on the TDA4VM board.

To check layer compatibility and accuracy, we first ran inference in PC emulation mode before running on the target. In PC emulation mode, inference completes and the results are generated successfully, but in target mode we are not able to generate the required output.

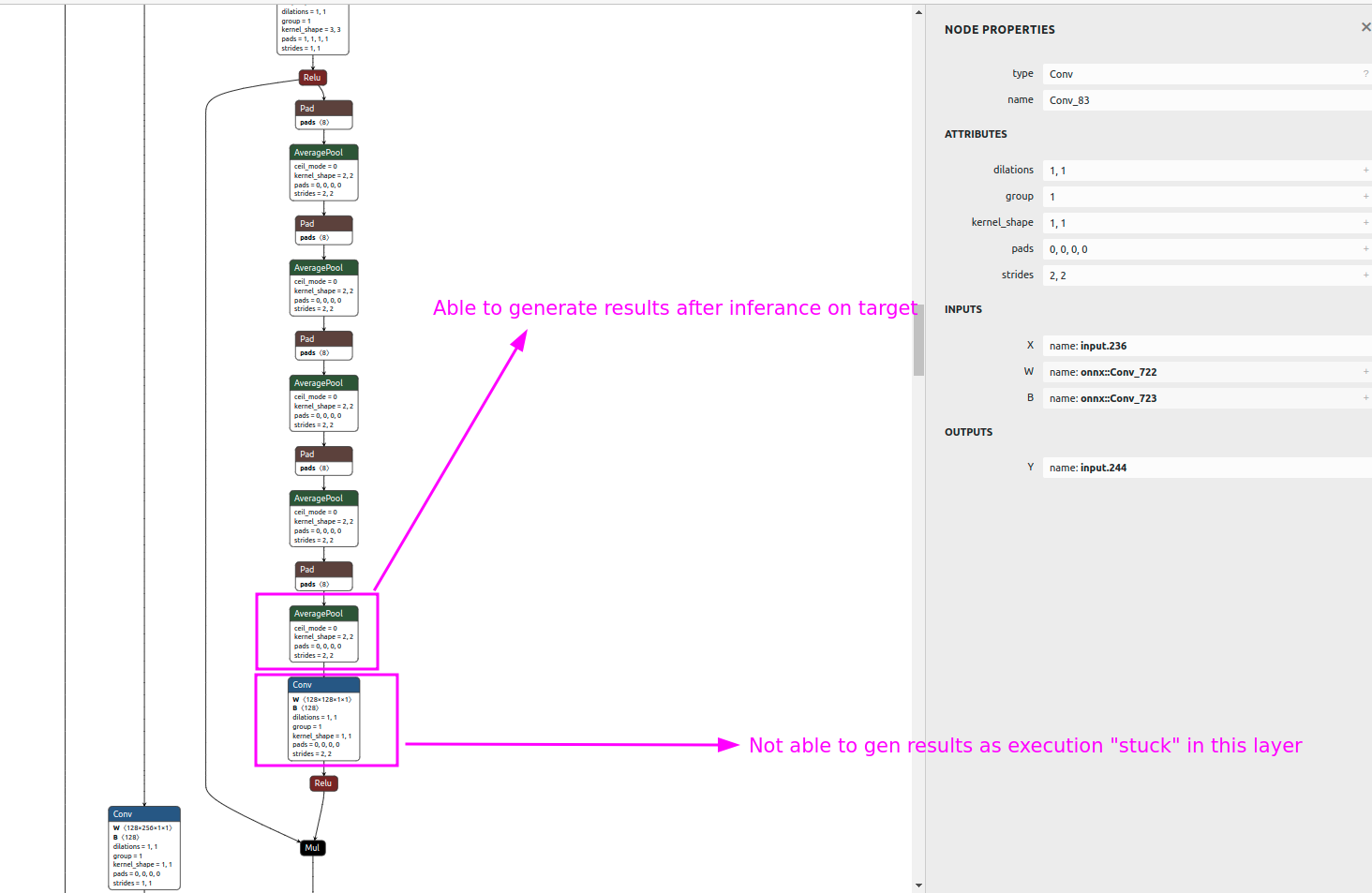

While debugging, we found that output is generated up to the layer "AveragePool_82" on the target; after that, execution is stuck in the Conv_83 layer (see Fig. 1 for layer details). This blocks generation of the final layer output.

I have attached the model layer information showing where target inference is stuck, along with the import and inference configurations used. Kindly help us resolve the issue.

Fig. 1: Layer at which processing is stuck

Import configuration -

modelType = 2

numParamBits = 16

numFeatureBits = 16

quantizationStyle = 3

inputNetFile = "XX.onnx"

outputNetFile = "XX.bin"

outputParamsFile = "XX_"

inWidth = 1024

inHeight = 1024

inNumChannels = 3

inFileFormat = 2

inDataFormat = 1

inElementType = 1

inDataNorm = 1

inMean = 123.675 116.28 103.53

inScale = 0.017124754 0.017507003 0.017429194

inData = "input.txt"

postProcType = 3

writeOutput = 2

debugTraceLevel = 1

writeTraceLevel = 3
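The `inMean` and `inScale` values in the import configuration match the standard ImageNet per-channel statistics rescaled to 0..255 pixel values. The sketch below reproduces them, assuming the importer applies normalization as `(pixel - inMean) * inScale`; the helper name `tidl_norm_params` is hypothetical and is not part of the TIDL tools.

```python
# ImageNet per-channel statistics on a 0..1 scale
# (assumed origin of the values in the import configuration).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def tidl_norm_params(mean, std, max_value=255.0):
    """Convert 0..1 mean/std into a 0..255 mean and a multiplicative scale.

    Hypothetical helper: assumes normalization is (pixel - mean) * scale.
    """
    in_mean = [m * max_value for m in mean]
    in_scale = [1.0 / (s * max_value) for s in std]
    return in_mean, in_scale


in_mean, in_scale = tidl_norm_params(IMAGENET_MEAN, IMAGENET_STD)
print("inMean  =", " ".join(f"{m:.3f}" for m in in_mean))
print("inScale =", " ".join(f"{s:.9f}" for s in in_scale))
```

If the printed values agree with the configuration, the preprocessing is likely consistent with how the model was trained, which would point away from input normalization as the cause of the hang.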

Inference configuration -

inFileFormat = 2

postProcType = 3

numFrames = 1

netBinFile = XX.bin

ioConfigFile = XX.bin

inData = tinput.txt

outData = yy.bin
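When PC emulation and target runs behave differently, one quick check is whether keys shared by the import and inference configurations above carry the same values. The following is a minimal sketch, assuming both files use plain `key = value` lines; `parse_config` and `shared_key_mismatches` are hypothetical helpers, and only a subset of the posted keys is included for brevity.

```python
def parse_config(text):
    """Parse 'key = value' lines into a dict, skipping blank or malformed lines."""
    cfg = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Drop surrounding whitespace and optional double quotes.
        cfg[key.strip()] = value.strip().strip('"')
    return cfg


def shared_key_mismatches(a, b):
    """Return {key: (value_in_a, value_in_b)} for keys present in both but unequal."""
    return {k: (a[k], b[k]) for k in a.keys() & b.keys() if a[k] != b[k]}


import_cfg = parse_config("""
inFileFormat = 2
postProcType = 3
inData = "input.txt"
outputNetFile = "XX.bin"
""")

infer_cfg = parse_config("""
inFileFormat = 2
postProcType = 3
inData = tinput.txt
netBinFile = XX.bin
ioConfigFile = XX.bin
""")

print(shared_key_mismatches(import_cfg, infer_cfg))
```

For the values posted here, the only shared key that differs is `inData` (`input.txt` versus `tinput.txt`). A difference of this kind is not necessarily an error; it only marks a setting worth confirming.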

Versions used:

SDK: ti-processor-sdk-rtos-j721e-evm-07_01_00_11

TVM version: REL.TIDL.J7.01.03.00.11

OS: Ubuntu 18.04

Thanks and regards

VasanthKumar.V.M

Hi Vasanth,

Is there any specific reason you are using such an old version of the SDK? Our recommendation would be to use the latest SDK and see if the issue is still present.

Regards,

Anshu

Dear Anshu

Thanks for the quick response.

This specific SDK was chosen based on the project requirement.

Kindly assist us in resolving the issue.

Thanks and regards

VasanthKumar.V.M

Hi Vasanth,

The SDK you are using is more than a year old, and many bugs have been fixed since then. Hence it is highly recommended to use the latest SDK. Do you see any challenges in moving to the latest SDK?

Regards,

Anshu

Dear Anshu,

Yes, it is a customer project requirement.

Thanks and Regards

VasanthKumar.V.M