Hi TI Experts

To implement our application, we came up with the following approach: use the DSS to blend the images, then output the result via CSI-TX.

We have run into some problems and would appreciate your help.

Application:

We are using the TDA4VM to develop an Around View Monitor (AVM) system.

A UI is overlaid on the AVM image. The UI is developed with Qt.

The UI image and the AVM image need to be blended, and the blended image is output via CSI-TX in YUV422 format.
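For reference, the per-pixel result we expect can be modeled in software as below. This is a minimal sketch under our own assumptions (UI plane in ARGB8888 with straight alpha, AVM plane in RGB888, BT.601 limited-range conversion, UYVY byte order); it makes no claim about how the DSS overlay manager or writeback path actually computes blending, and all type and function names are hypothetical.

```c
#include <stdint.h>

/* Hypothetical layouts: UI pixel = ARGB8888 with straight (non-premultiplied)
 * alpha, AVM pixel = RGB888. Software reference model only. */
typedef struct { uint8_t r, g, b; } rgb_t;

/* Source-over blend of one channel: (ui*a + avm*(255-a)) / 255, rounded. */
static uint8_t blend_channel(uint8_t ui, uint8_t avm, uint8_t a)
{
    return (uint8_t)((ui * a + avm * (255 - a) + 127) / 255);
}

/* Blend one ARGB8888 UI pixel over one AVM pixel. */
static rgb_t blend_pixel(uint32_t ui_argb, rgb_t avm)
{
    uint8_t a = (uint8_t)(ui_argb >> 24);
    rgb_t out;
    out.r = blend_channel((uint8_t)(ui_argb >> 16), avm.r, a);
    out.g = blend_channel((uint8_t)(ui_argb >> 8), avm.g, a);
    out.b = blend_channel((uint8_t)ui_argb, avm.b, a);
    return out;
}

/* BT.601 limited-range RGB -> YCbCr (integer approximation).
 * 32896 = 128 * 256 + 128 folds the +128 chroma offset and rounding in
 * before the shift, so the shifted value is never negative. */
static void rgb_to_yuv(rgb_t p, int *y, int *u, int *v)
{
    *y = ((66 * p.r + 129 * p.g + 25 * p.b + 128) >> 8) + 16;
    *u = (-38 * p.r - 74 * p.g + 112 * p.b + 32896) >> 8;
    *v = (112 * p.r - 94 * p.g - 18 * p.b + 32896) >> 8;
}

/* Pack two horizontally adjacent pixels into one UYVY (YUV422) macropixel.
 * The pair shares one U and one V sample, taken here as the rounded average. */
static void pack_uyvy(rgb_t p0, rgb_t p1, uint8_t out[4])
{
    int y0, u0, v0, y1, u1, v1;
    rgb_to_yuv(p0, &y0, &u0, &v0);
    rgb_to_yuv(p1, &y1, &u1, &v1);
    out[0] = (uint8_t)((u0 + u1 + 1) / 2);  /* U  */
    out[1] = (uint8_t)y0;                   /* Y0 */
    out[2] = (uint8_t)((v0 + v1 + 1) / 2);  /* V  */
    out[3] = (uint8_t)y1;                   /* Y1 */
}
```

For example, blending an opaque white UI pixel (0xFFFFFFFF) and a fully transparent one (0x00FFFFFF) over two pure-blue AVM pixels, then packing the pair, yields U=184, Y0=235, V=119, Y1=41.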

SDK Version: 8.4

Our solution is as follows:

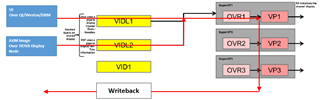

Use the overlay feature of the DSS for blending, then write the blended image back to memory so that it can be fed to CSI-TX.

We believe this solution is within the TDA4VM hardware capabilities, but there are some problems on the software side.

For the software implementation, we have come up with three plans.
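To size the writeback buffers and check the CSI-TX link budget, the arithmetic can be sketched as below. The function names and the `stride_align` parameter are hypothetical, and the resolution and frame rate in the note are only an example, not our actual output format.

```c
#include <stdint.h>

/* Bytes for one packed YUV422 8-bit frame (UYVY/YUYV: 2 bytes per pixel).
 * stride_align is a hypothetical line-pitch alignment in bytes (must be >= 1;
 * pass 1 if the writeback buffer has no padding requirement). */
static uint64_t yuv422_frame_bytes(uint32_t width, uint32_t height, uint32_t stride_align)
{
    uint64_t stride = (uint64_t)width * 2u;
    stride = (stride + stride_align - 1u) / stride_align * stride_align; /* round up */
    return stride * height;
}

/* Raw pixel payload rate in bits per second (16 bits per pixel).
 * CSI-2 packet headers, footers and blanking are NOT included, so the
 * actual lane rate must be higher than this. */
static uint64_t yuv422_payload_bps(uint32_t width, uint32_t height, uint32_t fps)
{
    return (uint64_t)width * height * 16u * fps;
}
```

For example, `yuv422_frame_bytes(1920, 1080, 1)` gives 4,147,200 bytes per frame, and `yuv422_payload_bps(1920, 1080, 30)` gives 995,328,000 bit/s (about 1 Gbit/s) of pixel payload before CSI-2 overhead. We assume at least two such writeback buffers would be needed so that the DSS can write one frame while CSI-TX transmits the other.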

Plan A: Sharing Display

One pipe carries the AVM image (over the RTOS display stack) and another pipe carries the UI (over the Linux display stack). After the Overlay Manager blends them, the blended image is routed to the writeback pipe

and then fed to CSI-TX.

Problem:

The Sharing Display feature was removed as of the SDK 7.1 release.

We can't use it directly.

Why was the Sharing Display feature removed?

Is it possible to port the Sharing Display feature from an older SDK version to 8.4? How difficult would it be?

Plan B: Using the Linux Display Stack

Both the AVM image and the UI image go through the Linux display stack.

Problem 1:

DSS writeback has not been supported in Linux since the SDK 8.0 release.

We would need to port it back.

Problem 2:

Suppose we successfully port Linux WB; how do we feed its data to CSI-TX?

From another thread, we learned that CSI-TX is not supported on Linux.

How can we feed the Linux WB data to the TIOVX CSI-TX node with zero copy?

Problem 3:

How can we feed the AVM image from TIOVX to the Linux display stack, and can this be done with zero copy?

Plan C: Using the RTOS Display Stack

Both the AVM image and the UI image go through the RTOS display stack.

Problem 1:

From another thread, we learned that the TIOVX Display M2M Node does not support blending.

How can we make it support blending?

Problem 2:

Suppose we successfully modify the TIOVX Display M2M Node to support blending.

Can Qt work on the RTOS display stack?