Part Number: TDA4VM

Other Parts Discussed in Thread: TDA4VH

Hi, experts:

On the TDA4VM platform, we found that memcpy becomes much slower when both buffers are first zeroed with memset. The test code is as follows:

    #include <stdio.h>
    #include <stdlib.h>   /* malloc, free */
    #include <string.h>   /* memset, memcpy */
    #include <time.h>     /* clock_gettime */

    #define BUF_SIZE (20 * 1024 * 1024)

    /* Despite the name, this returns the thread CPU time in microseconds. */
    unsigned long long DEMO_TST_GetMs(void)
    {
        struct timespec ts = {0};

        clock_gettime(CLOCK_THREAD_CPUTIME_ID, &ts);
        return (unsigned long long)ts.tv_sec * 1000000ULL + ts.tv_nsec / 1000;
    }

    int main(void)
    {
        int i = 0, j = 0;
        unsigned long long lStart = 0;
        unsigned long long lEnd = 0;
        unsigned long long all = 0;
        void *pa = malloc(BUF_SIZE);
        void *pb = malloc(BUF_SIZE);

        if (pa == NULL || pb == NULL)
        {
            free(pa);
            free(pb);
            return 1;
        }

        /* do memset ? Uncomment both lines for the "with memset" run. */
        //memset(pa, 0, BUF_SIZE);
        //memset(pb, 0, BUF_SIZE);

        for (j = 0; j < 100; j++)
        {
            lStart = DEMO_TST_GetMs();
            int sum = 0;

            /* 100 copies of 20 MiB per timed round */
            for (i = 0; i < 100; i++)
            {
                sum += i;
                memcpy(pa, pb, BUF_SIZE);
            }

            lEnd = DEMO_TST_GetMs();
            all += lEnd - lStart;
            /* per-round time and running average, both in microseconds */
            //printf("%llu, %llu\n", lEnd - lStart, all / (j + 1));
        }

        free(pb);
        free(pa);
        return 0;
    }

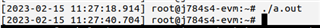

memcpy test without memset: about 27 seconds in total (L1-dcache load miss rate 0.16%)

    Performance counter stats for 'memcpy_noset':

           27379.08 msec task-clock                #    0.997 CPUs utilized
               3408      context-switches          #    0.124 K/sec
                  1      cpu-migrations            #    0.000 K/sec
                669      page-faults               #    0.024 K/sec
        54712024432      cycles                    #    1.998 GHz
        32828016573      instructions              #    0.60  insn per cycle
    <not supported>      branches
             418668      branch-misses
        39342461969      L1-dcache-loads
           61556500      L1-dcache-load-misses     #    0.16% of all L1-dcache accesses
        26222847268      L1-dcache-stores

       27.270167320 seconds time elapsed

       27.090683000 seconds user
        0.011870000 seconds sys

memcpy test with memset: about 84 seconds elapsed, 83.8 seconds of user time (L1-dcache load miss rate 0.44%)

    Performance counter stats for 'memcpy':

           85824.02 msec task-clock                #    0.997 CPUs utilized
              10882      context-switches          #    0.127 K/sec
                  0      cpu-migrations            #    0.000 K/sec
                677      page-faults               #    0.008 K/sec
       171502032179      cycles                    #    1.998 GHz
        32956132885      instructions              #    0.19  insn per cycle
    <not supported>      branches
            1252779      branch-misses
        39390732794      L1-dcache-loads
          171766881      L1-dcache-load-misses     #    0.44% of all L1-dcache accesses
        26245423599      L1-dcache-stores

       84.339231775 seconds time elapsed

       83.769493000 seconds user
        0.015800000 seconds sys

Thanks

quanli