Hi,

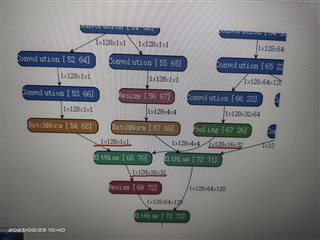

When we ran TI_DEVICE_a72_test_dl_algo_host_rt.out with a 16-bit model on the EVM (the same model had already run inference successfully on the PC),

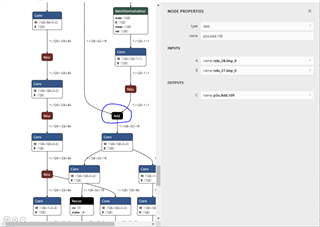

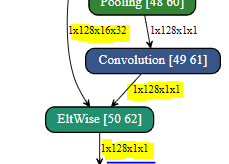

the program hung as shown in the figures below, and we had to terminate it manually after a long wait.

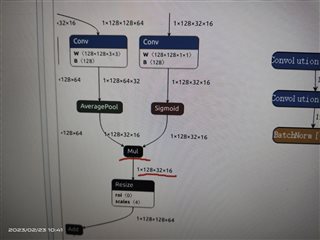

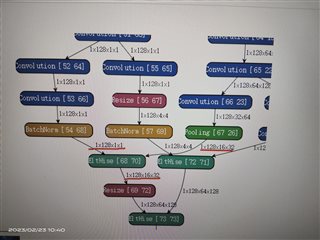

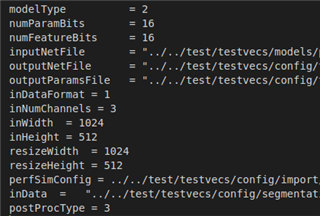

The parameters in our import configuration file are shown in the figure below:

Questions:

1. Why does inference with this model pass on the PC but fail to complete on the EVM?

2. Could you please take a look at our config file above? Are we missing any parameters that could cause the program to hang?

3. Which parameters should be included in the import config file to import the model with 16-bit precision?

Looking forward to your reply!

Regards,

Kong