Hello,

I am using TDA4VH and TDA4VM boards to verify the EST function. The SDK version is 8.5. The TDA4VH board uses eth3 in Native Driver mode to send UDP packets, 80 bytes in length, and the TDA4VM board receives them on eth0.

The gate control list is set up with the following script:

#!/bin/sh

ifconfig eth4 down

ifconfig eth1 down

ifconfig eth2 down

ifconfig eth3 down

ethtool -L eth3 tx 2

sleep 5

ethtool --set-priv-flags eth3 p0-rx-ptype-rrobin off

phc2sys -s clock_realtime -c eth3 -m -O 0 > /dev/null &

ip link set dev eth3 up

sleep 5

tc qdisc replace dev eth3 parent root handle 100 taprio \

num_tc 2 \

map 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 \

queues 1@0 1@1 \

base-time 0 \

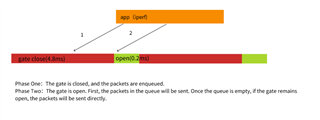

sched-entry S 2 5000 \

sched-entry S 1 40000 \

flags 2

tc qdisc add dev eth3 clsact

tc filter add dev eth3 egress protocol ip prio 1 u32 match ip dport 5003 0xffff action skbedit priority 3
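For reference, here is a small sketch that decodes the `map` and `sched-entry` values from the script above, to show which traffic class each stream should land in and how long its gate is open per cycle. The function names (`tc_for_prio`, `open_ns_for_tc`, `cycle_ns`) are made up for this illustration. It assumes that bit N of the gate mask controls traffic class N, and that the port 5000 stream, which matches no filter, keeps the default priority 0. It only does arithmetic on the configured values and does not query the hardware.

```shell
#!/bin/sh
# Values copied from the taprio command in the script above.
PRIO_MAP="0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0"  # index = skb priority, value = traffic class
SCHED="2:5000 1:40000"                       # gate mask : interval in ns

# Print the traffic class that a given skb priority maps to.
tc_for_prio() {
    prio=$1
    i=0
    for tc in $PRIO_MAP; do
        if [ "$i" -eq "$prio" ]; then
            echo "$tc"
            return 0
        fi
        i=$((i + 1))
    done
    return 1
}

# Print the time (ns) per cycle during which the gate of a traffic class is open.
open_ns_for_tc() {
    tc=$1
    total=0
    for entry in $SCHED; do
        mask=${entry%%:*}
        interval=${entry##*:}
        # Assumption: bit N of the gate mask controls traffic class N.
        if [ $(( ($mask >> $tc) & 1 )) -eq 1 ]; then
            total=$((total + interval))
        fi
    done
    echo "$total"
}

# Print the cycle time (ns), i.e. the sum of all schedule intervals.
cycle_ns() {
    total=0
    for entry in $SCHED; do
        total=$((total + ${entry##*:}))
    done
    echo "$total"
}

# Priority 3 is what the filter sets for port 5003.
# Priority 0 is the assumed default for port 5000 (no filter match).
for prio in 3 0; do
    tc=$(tc_for_prio "$prio")
    echo "priority $prio -> TC$tc, gate open $(open_ns_for_tc "$tc") ns of $(cycle_ns) ns"
done
```

Under these assumptions, the sketch puts priority 3 (port 5003) in TC1 with a 5000 ns window, and priority 0 in TC0 with a 40000 ns window, out of a 45000 ns cycle.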

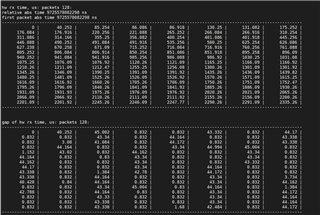

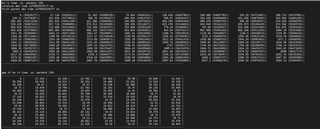

When I send a UDP stream on port 5003, the result is correct.

But when I send a UDP stream on port 5000, the result is wrong.

I think the port 5000 stream should be sent only within the 5 µs window rather than continuously, but that is not what I see. Why is that?

Best regards!