Other Parts Discussed in Thread: TDA4VH, TDA4VM

Hi,

We have some questions about inference on the TDA4VH. Our model runs inference correctly on the TDA4VM, but after migrating to the TDA4VH we ran into problems, which I describe below.

First, inference gets stuck during conv inference, as shown below:

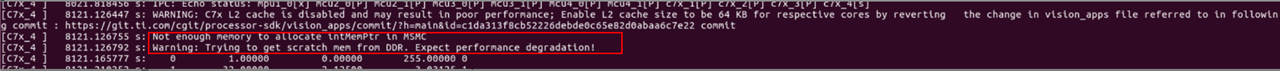

These logs show several errors and warnings, which lead to the following questions.

1. What causes the error about subHandle, and how can we fix it?

2. The C7x log shows 'Not enough memory to allocate intMemPtr in MSMC' and 'Warning: Try to get scratch mem from DDR, Expect performance degration'. Can these two issues cause model inference to get stuck?

We tried increasing the MSMC memory and the C7x scratch memory, but inference still gets stuck.

3. The graph below shows 'Insufficient memory to backup context buffers for pre-emption'. Which memory block causes this problem, and how can we fix it?