We installed the tools following the official documentation, but encountered the following issues during installation:

Please refer to the attached output_log.txt for the installation and detailed execution steps.

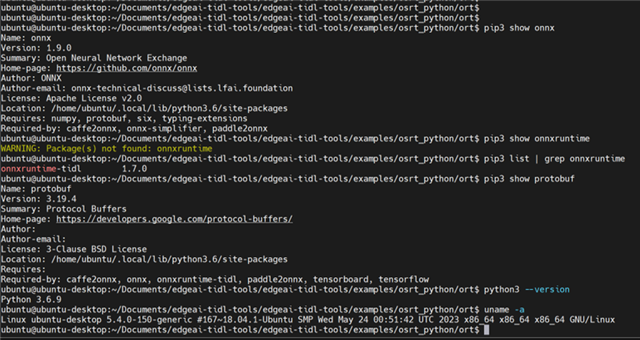

The following is our operating environment:

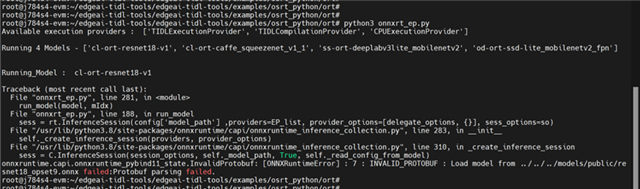

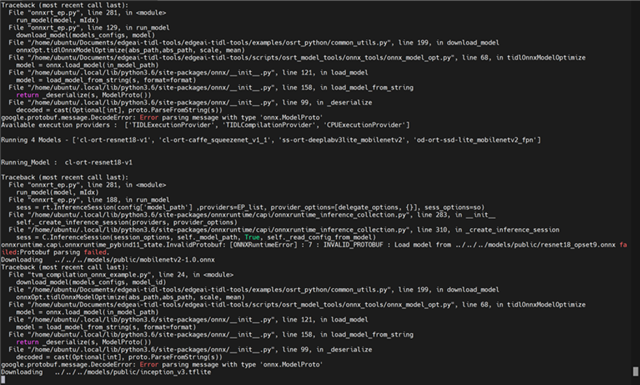

Question 1: When we run `source ./scripts/run_python_examples.sh` in the edgeai-tidl-tools directory, we get the following errors:

`google.protobuf.message.DecodeError: Error parsing message with type 'onnx.ModelProto'`

and `onnxruntime.capi.onnxruntime_pybind11_state.InvalidProtobuf: [ONNXRuntimeError] : 7 : INVALID_PROTOBUF : Load model from ../../../models/public/resnet18_opset9.onnx failed:Protobuf parsing failed.`

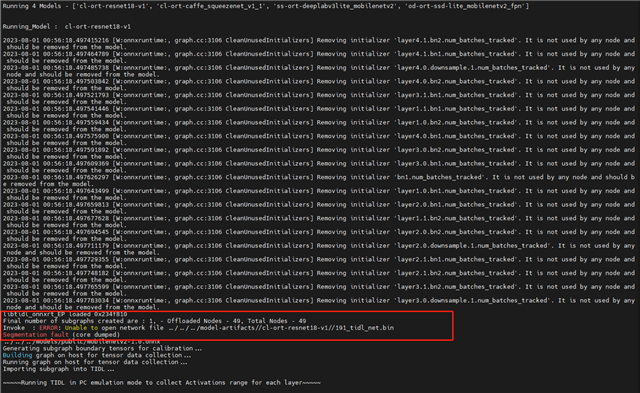

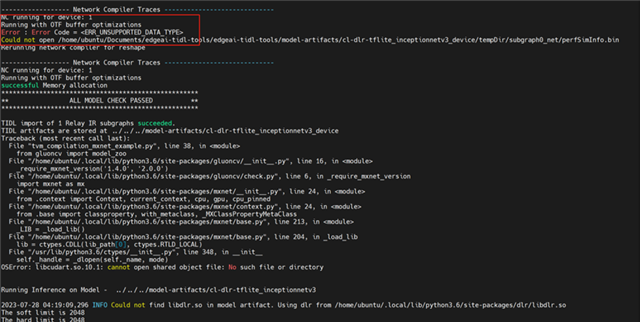

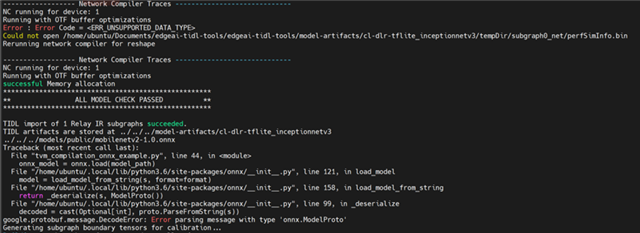

and `Error : Error Code = <ERR_UNSUPPORTED_DATA_TYPE>`

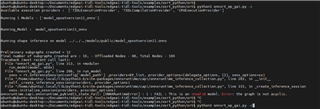

and `Could not open /home/ubuntu/Documents/edgeai-tidl-tools/edgeai-tidl-tools/model-artifacts/cl-dlr-tflite_inceptionnetv3/tempDir/subgraph0_net/perfSimInfo.bin`
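One possible cause of the protobuf parse failure on `resnet18_opset9.onnx` is a model file that was not fully downloaded. The minimal, stdlib-only sketch below is one way we could rule that out. `diagnose_model_file` is a hypothetical helper. The HTML and Git LFS signatures and the 1 KiB size threshold are our own heuristics (assumptions), not something taken from the TIDL documentation.

```python
import sys
from pathlib import Path

# Heuristic signatures of files that are NOT a serialized ONNX model.
# Assumption: a failed download may leave an HTML error page or a
# Git LFS pointer file in place of the real binary model.
SUSPICIOUS_PREFIXES = {
    b"<": "looks like HTML/XML text (failed download?)",
    b"version https://git-lfs": "looks like a Git LFS pointer, not the model",
}

# Assumption: any real model is far larger than 1 KiB.
MIN_SIZE_BYTES = 1024


def diagnose_model_file(path):
    """Return a short diagnosis string for a suspected ONNX model file."""
    p = Path(path)
    if not p.is_file():
        return "missing"
    size = p.stat().st_size
    if size == 0:
        return "empty file"
    # Only the first bytes are needed to spot text masquerading as a model.
    with p.open("rb") as f:
        head = f.read(64).lstrip()
    for prefix, reason in SUSPICIOUS_PREFIXES.items():
        if head.startswith(prefix):
            return reason
    if size < MIN_SIZE_BYTES:
        return f"suspiciously small ({size} bytes)"
    return "ok (no obvious corruption; full validation needs the onnx package)"


if __name__ == "__main__":
    # Default to the path reported in the ONNX Runtime error above;
    # other model paths can be passed as command-line arguments.
    paths = sys.argv[1:] or ["../../../models/public/resnet18_opset9.onnx"]
    for path in paths:
        print(f"{path}: {diagnose_model_file(path)}")
```

The script would be run from the same directory as the example script (so the relative path resolves), or given the model path as an argument. If it reports "ok", `onnx.checker.check_model(onnx.load(path))` from the `onnx` package would give a stricter validation.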

Question 2: We copied `model-artifacts/models/` to the TDA4VH-Q1 development board. When we run `examples/osrt_python/ort/onnxrt_ep.py`, we encounter the following errors: