Hello. I develop guitar pedals. My previous hardware platform was built around a dedicated DSP and an audio codec. That setup let me capture, process, and output audio in a sample-by-sample pipeline with minimal delay and no block buffering.

I'm now working on a new hardware platform based on the AM62 SoC. For initial testing I'm using the SK-AM62 EVM running Processor SDK RT-Linux for AM62x, and I use the ALSA library (alsa-lib) from C++ to capture and play back audio.

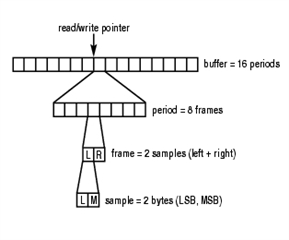

I've run into a problem: using the ALSA API, I can't reduce the input and output buffers below 64 samples. However, according to the documentation for the McASP interface and the TLV320AIC3106IRGZT codec, neither imposes a minimum buffer size, so this limit appears to be purely a software constraint.

Could you please advise how to reduce the input and output buffers to as few as 8 samples?
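To put the 64 vs. 8 sample difference in time units, here is a small arithmetic sketch. The post does not state a sample rate, so 48 kHz is an assumption. The loop estimate is also an assumption: it counts one capture period plus a playback buffer of `playback_periods` periods and ignores McASP FIFO and codec converter delays, so treat it as a rough lower bound rather than a measured figure.

```cpp
#include <cassert>

// Time covered by one period of `frames` frames at `rate_hz`, in milliseconds.
double period_ms(unsigned frames, unsigned rate_hz) {
    assert(rate_hz > 0);
    return 1000.0 * frames / rate_hz;
}

// Rough input-to-output delay for a simple read-process-write loop:
// one capture period plus a playback buffer of `playback_periods` periods.
// Assumption: hardware FIFO and converter delays are ignored.
double loop_latency_ms(unsigned frames, unsigned rate_hz, unsigned playback_periods) {
    return period_ms(frames, rate_hz) * (1 + playback_periods);
}
```

At an assumed 48 kHz, `period_ms(64, 48000)` is about 1.33 ms and `period_ms(8, 48000)` about 0.17 ms; with a two-period playback buffer the loop estimate drops from about 4 ms to about 0.5 ms.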