Other Parts Discussed in Thread: TMDSICE3359, AM3359, TLK110

Hello all,

My customer is working with the AM3356 device and has reported the problem below. Before going into the detailed description, I would like to highlight the following information:

- The main contact person (lead engineer) has been assigned to manage this post. Please make sure to communicate any updates to him;

- TI has unfortunately not yet been able to reproduce the problem seen on the customer side. This is where most of the effort has been focused;

- Please consider the customer’s offer of sending their board to TI for further analysis in case the problem cannot be reproduced at all.

Dear customer, please fill in any information you feel is missing. I hope this clarifies the current project status and helps us work as a unit, with centralized updates for everyone.

Here is the problem description:

"We have a problem with your PRU firmware for AM3356.

We are currently in the test lab with our Profinet card. Here we get the feedback that our Profinet IRT switch in MRP mode swallows telegrams at high bus load and thus cuts off downstream components on the bus.

This is extremely unfavorable, since it is not always just one packet that is discarded; sometimes several packets are missing, and as a result other components in the network fail.

As you already know, we have developed our Profinet card with the help of the TI Third Party.

They have already looked into the problem and found out that the cause is in the PRU firmware.

The following error description has already been sent to your colleagues in India:

Our customer has a PROFINET door control product that is based on the AM3356 and provides either a PROFINET IRT interface or a PROFINET RT interface with MRP. I will simply call this product the DUT. It is being tested in the test laboratory of a big automotive company, where it is running PROFINET RT in an MRP ring. The problem is that PROFINET devices behind the DUT sometimes lose their PROFINET connection, although the DUT is expected to simply forward the cyclic data frames for all devices behind it. The DUT itself does not lose its connection. If the DUT is replaced by a third-party device, there are no connection losses.

This automotive company is a very important customer for this product, and it is important for us to find a solution.

The topology is shown in the appended PDF. The MRP ring contains 5 clients. There is a stitch line using a PROFINET switch. On this stitch line, about 100 PROFINET devices are simulated using a Siemens SIMBA PNIO box. During connection setup, the cable between the MRP manager and Device 3 is open, so that all MRP ring traffic and all traffic from/to the 100 simulated devices goes through the DUT. After connection setup, the MRP ring is closed (cable between the MRP manager and Device 3 connected) and this installation is run for multiple hours. The cyclic frames before and after the DUT are inspected by a Siemens BANY PNIO network analyser, which generated the appended PCAP file. Each cyclic frame that goes through the DUT is logged twice, once before and once after the DUT, with a slight time difference. This PCAP file shows a single cyclic frame loss:

- Use eth.src == 08:00:06:9D:37:BB as filter in Wireshark

- Go to frame number 124415.

This frame was (presumably) dropped by the Ethernet switch in the DUT. This is a single frame loss. Because there were connection losses on the devices behind the DUT, it is assumed that there are also losses of three or more cyclic frames from a single device.
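The "logged twice" property described above can be used to search a capture for dropped frames automatically. The following is only a minimal sketch under several assumptions that are not from the original report: the frames have already been exported from the PCAP into records, the `Obs` record and both function names are hypothetical, the source MAC plus the PROFINET cycle counter is assumed to identify one frame uniquely (plausible only if the capture is shorter than the wrap-around time of the 16-bit counter), and every record is assumed to belong to a stream that really passes through the DUT.

```python
from collections import defaultdict
from typing import NamedTuple


class Obs(NamedTuple):
    """One captured frame (hypothetical record, filled from a PCAP export)."""
    ts: float    # capture timestamp in seconds
    src: str     # source MAC address
    cycle: int   # cycle counter of the cyclic frame (assumed unique per capture)


def _group(observations):
    """Map (src, cycle) -> list of timestamps at which that frame was seen."""
    seen = defaultdict(list)
    for ob in observations:
        seen[(ob.src, ob.cycle)].append(ob.ts)
    return seen


def find_unpaired(observations):
    """Return (ts, src, cycle) for every frame that was captured only once."""
    return sorted(
        (ts[0], src, cycle)
        for (src, cycle), ts in _group(observations).items()
        if len(ts) == 1
    )


def longest_loss_run(observations):
    """Per source MAC, the longest run of consecutive single-copy frames."""
    per_src = defaultdict(list)
    for (src, _cycle), ts in _group(observations).items():
        per_src[src].append((min(ts), len(ts) == 1))
    result = {}
    for src, frames in per_src.items():
        run = best = 0
        for _ts, lost in sorted(frames):   # walk frames in time order
            run = run + 1 if lost else 0
            best = max(best, run)
        result[src] = best
    return result
```

A run length of three or more for one source would match the assumption above about multi-frame losses. Frames captured at the very end of the file may appear unpaired only because their second copy falls outside the capture, so hits near the end should be checked by hand.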

The intended network load is about 25% on average, with some peaks. I have analysed the PCAP file, which is 1 s long. Wireshark statistics state that the network load in this file is about 70%. The file contains 160 different MAC addresses. I had a look at ICSS EMAC learning: it uses 256 buckets with 4 entries each. Applying the ICSS EMAC hash (a simple bytewise XOR) to these MAC addresses, I get a maximum of 3 entries per bucket, so there is no bucket overflow.
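The bucket check described above can be repeated on any list of MAC addresses with a few lines of Python. This is a sketch based only on the description in this post (256 buckets, 4 entries each, hash equal to the bytewise XOR of the six address octets); the function names are hypothetical, and the actual firmware hash may differ in details not stated here.

```python
from collections import Counter
from functools import reduce

NUM_BUCKETS = 256        # bucket count stated in the post
ENTRIES_PER_BUCKET = 4   # entries per bucket stated in the post


def xor_hash(mac: str) -> int:
    """Bytewise XOR of the six MAC octets, giving a bucket index 0..255."""
    octets = bytes.fromhex(mac.replace(":", "").replace("-", ""))
    if len(octets) != 6:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return reduce(lambda acc, byte: acc ^ byte, octets, 0) % NUM_BUCKETS


def bucket_occupancy(macs) -> Counter:
    """Count how many distinct MAC addresses fall into each bucket."""
    unique = {m.replace("-", ":").upper() for m in macs}
    return Counter(xor_hash(m) for m in unique)


def overflowing_buckets(macs) -> dict:
    """Buckets that would need more entries than one bucket can hold."""
    return {b: n for b, n in bucket_occupancy(macs).items()
            if n > ENTRIES_PER_BUCKET}


if __name__ == "__main__":
    # Source address used in the Wireshark filter of this post.
    print(hex(xor_hash("08:00:06:9D:37:BB")))  # 0x1f
```

Feeding the 160 addresses from the capture into `bucket_occupancy` and taking the maximum count should reproduce the "maximum of 3 entries per bucket" figure if the hash assumption holds; an empty result from `overflowing_buckets` corresponds to "no bucket overflow".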

The DUT is based on:

- AM3356

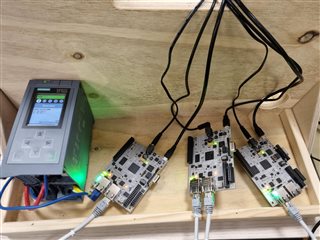

- Hardware is built quite similar to the TMDSICE3359

- DP83822 Ethernet PHY

- PRU-ICSS-Profinet_Slave_01.00.03.04

- pdk_am335x_1_0_12

Do you have any idea what could cause these cyclic frame losses?

Is it possible for you to reproduce this, e.g. by using a TMDSICE3359?

We don’t have a SIMBA simulator box; do you have one?"

Thank you for your ongoing support and efforts.