We are currently working with the MCU+-SDK (version 08.06.00.43) and the AM64xx-EVM. In particular, we are concerned with Ethernet communication via PRU_ICSSG.

We have successfully got it running with the ENET/LWIP/ICSSG application software provided by the MCU+-SDK, using ENET_ICSSG_DUALMAC mode.

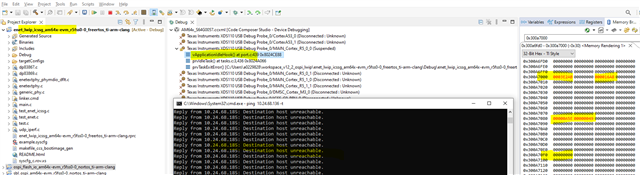

When we halt the host processor with the debugger, the received-frames and received-bytes counters in the PA and MAC port ICSSG statistics keep counting up. This is what we expect, because initially there is no reason for the PRU_ICSSG to stop working normally.

However, the halted host processor no longer returns free RX resources to the PRU_ICSSG. We therefore expect the PRU_ICSSG to start discarding received frames once all available RX resources are exhausted.

In practice, however, none of the PA or MAC port statistics counters increments to indicate such an event. In particular, all counters with 'OVERFLOW' or 'DROP' in their names remain at zero.

Our questions:

(1) How does the PRU_ICSSG handle received frames after it has run out of RX resources?

(2) Does the PRU_ICSSG notify the host processor in some way when this situation has occurred? If so, how exactly?

Thanks a lot in advance for your answer. Any support is highly appreciated.