1 I am adapting the latest M-core software development package. The A-core Linux version is 09.0000.03 and the M-core version is 09_00_00_19.

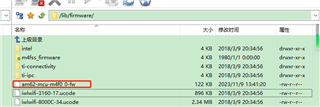

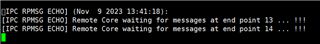

2 I am testing the ipc_rpmsg_echo_linux example (m4fss0-0_freertos). I compiled the M-core program with CCS and put am62-mcu-m4f0_0-fw under the /lib/firmware directory, so that it is loaded automatically at boot. This part works fine.

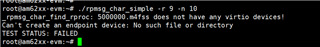
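
For anyone reproducing step 2, here is a minimal sketch of how the firmware load can be checked from the Linux side. It assumes the standard kernel remoteproc sysfs layout under `/sys/class/remoteproc`, where each instance exposes `name`, `firmware`, and `state` attributes. The function name `list_remoteprocs` is hypothetical, and the sketch does not assume which instance number belongs to the M4F core.

```python
from pathlib import Path


def list_remoteprocs(sysfs_root="/sys/class/remoteproc"):
    """Return one dict per remoteproc instance found under sysfs_root.

    Assumption: each instance directory contains the text attributes
    'name', 'firmware' and 'state' (standard remoteproc sysfs layout).
    """
    root = Path(sysfs_root)
    results = []
    if not root.is_dir():
        # No remoteproc class directory: nothing to report.
        return results
    for entry in sorted(root.iterdir()):
        info = {"instance": entry.name}
        for attr in ("name", "firmware", "state"):
            try:
                info[attr] = (entry / attr).read_text().strip()
            except OSError:
                # Attribute missing or unreadable on this kernel.
                info[attr] = None
        results.append(info)
    return results


if __name__ == "__main__":
    for rp in list_remoteprocs():
        print(rp["instance"], rp["name"], rp["firmware"], rp["state"])
```

On the board, the instance whose `firmware` field reads `am62-mcu-m4f0_0-fw` would be expected to report the state `running` if the automatic load at boot succeeded.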

3 I compiled the A-core program ti-rpmsg-char and put it under /home/root, but running it produces an error.

4 My hardware is the official TI development board, and I have not made any changes to either the A-core or the M-core software. Why does this error occur?