We compiled our model with SDK 8.6 and it works well for us. After switching to a newer version (SDK 9.1), we encountered the following issues:

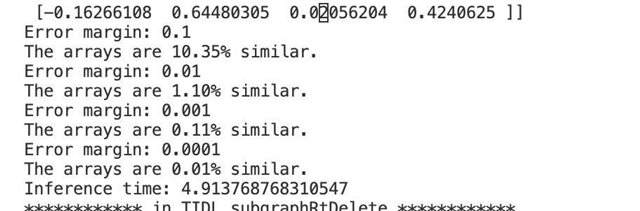

* Large output mismatch after calibration compared to the ONNX model when add_data_ops: 0

* Closer values, but still a large mismatch after calibration compared to the ONNX model, when add_data_ops: 1
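
For reference, here is a minimal sketch of how the mismatch against the ONNX model could be quantified. It assumes the outputs of both models have already been dumped as NumPy arrays for the same input; the function name `compare_outputs` and the choice of metrics are illustrative and not part of the SDK.

```python
import numpy as np


def compare_outputs(reference, candidate, eps=1e-12):
    """Compare two output tensors and return simple mismatch metrics.

    `reference` is the ONNX model output, `candidate` is the output of the
    compiled model. Both are flattened, so only the element count must match.
    """
    ref = np.asarray(reference, dtype=np.float64).ravel()
    cand = np.asarray(candidate, dtype=np.float64).ravel()
    if ref.shape != cand.shape:
        raise ValueError(f"size mismatch: {ref.size} vs {cand.size}")

    diff = cand - ref
    ref_norm = np.linalg.norm(ref)
    cand_norm = np.linalg.norm(cand)
    return {
        # Largest single-element deviation.
        "max_abs_diff": float(np.max(np.abs(diff))),
        # Average deviation over all elements.
        "mean_abs_diff": float(np.mean(np.abs(diff))),
        # Error energy relative to the reference output.
        "relative_l2": float(np.linalg.norm(diff) / (ref_norm + eps)),
        # Close to 1.0 when the outputs point in the same direction.
        "cosine_similarity": float(np.dot(ref, cand) / (ref_norm * cand_norm + eps)),
    }
```

Running this once per variant (add_data_ops: 0 and add_data_ops: 1) gives comparable numbers for each. A cosine similarity close to 1 together with a large absolute difference would point to a scaling problem rather than a completely wrong output.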

It seems that the default add_data_ops: 0 does not work and produces bad outputs even for a simple neural network (only Add, Conv and Relu operations).

This model should compile easily with SDK 9.1, but that is not what we see.

Here I provide the assets to compile the model, a readme explaining how to run this example, inference, the ONNX model, and logs for both variants. I believe it should work for add_data_ops: 0 too. Could somebody help me determine what is wrong?