Other Parts Discussed in Thread: TDA4VM

Hi experts,

I am trying to import the MobileStereoNet model using edgeai-tidl-tools. However, when running tidlOnnxModelOptimize, it reports that the model is invalid.

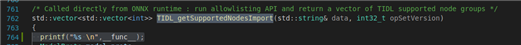

After examining the code, it seems that this function only supports single-input models:

![]()
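
For anyone reproducing this, here is a minimal sketch for confirming how many runtime inputs an ONNX model exposes. It assumes the `onnx` Python package is installed. The helper names `runtime_input_names` and `load_runtime_input_names` are hypothetical and not part of edgeai-tidl-tools, and the tensor names in the demo are made up. Initializers are filtered out because older ONNX exports can list weights among the graph inputs.

```python
from types import SimpleNamespace


def runtime_input_names(graph):
    """Return the names of graph inputs that are not backed by an initializer."""
    initializer_names = {init.name for init in graph.initializer}
    return [inp.name for inp in graph.input if inp.name not in initializer_names]


def load_runtime_input_names(model_path):
    """Load an ONNX file and list its runtime inputs (requires the onnx package)."""
    import onnx  # imported here so the helper above works without onnx installed

    model = onnx.load(model_path)
    return runtime_input_names(model.graph)


if __name__ == "__main__":
    # Stand-in graph with made-up names, only to illustrate the filtering step.
    demo_graph = SimpleNamespace(
        input=[
            SimpleNamespace(name="left"),
            SimpleNamespace(name="right"),
            SimpleNamespace(name="conv1.weight"),
        ],
        initializer=[SimpleNamespace(name="conv1.weight")],
    )
    print(runtime_input_names(demo_graph))
```

For a real model, `load_runtime_input_names("model.onnx")` (placeholder path) would be expected to return two names for a stereo network that takes left and right images.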

Could you please advise how to import models with multiple inputs? We are working with customers who are developing stereo applications on the AM62A.