Hi,

I am trying to run the EfficientDet model (https://github.com/TexasInstruments/edgeai-modelzoo/blob/main/modelartifacts/AM69A/8bits/od-2150_tflitert_coco_google-automl_efficientdet_lite1_relu_tflite.tar.gz.link) from the model zoo. Since it is a precompiled model, I tried to use it as is.

The issue I am facing is as follows:

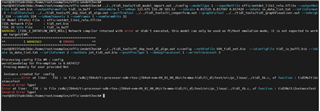

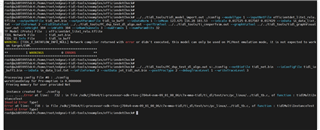

When I run inference using the TFLite Python example (https://github.com/TexasInstruments/edgeai-tidl-tools/tree/master/examples/osrt_python/tfl), I see the following:

- Inside the TIDL Docker-based environment, with TIDL artifacts: output is produced

- Inside the TIDL Docker-based environment, without TIDL artifacts: output is produced

- On the AM69A device, with TIDL artifacts: no output is produced

- On the AM69A device, without TIDL artifacts: output is produced

The model and the artifacts are the same in all four cases, and I have not modified anything in the example code. Please let me know what could be causing this.
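
For reference, this is roughly how I understand the difference between the "with TIDL artifacts" and "without TIDL artifacts" runs. It is only a minimal sketch, not the example's actual code. It assumes `tflite_runtime` is installed, that the inference delegate library is named `libtidl_tfl_delegate.so`, and that the delegate accepts an `artifacts_folder` option; the function names and paths are hypothetical.

```python
def build_delegate_options(artifacts_dir):
    """Options handed to the TIDL delegate (assumed key: 'artifacts_folder')."""
    return {"artifacts_folder": artifacts_dir}


def make_interpreter(model_path, artifacts_dir=None,
                     delegate_lib="libtidl_tfl_delegate.so"):
    """Create a TFLite interpreter, offloading to TIDL only if artifacts are given.

    With artifacts_dir=None the model runs entirely on the CPU, which
    corresponds to the "without TIDL artifacts" cases above.
    """
    # Imported lazily so the helper above can be used without tflite_runtime.
    import tflite_runtime.interpreter as tflite

    delegates = None
    if artifacts_dir is not None:
        # Assumed delegate library name; it may differ between SDK versions.
        delegate = tflite.load_delegate(
            delegate_lib, build_delegate_options(artifacts_dir)
        )
        delegates = [delegate]

    interpreter = tflite.Interpreter(
        model_path=model_path, experimental_delegates=delegates
    )
    interpreter.allocate_tensors()
    return interpreter
```

Only the combination of device plus delegate fails for me, so the CPU path and the model file itself appear to be fine.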

Version: 09_01_00_05 for TIDL, with the corresponding SDK on the device.

I also tried compiling the model myself, but the behavior is the same.

Thanks

Akhilesh