Hello,

I have followed the MATLAB code given in "[FAQ] TDA4VM: How to create a LDC mesh LUT for fisheye distortion correction on TDA4?" (ported to Python) to generate the bin file, using my camera parameters and transformation matrix:

pitch_in_mm= 0.003

f_in_mm= 3.0

w= 1920

h= 1080

s=2

m=4

tr_front = np.array([[1.29623404e+00, -3.33760860e-01, 0],

[3.44805409e-02, 2.91188168e+00, 0],

[1.24291410e-04, -1.15834076e-04, 1.00000000e+00]])

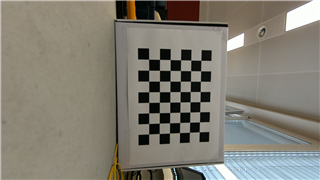

With these parameters I get a correctly undistorted image.

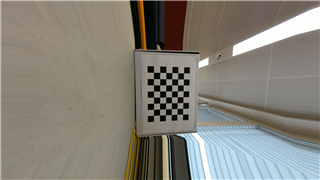

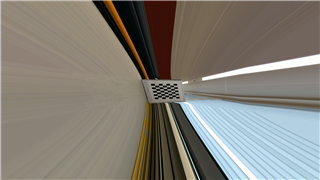

But when I multiply the transformation matrix (tr_front) with xt, yt, zt, I get the following image:

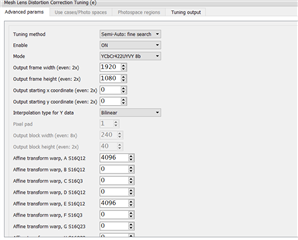

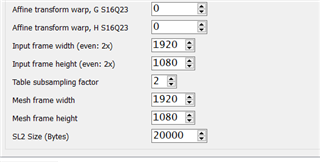
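For reference, here is a minimal sketch of what applying a 3x3 matrix such as tr_front to a coordinate grid usually looks like. It is not the FAQ code. The helper name `apply_homography` is hypothetical, and the sketch assumes that the matrix acts on homogeneous pixel coordinates (x, y, 1) and that the result has to be divided by zt before it is used further. Whether tr_front or its inverse is the right matrix depends on the direction in which it was estimated, which this sketch does not settle.

```python
import numpy as np

def apply_homography(tr, x, y):
    """Apply a 3x3 matrix to coordinate grids x, y (hypothetical helper).

    Each point is treated as the homogeneous vector (x, y, 1). The
    transformed coordinates are divided by zt (perspective divide).
    """
    pts = np.stack([x.ravel(), y.ravel(), np.ones(x.size)])  # shape (3, N)
    xt, yt, zt = tr @ pts
    return (xt / zt).reshape(x.shape), (yt / zt).reshape(x.shape)

tr_front = np.array([[1.29623404e+00, -3.33760860e-01, 0],
                     [3.44805409e-02,  2.91188168e+00, 0],
                     [1.24291410e-04, -1.15834076e-04, 1.00000000e+00]])

w, h = 1920, 1080
# Full-resolution grid with one extra row and column (an assumption
# about the grid layout, not taken from the FAQ script).
x, y = np.meshgrid(np.arange(w + 1, dtype=float),
                   np.arange(h + 1, dtype=float))

xw, yw = apply_homography(tr_front, x, y)

# If the matrix was estimated in the opposite direction, the inverse
# would be used instead:
xi, yi = apply_homography(np.linalg.inv(tr_front), x, y)
```

Skipping the division by zt, or mixing pixel coordinates with coordinates that are centred on the optical axis, are two common ways for such a step to produce a heavily stretched image.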

My settings in the DCC tool file look like this:

I have tried changing the value of 'm', but it does not seem to make any difference. However, when I reduce the value of 's' to 0.39, I get a zoomed-in image.
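The arithmetic behind these two observations can be sketched as follows. This is only an illustration under assumptions: it assumes the script places the output plane at z = f_in_pixels / s and samples the mesh every 2^m pixels, and the helper name `mesh_params` and the mesh size formula are hypothetical.

```python
import math

pitch_in_mm = 0.003
f_in_mm = 3.0
w, h = 1920, 1080

def mesh_params(s, m):
    """Derived quantities for a given s and m (hypothetical helper)."""
    f_in_pixels = f_in_mm / pitch_in_mm   # focal length in pixels
    z = f_in_pixels / s                   # assumed distance of the output plane
    step = 2 ** m                         # assumed mesh sampling step in pixels
    # Half of the horizontal field of view covered by the output image.
    half_fov_deg = math.degrees(math.atan2(w / 2, z))
    # Assumed mesh size: one sample per step plus the closing edge.
    mesh_cols = math.ceil(w / step) + 1
    mesh_rows = math.ceil(h / step) + 1
    return z, step, half_fov_deg, mesh_cols, mesh_rows

for s, m in [(2, 4), (2, 3), (0.39, 4)]:
    z, step, half_fov_deg, cols, rows = mesh_params(s, m)
    print(f"s={s} m={m}: z={z:.1f} step={step} "
          f"half FOV={half_fov_deg:.1f} deg mesh={cols}x{rows}")
```

Under these assumptions a smaller 's' gives a larger z and a narrower field of view, which would match the zoomed-in result, while 'm' only changes how densely the mesh is sampled and not the geometry.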

I have also tried changing the SL2 Size (bytes) value to 30,000, but that does not make any difference either.

Can you please tell me what I am doing wrong here?

The MATLAB code is already in the link, so I am not pasting it again. But if you want to see my Python code, please let me know.

Thank you very much in advance.