Hi,

Since I could not calibrate my custom ONNX model successfully with the TIDL tools, I used the onnxruntime quantizer to quantize and calibrate it, as recommended in https://github.com/TexasInstruments/edgeai-tidl-tools/blob/master/docs/tidl_fsg_quantization.md.

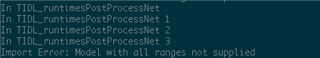

I set the "advanced_options:prequantized_model" compilation parameter to 1. With tensor_bits set to 32, the model compiles without an error, but the output is incorrect. With tensor_bits set to 8 or 16, the model does not compile: the compilation process stops after an "Import Error" message.
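For reference, the configuration described above corresponds roughly to the sketch below. It is a minimal illustration, not the exact script: the helper names `build_compile_options` and `compile_model`, the paths, and the artifacts folder are placeholders, and the `tidl_tools_path` and `artifacts_folder` keys are assumed from the usual edgeai-tidl-tools examples. Only `tensor_bits` and `advanced_options:prequantized_model` are taken from the description.

```python
import os


def build_compile_options(tensor_bits, tidl_tools_path, artifacts_folder):
    """Build TIDL compile options for a model already quantized with onnxruntime."""
    if tensor_bits not in (8, 16, 32):
        raise ValueError(f"unexpected tensor_bits value: {tensor_bits}")
    return {
        # Assumed keys, following the edgeai-tidl-tools example scripts.
        "tidl_tools_path": tidl_tools_path,
        "artifacts_folder": artifacts_folder,
        # Values taken from the description: 32 compiles, 8 and 16 fail.
        "tensor_bits": tensor_bits,
        "advanced_options:prequantized_model": 1,
    }


def compile_model(model_path, options):
    """Create a compilation session (needs TI's onnxruntime build with TIDL)."""
    import onnxruntime as rt  # imported lazily; not required to build the options

    return rt.InferenceSession(
        model_path,
        providers=["TIDLCompilationProvider", "CPUExecutionProvider"],
        provider_options=[options, {}],
        sess_options=rt.SessionOptions(),
    )


# Placeholder paths; replace with the real tools and artifacts locations.
options = build_compile_options(
    tensor_bits=8,
    tidl_tools_path=os.environ.get("TIDL_TOOLS_PATH", "/path/to/tidl_tools"),
    artifacts_folder="./model-artifacts",
)
print(options)
```

Calling `compile_model("model_qdq.onnx", options)` (with a hypothetical file name for the quantized model) is the step that ends in the "Import Error" for 8 and 16 bits.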

How should I compile the model? Which compilation parameters are recommended?