I downloaded TI's Linux kernel from the following URL and checked out the ti-rt-linux6.6.y-cicd version, which is the RT (real-time) variant of the kernel.

https://git.ti.com/cgit/ti-linux-kernel/ti-linux-kernel/

I am using the following example code, rpmsg_char_simple.c:

/*

* rpmsg_char_simple.c

*

* Simple Example application using rpmsg-char library

*

* Copyright (c) 2020 Texas Instruments Incorporated - https://www.ti.com

*

* Redistribution and use in source and binary forms, with or without

* modification, are permitted provided that the following conditions

* are met:

*

* * Redistributions of source code must retain the above copyright

* notice, this list of conditions and the following disclaimer.

*

* * Redistributions in binary form must reproduce the above copyright

* notice, this list of conditions and the following disclaimer in the

* documentation and/or other materials provided with the distribution.

*

* * Neither the name of Texas Instruments Incorporated nor the names of

* its contributors may be used to endorse or promote products derived

* from this software without specific prior written permission.

*

* THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

* "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

* LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

* A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT

* OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,

* SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT

* LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

* DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY

* THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

* (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

* OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

*

*/

#include <sys/select.h>

#include <sys/types.h>

#include <sys/stat.h>

#include <sys/ioctl.h>

#include <stdint.h>

#include <stddef.h>

#include <fcntl.h>

#include <errno.h>

#include <stdio.h>

#include <string.h>

#include <stdlib.h>

#include <unistd.h>

#include <pthread.h>

#include <time.h>

#include <stdbool.h>

#include <semaphore.h>

#include <linux/rpmsg.h>

#include <ti_rpmsg_char.h>

#define NUM_ITERATIONS 100

#define REMOTE_ENDPT 14

/*

* This test can measure round-trip latencies up to 20 ms

* Latencies measured in microseconds (us)

*/

#define LATENCY_RANGE 20000

int send_msg(int fd, char *msg, int len)

{

int ret = 0;

ret = write(fd, msg, len);

if (ret < 0) {

perror("Can't write to rpmsg endpt device");

return -1;

}

return ret;

}

int recv_msg(int fd, int len, char *reply_msg, int *reply_len)

{

int ret = 0;

/* Note: len should be max length of response expected */

ret = read(fd, reply_msg, len);

if (ret < 0) {

perror("Can't read from rpmsg endpt device");

return -1;

} else {

*reply_len = ret;

}

return 0;

}

/* single thread communicating with a single endpoint */

int rpmsg_char_ping(int rproc_id, char *dev_name, unsigned int local_endpt, unsigned int remote_endpt,

int num_msgs)

{

int ret = 0;

int i = 0;

int packet_len;

char eptdev_name[64] = { 0 };

/*

* Each RPMsg packet can have up to 496 bytes of data:

* 512 bytes total - 16 byte header = 496

*/

char packet_buf[496] = { 0 };

rpmsg_char_dev_t *rcdev;

int flags = 0;

struct timespec ts_current;

struct timespec ts_end;

/*

* Variables used for latency benchmarks

*/

struct timespec ts_start_test;

struct timespec ts_end_test;

/* latency measured in us */

int latency = 0;

int latencies[LATENCY_RANGE] = {0};

int latency_worst_case = 0;

double latency_average = 0; /* try double, since long long might have overflowed w/ 1Billion+ iterations */

FILE *file_ptr;

/*

* Open the remote rpmsg device identified by dev_name and bind the

* device to a local end-point used for receiving messages from

* remote processor

*/

sprintf(eptdev_name, "rpmsg-char-%d-%d", rproc_id, getpid());

rcdev = rpmsg_char_open(rproc_id, dev_name, local_endpt, remote_endpt,

eptdev_name, flags);

if (!rcdev) {

perror("Can't create an endpoint device");

return -EPERM;

}

printf("Created endpt device %s, fd = %d port = %d\n", eptdev_name,

rcdev->fd, rcdev->endpt);

printf("Exchanging %d messages with rpmsg device %s on rproc id %d ...\n\n",

num_msgs, eptdev_name, rproc_id);

clock_gettime(CLOCK_MONOTONIC, &ts_start_test);

for (i = 0; i < num_msgs; i++) {

memset(packet_buf, 0, sizeof(packet_buf));

/* minimum test: send 1 byte */

sprintf(packet_buf, "h");

/* "normal" test: do the hello message */

//sprintf(packet_buf, "hello there %d!", i);

/* maximum test: send 496 bytes */

//sprintf(packet_buf, "0123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345");

packet_len = strlen(packet_buf);

/* double-check: is packet_len changing, or fixed at 496? */

//printf("packet_len = %d\n", packet_len);

/* remove prints to speed up the test execution time */

//printf("Sending message #%d: %s\n", i, packet_buf);

clock_gettime(CLOCK_MONOTONIC, &ts_current);

ret = send_msg(rcdev->fd, (char *)packet_buf, packet_len);

if (ret < 0) {

printf("send_msg failed for iteration %d, ret = %d\n", i, ret);

goto out;

}

if (ret != packet_len) {

printf("bytes written does not match send request, ret = %d, packet_len = %d\n",

ret, packet_len);

goto out;

}

ret = recv_msg(rcdev->fd, packet_len, (char *)packet_buf, &packet_len);

clock_gettime(CLOCK_MONOTONIC, &ts_end);

if (ret < 0) {

printf("recv_msg failed for iteration %d, ret = %d\n", i, ret);

goto out;

}

/* latency measured in usec */

latency = (int)(((long long)(ts_end.tv_sec - ts_current.tv_sec) * 1000000000LL + (ts_end.tv_nsec - ts_current.tv_nsec)) / 1000);

/* if latency is negative or does not fit in the histogram, throw an error and exit */

if (latency < 0 || latency >= LATENCY_RANGE) {

printf("latency is too large to be recorded: %d usec\n", latency);

goto out;

}

/* increment the counter for that specific latency measurement */

latencies[latency]++;

/* remove prints to speed up the test execution time */

//printf("Received message #%d: round trip delay(usecs) = %ld\n", i,(ts_end.tv_nsec - ts_current.tv_nsec)/1000);

//printf("%s\n", packet_buf);

}

clock_gettime(CLOCK_MONOTONIC, &ts_end_test);

/* find worst-case latency */

for (i = LATENCY_RANGE - 1; i > 0; i--) {

if (latencies[i] != 0) {

latency_worst_case = i;

break;

}

}

/* WARNING: The average latency calculation is currently being validated */

/* find the average latency */

for (i = LATENCY_RANGE - 1; i > 0; i--) {

/* e.g., if latencies[60] = 17, that means there was a latency of 60us 17 times */

latency_average = latency_average + ((double)latencies[i] * i) / num_msgs;

}

/* old code from using long long instead of double */

/*latency_average = latency_average / num_msgs;*/

/* export the latency measurements to a file */

file_ptr = fopen("histogram.txt", "w");

if (!file_ptr) {

perror("Can't open histogram.txt");

} else {

/* one line per bin: latency in us, number of samples */

for (i = 0; i < LATENCY_RANGE; i++)

fprintf(file_ptr, "%d , %d\n", i, latencies[i]);

fclose(file_ptr);

}

printf("\nCommunicated %d messages successfully on %s\n\n",

num_msgs, eptdev_name);

printf("Total execution time for the test: %ld seconds\n",

ts_end_test.tv_sec - ts_start_test.tv_sec);

printf("Average round-trip latency: %f\n", latency_average);

printf("Worst-case round-trip latency: %d\n", latency_worst_case);

printf("Histogram data at histogram.txt\n");

out:

/* keep an earlier error code; only overwrite it if closing also fails */

if (rpmsg_char_close(rcdev) < 0) {

perror("Can't delete the endpoint device");

ret = -1;

}

return ret;

}

void usage()

{

printf("Usage: rpmsg_char_simple [-r <rproc_id>] [-n <num_msgs>] "

"[-d <rpmsg_dev_name>] [-p <remote_endpt>] [-l <local_endpt>]\n");

printf("\t\tDefaults: rproc_id: 0 num_msgs: %d rpmsg_dev_name: NULL remote_endpt: %d\n",

NUM_ITERATIONS, REMOTE_ENDPT);

}

int main(int argc, char *argv[])

{

int ret, status, c;

int rproc_id = 0;

int num_msgs = NUM_ITERATIONS;

unsigned int remote_endpt = REMOTE_ENDPT;

unsigned int local_endpt = RPMSG_ADDR_ANY;

char *dev_name = NULL;

while (1) {

c = getopt(argc, argv, "r:n:p:d:l:");

if (c == -1)

break;

switch (c) {

case 'r':

rproc_id = atoi(optarg);

break;

case 'n':

num_msgs = atoi(optarg);

break;

case 'p':

remote_endpt = atoi(optarg);

break;

case 'd':

dev_name = optarg;

break;

case 'l':

local_endpt = atoi(optarg);

break;

default:

usage();

exit(0);

}

}

if (rproc_id < 0 || rproc_id >= RPROC_ID_MAX) {

printf("Invalid rproc id %d, should be less than %d\n",

rproc_id, RPROC_ID_MAX);

usage();

return 1;

}

/* Use auto-detection for SoC */

ret = rpmsg_char_init(NULL);

if (ret) {

printf("rpmsg_char_init failed, ret = %d\n", ret);

return ret;

}

status = rpmsg_char_ping(rproc_id, dev_name, local_endpt, remote_endpt, num_msgs);

rpmsg_char_exit();

if (status < 0) {

printf("TEST STATUS: FAILED\n");

} else {

printf("TEST STATUS: PASSED\n");

}

return 0;

}
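
The round-trip latency in the test is the difference between two CLOCK_MONOTONIC timestamps, sorted into a histogram with one bin per microsecond. Below is a minimal sketch of how that bookkeeping could be isolated so it stays correct when a measurement spans a second boundary and when counts get large. The helper names (timespec_diff_us, histogram_add, histogram_mean_us, histogram_max_us) are hypothetical and are not part of ti_rpmsg_char.

```c
#include <stdint.h>
#include <time.h>

#define LATENCY_RANGE 20000 /* histogram bins, one per microsecond */

/* Elapsed time from start to end in microseconds, using tv_sec and tv_nsec. */
static int64_t timespec_diff_us(const struct timespec *start,
                                const struct timespec *end)
{
    int64_t ns = (int64_t)(end->tv_sec - start->tv_sec) * 1000000000LL
               + (end->tv_nsec - start->tv_nsec);
    return ns / 1000;
}

/* Record one sample; returns 0 on success, -1 if it does not fit a bin. */
static int histogram_add(int *bins, int64_t latency_us)
{
    if (latency_us < 0 || latency_us >= LATENCY_RANGE)
        return -1;
    bins[latency_us]++;
    return 0;
}

/* Weighted mean of the histogram: sum(i * bins[i]) / sum(bins[i]). */
static double histogram_mean_us(const int *bins)
{
    double weighted_sum = 0.0;
    long long samples = 0;

    for (int i = 0; i < LATENCY_RANGE; i++) {
        weighted_sum += (double)bins[i] * i; /* double avoids int overflow */
        samples += bins[i];
    }
    return samples ? weighted_sum / (double)samples : 0.0;
}

/* Largest latency with at least one sample, or -1 if the histogram is empty. */
static int histogram_max_us(const int *bins)
{
    for (int i = LATENCY_RANGE - 1; i >= 0; i--)
        if (bins[i] != 0)
            return i;
    return -1;
}
```

In the ping loop these would replace the inline arithmetic: call timespec_diff_us(&ts_current, &ts_end) after each read, pass the result to histogram_add, and compute the mean and maximum once after the loop.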

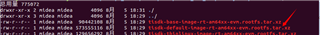

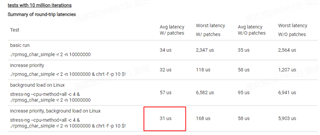

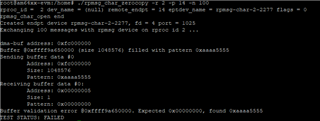

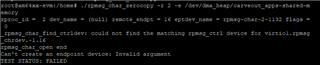

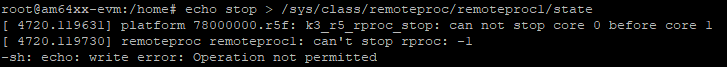

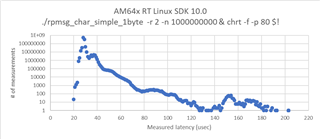

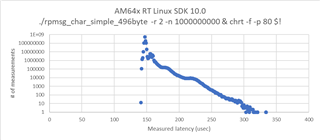

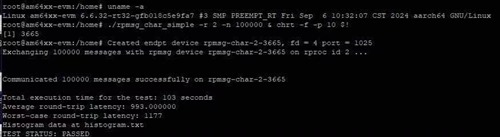

I ran the test with 100,000 messages, setting SCHED_FIFO priority 10 with the command `./rpmsg_char_simple -r 2 -n 100000 & chrt -f -p 10 $!`. The test results are shown in the figure.

The figure shows a maximum round-trip latency of 1177 us, which is too high for our current requirements. Is there room for optimization?
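
One detail about the command above: the program is started in the background first and chrt changes its policy afterwards, so the first iterations may still run under the default scheduling policy. A minimal sketch of what could be tried instead is to set SCHED_FIFO and lock memory from inside the program before the message loop. The helper names set_fifo_priority and lock_memory are hypothetical, and whether this lowers the 1177 us worst case is untested.

```c
#include <sched.h>
#include <stdio.h>
#include <sys/mman.h>

/*
 * Switch the calling process to SCHED_FIFO at the given priority.
 * Returns 0 on success, -1 on failure (for example EPERM when the
 * process lacks the privilege to use a real-time policy).
 */
static int set_fifo_priority(int priority)
{
    struct sched_param param = { .sched_priority = priority };

    if (sched_setscheduler(0, SCHED_FIFO, &param) != 0) {
        perror("sched_setscheduler");
        return -1;
    }
    return 0;
}

/*
 * Lock current and future pages into RAM so that page faults do not
 * add to the measured latency. Returns 0 on success, -1 on failure.
 */
static int lock_memory(void)
{
    if (mlockall(MCL_CURRENT | MCL_FUTURE) != 0) {
        perror("mlockall");
        return -1;
    }
    return 0;
}
```

Both helpers would be called once at the start of main(), before rpmsg_char_init(). Launching the program directly under the policy, as in `chrt -f 10 ./rpmsg_char_simple -r 2 -n 100000`, should give the same scheduling setup without code changes.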