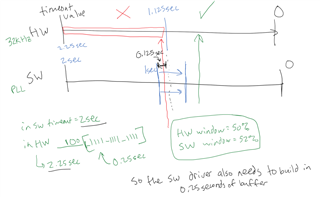

According to the AM62x technical reference manual, setting the RTIx_WWDSIZECTRL register to 0x00000005 (the default) makes the watchdog function as a standard time-out digital watchdog, i.e. with a 100% open window. However, the Linux watchdog driver for the K3 RTI module supports a 50% open/close window as its largest setting. Is this a limitation of the driver implementation or an actual hardware limitation?
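For reference, here is a minimal sketch of how I understand the window-size encodings to be laid out. Only 0x00000005 (100%) comes from the TRM text quoted above; the assumption that each halving of the window moves the 0x5 pattern up by one nibble (0x50 for 50%, 0x500 for 25%, and so on down to 3.125%) is my reading and should be checked against the TRM and the driver source. The function names are hypothetical and not taken from the driver.

```c
#include <stdint.h>

/*
 * Assumed RTIx_WWDSIZECTRL encoding: the pattern 0x5 shifted left by one
 * nibble per halving of the open window.
 *   halvings = 0 -> 100% window (0x00000005, the documented default)
 *   halvings = 1 -> 50% window
 *   ...
 *   halvings = 5 -> 3.125% window
 * Returns 0 for values outside the assumed range.
 */
uint32_t wwd_size_encoding(unsigned int halvings)
{
    if (halvings > 5u)
        return 0u;
    return (uint32_t)0x5u << (4u * halvings);
}

/* Open-window size as a fraction of the full timeout period. */
double wwd_open_fraction(unsigned int halvings)
{
    return 1.0 / (double)(1u << halvings);
}
```

Under this assumed layout, `wwd_size_encoding(0)` yields the 100% value from the TRM and `wwd_size_encoding(1)` yields the 50% value that the driver currently treats as its maximum.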

I have tried modifying the driver to support a 100% window, but the watchdog then fails to reset the system when it expires. This happens even though the RTIx_WDSTATUS register reads 0x32, which indicates an end-time violation.
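To show how I am reading that status value, here is a small decoding sketch. The bit positions are my assumption about the RTIx_WDSTATUS layout (DWD_ST at bit 1, KEY_ST at bit 2, START_TIME_VIOL at bit 3, END_TIME_VIOL at bit 4, DWWD_ST at bit 5) and should be verified against the TRM; the macro and function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed RTIx_WDSTATUS bit positions; verify against the AM62x TRM. */
#define WDSTATUS_DWD_ST          (1u << 1)  /* watchdog expired           */
#define WDSTATUS_KEY_ST          (1u << 2)  /* wrong key sequence written */
#define WDSTATUS_START_TIME_VIOL (1u << 3)  /* serviced before the window */
#define WDSTATUS_END_TIME_VIOL   (1u << 4)  /* not serviced in the window */
#define WDSTATUS_DWWD_ST         (1u << 5)  /* windowed watchdog violation */

/* Returns true if the given flag bit is set in a raw status value. */
bool wdstatus_flag_set(uint32_t status, uint32_t flag)
{
    return (status & flag) != 0u;
}
```

With these assumed positions, 0x32 has bits 1, 4 and 5 set, i.e. DWD_ST, END_TIME_VIOL and DWWD_ST, with no start-time or key violation, which is why I interpret it as an end-time violation.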