SK-AM62A-LP


Hello,

I wanted to ask whether the model artifact generation script available on GitHub (`onnxrt_ep.py`) only generates artifacts for one output (the final node).

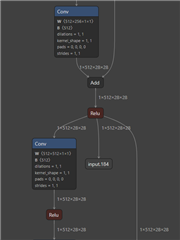

I ask because my model has two outputs, and one of them comes from an intermediate layer.

When I run this line of code:

the `output_names` I get are `['output', 'input.332']`,

but when I run this line of code:

the outputs I get are `[None, array(valid)]`. The first output is `None`, and only the second one contains a valid array.
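To check which named output comes back empty, the values returned by inference can be paired with the output names in order. This is a minimal sketch that assumes the names came from onnxruntime's `InferenceSession.get_outputs()` and the values from `InferenceSession.run(None, feeds)`, which returns all outputs in the same order as `get_outputs()`. `find_missing_outputs` is a hypothetical helper, not part of `onnxrt_ep.py` or the TI tools.

```python
from typing import Any, List, Optional, Sequence


def find_missing_outputs(names: Sequence[str], outputs: Sequence[Optional[Any]]) -> List[str]:
    """Return the names of graph outputs whose returned value is None.

    Assumes `names` is the output-name list (e.g. [o.name for o in sess.get_outputs()])
    and `outputs` is the list returned by sess.run(None, feeds), in the same order.
    """
    if len(names) != len(outputs):
        raise ValueError(f"{len(names)} output names but {len(outputs)} returned values")
    # Keep only the names whose corresponding value came back as None.
    return [name for name, value in zip(names, outputs) if value is None]


if __name__ == "__main__":
    # Values mirroring the post: the first output is None, the second is valid.
    names = ["output", "input.332"]
    outputs = [None, [0.1, 0.2]]  # placeholder list standing in for the valid array
    print(find_missing_outputs(names, outputs))  # prints ['output']
```

With onnxruntime, a single output can also be requested by name, e.g. `sess.run(["output"], feeds)`, which helps isolate whether one specific output is the one that fails.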

How can I generate artifacts for both of these outputs?

I have also attached an image showing the intermediate output.

Thank you