Hello there TI Experts,

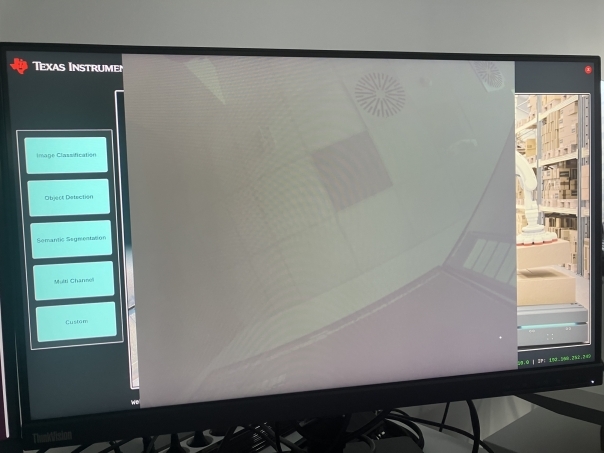

I am trying to use edgeai_tiovx_apps with my own imx568 Linux camera driver. When I launch the application, I get an image like this:

As you can see, it shows something of the real scene, but it is quite distorted. It looks like the byte order, or something similar, is messed up in the buffers.

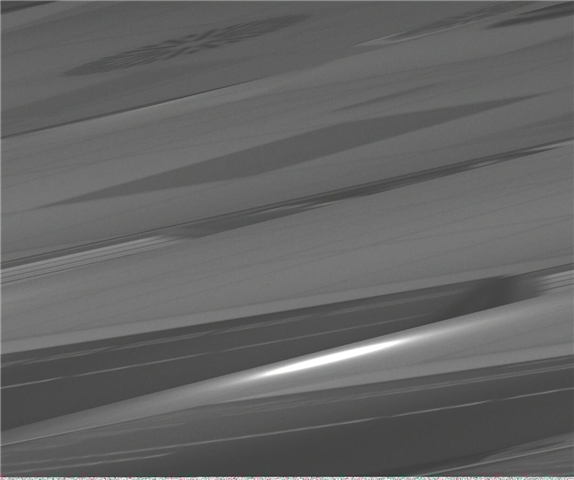

If I use v4l2-ctl instead, the image is captured perfectly, so it seems that the TIOVX pipeline is messing something up:

$ v4l2-ctl -d /dev/video-imx568-cam0 --set-fmt-video=width=1236,height=1032,pixelformat=RG12 --stream-mmap --stream-skip=10 --stream-count=1 --stream-to=testStream1236.raw

My format in this case is:

IMX568_CAM_FMT="${IMX568_CAM_FMT:-[fmt:SRGGB12_1X12/1236x1032]}"
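To sanity-check the raw file that v4l2-ctl writes, its size can be compared with the unpadded frame size. This is a minimal sketch, assuming RG12 is stored unpacked as 2 bytes per pixel and that the driver adds no padding at the end of each line; the helper names are hypothetical and only for illustration.

```python
import os


def expected_raw_size(width, height, bytes_per_pixel=2):
    """Size in bytes of one frame, assuming no padding at the end of each line."""
    return width * height * bytes_per_pixel


def check_raw_file(path, width, height, bytes_per_pixel=2):
    """Return (actual size, expected size, whether they match) for a capture file."""
    actual = os.path.getsize(path)
    expected = expected_raw_size(width, height, bytes_per_pixel)
    return actual, expected, actual == expected


if __name__ == "__main__":
    # File name and resolution taken from the v4l2-ctl command above.
    path = "testStream1236.raw"
    if os.path.exists(path):
        actual, expected, ok = check_raw_file(path, 1236, 1032)
        print(f"{path}: {actual} bytes, expected {expected}, match={ok}")
    else:
        print(f"{path} not found; unpadded size would be "
              f"{expected_raw_size(1236, 1032)} bytes")
```

A size mismatch would suggest that the driver reports a line length (bytesperline) larger than width times 2, which any consumer of the buffer would then have to honor.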

To make things more interesting, my camera can also operate at 2472x2064 resolution:

IMX568_CAM_FMT="${IMX568_CAM_FMT:-[fmt:SRGGB12_1X12/2472x2064]}"

At this resolution the TIOVX application works perfectly, just like v4l2-ctl. So the problem only occurs at 1236x1032, and only with the edgeai-apps-stack. The stream is continuous and AEWB seems to be working (the stream adapts to the brightness of the room), so I think the problem is not with the DCC files.
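One measurable difference between the two modes is the line pitch in bytes. The sketch below is only arithmetic, assuming 2 bytes per pixel (unpacked RG12) and no line padding. Whether any stage of the TIOVX pipeline actually requires a particular pitch alignment is an unconfirmed assumption here, not a known cause; the function names are hypothetical.

```python
def line_pitch_bytes(width, bytes_per_pixel=2):
    """Bytes per image line, assuming no padding at the end of the line."""
    return width * bytes_per_pixel


def largest_pow2_alignment(n):
    """Largest power of two that divides n (isolates the lowest set bit)."""
    return n & -n


if __name__ == "__main__":
    # The two sensor modes mentioned in this post.
    for width in (1236, 2472):
        pitch = line_pitch_bytes(width)
        print(f"width={width}: pitch={pitch} bytes, "
              f"aligned to {largest_pow2_alignment(pitch)} bytes")
```

Under these assumptions the 1236-wide mode has a 2472-byte pitch (8-byte aligned), while the 2472-wide mode has a 4944-byte pitch (16-byte aligned), so a stride or alignment mismatch between the driver and the capture buffers may be worth ruling out.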

Thank you for your help!

Zsombor Szalay