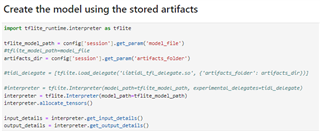

Hello,

When I run inference on the cloud or on the EVM, I consistently see very high DDR usage per image. Two years ago, my colleagues reported only 0 MB.

I have tried several models on both the cloud and the EVM, and the values are always very high.

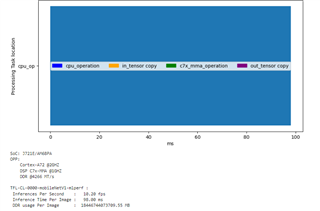

On cloud:

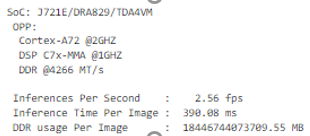

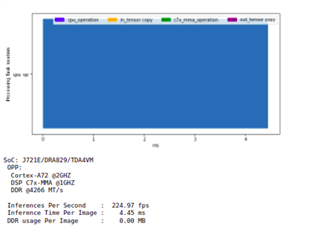

On EVM:

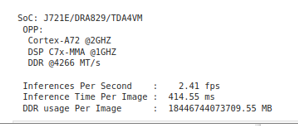

Old results with the same model:

Are these results expected, or is the calculation method in edgeai_tidl_tools out of date?

Thanks and regards,

Azer