Other Parts Discussed in Thread: TDA4VH

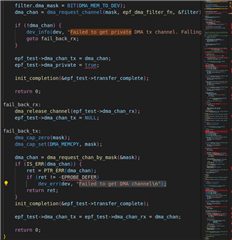

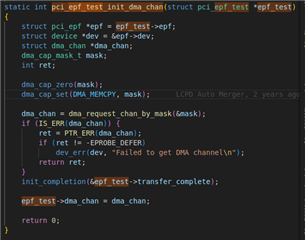

Hello, SDK10 includes a description of LCPD-37899, which resolves a PCIe speed issue. However, I couldn't find the corresponding change in the TI kernel. Could you help confirm which commit implements this fix?

We look forward to your reply!