Other Parts Discussed in Thread: AM68A, AM69A, AM67A

Q:

“I have found TI’s model zoo with neural networks that have been validated and benchmarked on AM6xA processors [AM62A, AM67A, AM68A, AM68PA, AM69A or TDA4x SoCs] using the C7x AI accelerator.

“I need to train these models for my own application with a dataset that I have collected. How do I do this?”

A:

The above is a fairly common question from developers seeking to leverage pre-optimized networks from TI for their own custom application. We suggest starting from these models because they have already been analyzed and optimized for runtime latency and accuracy. The models available from TI are pre-trained on common datasets (e.g. COCO or ImageNet-1k), but may need retraining before they can be applied to a custom use case.

For some networks, we have modified the original network architecture to be friendlier to the hardware accelerator used on AM6xA and TDA4x SoCs, the C7xMMA. These modifications usually improve accuracy or runtime efficiency on our fixed-point accelerator. For example, we replace SiLU activation functions with ReLU, which is far faster to compute in fixed-point. We denote these modified architectures by including “lite” or “TI lite” in the model name within our benchmarks or model zoo repo.
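To make the SiLU versus ReLU point concrete, here is a minimal pure-Python sketch. It assumes a simple symmetric int8 quantization with a made-up scale (`SCALE`), and all function names are hypothetical. It is only an illustration of the arithmetic involved, not how the C7xMMA or TIDL actually implement activations.

```python
import math

# Hypothetical quantization step (an assumption): real_value = code * SCALE
SCALE = 0.05


def quantize(x: float, scale: float = SCALE) -> int:
    """Round a real value to a signed 8-bit integer code, with saturation."""
    return max(-128, min(127, round(x / scale)))


def dequantize(q: int, scale: float = SCALE) -> float:
    """Map an integer code back to the real value it represents."""
    return q * scale


def relu_int8(q: int) -> int:
    """ReLU applied directly to the integer code: a single comparison."""
    return max(0, q)


def silu_float(x: float) -> float:
    """SiLU(x) = x * sigmoid(x); needs an exponential and a division."""
    return x / (1.0 + math.exp(-x))


def silu_int8(q: int, scale: float = SCALE) -> int:
    """SiLU on an integer code via a float round trip and requantization."""
    return quantize(silu_float(dequantize(q, scale)), scale)


if __name__ == "__main__":
    for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
        q = quantize(x)
        print(f"x={x:+.1f} code={q:+d} relu={relu_int8(q):+d} silu={silu_int8(q):+d}")
```

In this sketch, ReLU maps integer codes to integer codes with no rounding and no transcendental math, while the SiLU path needs an exponential, a division, and a requantization step. A real fixed-point implementation would more likely use a lookup table or a polynomial approximation for SiLU, which still costs more than a comparison.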

With recent operator support, some original models may now be supported as-is where they were not before. Please see our list of supported operators/layers (and any related restrictions) for the latest information, and note that this documentation is version-specific with respect to our SDK.

In subsequent responses, I’ll describe and categorize the models within our model zoo as they pertain to retraining and reuse.

Links and Repositories of note:

- edgeai-tensorlab

- hosts several programming tools for model training, optimization, compilation and benchmarking

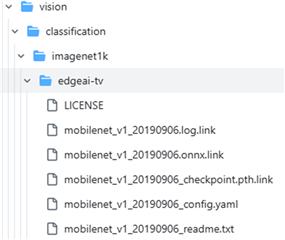

- edgeai-modelzoo

- Part of tensorlab, hosting links and files for the modelzoo, including some pregenerated model artifacts. This was previously a separate repo (prior to July 2024), so if you find an archived version of edgeai-modelzoo on github, it is likely out of date.

- Edge AI Studio

- Online, GUI-based tool for training models, viewing pregenerated benchmarks, and other AI model development tasks.

- See the Model Selection tool for pre-generated benchmarks

- edgeai-tidl-tools

- standalone tools for compiling a trained model that is exported into ONNX or TFLITE format

Please note I have embedded plenty of links into the text for your benefit. Most of these links will reach into the edgeai-tensorlab or edgeai-tidl-tools repositories.

TL;DR (too long, didn’t read): TI has a set of AI models that have been validated and benchmarked on our SoCs. These can be retrained, but not all architectures have a full set of examples and programming tools from TI for doing the retraining yourself. In this FAQ, I’ll talk about the available resources and the knowledge necessary to own this process for your design. Some architectures are modified and labelled “TI Lite” to denote that the architecture is not identical to the original version. Others are pulled by TI as-is. Retraining a TI Lite model requires applying the same architecture optimizations that TI has performed as part of training; unmodified architectures have limited support from TI for training, but compilation with TIDL tools has end-to-end support.