
I have developed a sequence model that does not use images as input.

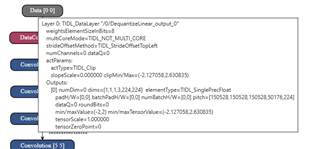

Therefore, the network input is in float format.

However, TI's default deep learning flow is designed for image-based models, which take 8-bit (0 to 255) pixel values as input.

To input float values into the model in this environment, I used the following method:

First, I checked the range of the input values and scaled them to fit within 0 to 255.
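Checking the range can be as simple as scanning the input buffer for its minimum and maximum. The sketch below is illustrative only: `FloatRange` and `find_input_range` are hypothetical names, not part of any TI API. In practice the range is usually taken from representative data or known physical limits rather than a single sequence, so that later inputs do not fall outside it.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: observed minimum and maximum of the float features,
 * i.e. the range that later has to be squeezed into 0..255. */
typedef struct {
    float min;
    float max;
} FloatRange;

FloatRange find_input_range(const float *x, size_t n)
{
    assert(x != NULL && n > 0); /* need at least one sample */
    FloatRange r = { x[0], x[0] };
    for (size_t i = 1; i < n; ++i) {
        if (x[i] < r.min) r.min = x[i];
        if (x[i] > r.max) r.max = x[i];
    }
    return r;
}
```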

For example, if the range of the input float values is 0 to 1, I set the std in the conversion settings to 1/255.
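The example can be written out and generalized to any range $[x_{\min}, x_{\max}]$. This is a sketch that assumes the conversion tool computes the network input as $(q - m)\,s$ from the 8-bit code $q$, where $s$ is the configured std (acting as a scale) and $m$ is a mean/offset; with $m = 0$ and $s = 1/255$ it reduces to the 0 to 1 case above. If the tool instead divides by std, the value of $s$ would have to be inverted.

$$
q = \operatorname{round}(255\,x), \qquad \hat{x} = \tfrac{1}{255}\,q, \qquad x \in [0, 1]
$$

$$
s = \frac{x_{\max} - x_{\min}}{255}, \qquad q = \operatorname{round}\!\left(\frac{x - x_{\min}}{s}\right), \qquad m = -\frac{x_{\min}}{s}, \qquad \hat{x} = (q - m)\,s = x_{\min} + q\,s
$$

For any $x$ inside the range, the reconstruction error is at most half a step, $|x - \hat{x}| \le s/2$.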

Then, in the C code, I multiplied the input float values by 255 (so each value becomes an 8-bit integer from 0 to 255) before feeding them into the network.
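The multiply-by-255 step could look roughly like this. It is a sketch, not the original code: `quantize_unit_range_u8` is a hypothetical name, and it assumes the network's input buffer holds `uint8_t` values. Rounding to the nearest code and clamping keeps an out-of-range sample from wrapping around when it is cast to 8 bits.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Converts floats expected in [0, 1] to 8-bit codes 0..255.
 * Values outside [0, 1] saturate at 0 or 255 instead of wrapping. */
void quantize_unit_range_u8(const float *in, uint8_t *out, size_t n)
{
    assert(in != NULL && out != NULL);
    for (size_t i = 0; i < n; ++i) {
        float v = in[i] * 255.0f;
        if (!(v >= 0.0f)) v = 0.0f;   /* negative values and NaN -> 0 */
        if (v > 255.0f) v = 255.0f;   /* values above 1.0 -> 255 */
        out[i] = (uint8_t)(v + 0.5f); /* round to nearest (v is >= 0) */
    }
}
```

For example, an input of `{0.0f, 0.5f, 1.0f, 1.7f}` becomes `{0, 128, 255, 255}`: the last value is clipped, which is exactly the range problem described below.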

This works correctly because the std of 1/255 set in the configuration file (cfg) scales the 0 to 255 input back to the original 0 to 1 range inside the network.
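A quick way to confirm the round trip is to undo the scaling on the C side and compare against the original value. The sketch assumes the cfg scaling amounts to multiplying the 8-bit code by 1/255; `dequantize_unit_u8` and `round_trip_unit` are hypothetical helpers. For inputs in [0, 1] the error should never exceed half a step, 0.5/255 (about 0.002).

```c
#include <assert.h>
#include <stdint.h>

/* Model-side view of the cfg setting: the 8-bit code q is multiplied by
 * 1/255, mapping 0..255 back onto 0..1. */
float dequantize_unit_u8(uint8_t q)
{
    return (float)q / 255.0f;
}

/* Round trip for one sample assumed to lie in [0, 1]: scale by 255 and
 * round as on the C side, then undo it as the cfg scaling does. */
float round_trip_unit(float x)
{
    assert(x >= 0.0f && x <= 1.0f);
    uint8_t q = (uint8_t)(x * 255.0f + 0.5f);
    return dequantize_unit_u8(q);
}
```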

However, the 8-bit representation does not cover the range my float input needs: with a std of 1/255, the 256 available codes can only express values from 0 to 1 in steps of 1/255, so anything outside that range is lost.
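One way to see the trade-off is a general affine 8-bit code for an arbitrary range. This is a hypothetical sketch (`AffineU8` and its functions are illustrative names) and assumes the conversion settings could express both an offset and a scale; it is not a confirmed TI option. It shows that a wider range is representable only by making each step coarser: for [-10, 10] one code is 20/255 (about 0.078) instead of 1/255 (about 0.0039).

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical affine 8-bit code for the range [lo, hi]. */
typedef struct {
    float lo;   /* value represented by code 0 */
    float step; /* value difference between neighbouring codes */
} AffineU8;

AffineU8 affine_u8_for_range(float lo, float hi)
{
    assert(hi > lo);
    AffineU8 p = { lo, (hi - lo) / 255.0f };
    return p;
}

/* Float -> nearest 8-bit code, saturating outside [lo, hi]. */
uint8_t affine_u8_encode(AffineU8 p, float x)
{
    float v = (x - p.lo) / p.step;
    if (!(v >= 0.0f)) v = 0.0f; /* below range, or NaN -> code 0 */
    if (v > 255.0f) v = 255.0f; /* above range -> code 255 */
    return (uint8_t)(v + 0.5f);
}

/* 8-bit code -> float; error is at most about half a step in range. */
float affine_u8_decode(AffineU8 p, uint8_t q)
{
    return p.lo + (float)q * p.step;
}
```

Whatever offset and scale are chosen, only 256 distinct values exist, so this helps when the problem is where the range sits, not when the input needs finer resolution than (range / 255).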

Please let me know if there is a way to feed float values with a wider range into the network.