Other Parts Discussed in Thread: TDA4VH, DRA821, TDA4VM

Hardware: TDA4VH custom board

SDK: ti-processor-sdk-linux-adas-j784s4-evm-09_01_00_06

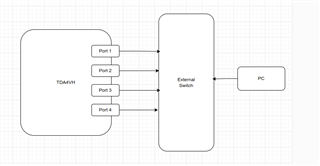

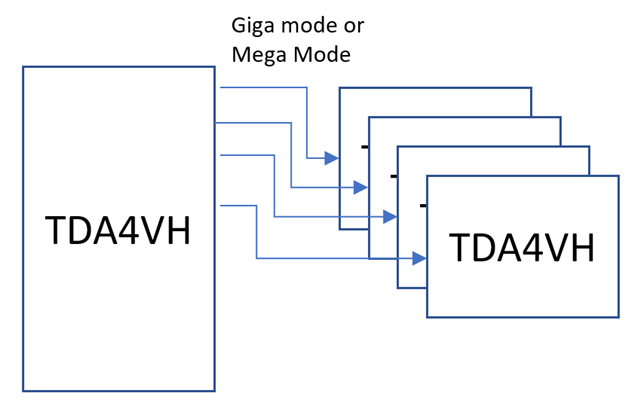

Eight ports are configured in SGMII mode, each on its own independent SERDES lane.

#1. Each port is tested separately with iperf3, using one server (`ifconfig eth1 160.0.0.1 && iperf3 -s`) and one client (`iperf3 -c 160.0.0.1 -t 120`).

Single-port test results:

- Throughput is 93 Mb/s with the link at 100 Mb/s full duplex.

- Throughput is 943 Mb/s with the link at 1000 Mb/s full duplex.

Single-port throughput meets expectations.

#2. When multiple ports are tested at the same time, throughput drops considerably. For example, four boards are used as servers (`iperf3 -s`) and one client runs four streams concurrently (`iperf3 -c 160.0.0.1 -t 120` / `iperf3 -c 161.0.0.1 -t 120` / `iperf3 -c 162.0.0.1 -t 120` / `iperf3 -c 163.0.0.1 -t 120`).
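For anyone reproducing this, the concurrent client run could be scripted roughly as follows. This is only a sketch: `run_clients` and the `IPERF3` override variable are hypothetical names introduced here, and it assumes the four server addresses listed above are reachable from the client.

```shell
#!/bin/sh
# Hypothetical helper: start one iperf3 client per server address in the
# background so that all streams run at the same time, then wait for them.
# IPERF3 is an assumed override for the binary name (defaults to iperf3).
run_clients() {
    duration=$1
    shift
    for server in "$@"; do
        "${IPERF3:-iperf3}" -c "$server" -t "$duration" &
    done
    wait   # block until every background client has finished
}

# Example invocation matching the test described above (left commented out):
# run_clients 120 160.0.0.1 161.0.0.1 162.0.0.1 163.0.0.1
```

Starting all clients from one script keeps their start times close together, so the per-port numbers cover the same 120-second window.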

Test results with multiple ports running at the same time:

- Each port reaches 46 Mb/s with the links at 100 Mb/s full duplex.

- Each port reaches about 303 Mb/s with the links at 1000 Mb/s full duplex. Total throughput of the 4 ports = eth2 + eth3 + eth4 + eth5 = 304 + 304 + 307 + 304 = 1219 Mb/s.
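To put the aggregate figure in context, the arithmetic can be checked with a small shell sketch. The function names `total_mbps` and `utilization_pct` are hypothetical helpers introduced here, and the percentage uses integer arithmetic, so it is truncated.

```shell
#!/bin/sh
# Sum per-port throughput values (integers, in Mb/s) passed as arguments.
total_mbps() {
    total=0
    for rate in "$@"; do
        total=$((total + rate))
    done
    echo "$total"
}

# Share of the nominal aggregate line rate that was achieved, as a
# truncated integer percentage.
# $1 = measured total (Mb/s), $2 = number of ports, $3 = link speed (Mb/s)
utilization_pct() {
    echo $(( 100 * $1 / ($2 * $3) ))
}
```

With the measured values, `total_mbps 304 304 307 304` gives 1219, and `utilization_pct 1219 4 1000` gives 30, i.e. the four ports together reach roughly 30% of the nominal 4 Gb/s.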

Although the ports use separate SERDES lanes, the test results show that they affect each other.

Can each port achieve 1 Gbps when multiple ports are used at the same time? How can the throughput be improved?