Other Parts Discussed in Thread: AM67A, AM68A, TDA4VM

Hello,

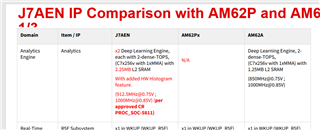

When comparing the AM62A and TDA4EN, I noticed that the TDA4EN supports histograms while the AM62A does not. This leads me to believe that the two devices use different versions of the C7x subsystem.

Questions:

1. Which C7x versions do these devices use? (This is not mentioned in the TRM.)

2. What other C7x+MMA differences exist between these versions?