
Hi Expert,

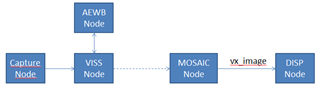

We have an application modified from the single cam example. Its architecture is shown below:

Figure 1

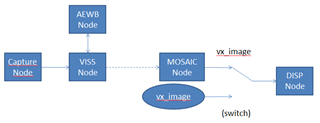

Now we want to switch the displayed image when the application detects a special condition, as shown below:

Figure 2

For this purpose, we replaced the `vx_image` between the MOSAIC node and the display node in Figure 1 with a `vx_object_array` containing two `vx_image`s. In `app_create_graph()`, we connect the MOSAIC node output to the first channel of this object array, and we load the status-condition image into the second channel. In `app_run_graph()`, the application checks the system status and sends `TIVX_DISPLAY_SELECT_CHANNEL` to switch the displayed image. For testing, we set an abnormal status and expected the status image to be shown. Instead, the output flickers: the display alternates between the live camera image and a black image, and the following OpenGL errors are printed:

```
(1270) PVR:(Error): PVRSRVDmaBufImportDevMem error 8 (PVRSRV_ERROR_BAD_MAPPING) [ :101 ]
EGL: after eglCreateImageKHR() eglError (0x3003)
EGL: ERROR: eglCreateImageKHR failed !!!
EGL: ERROR: appEglWindowCreateIMG failed !!!
GL: after glDrawArrays() glError (0x506)
status=0 updateStatus=3
tivxNodeSendCommand(v) : 0
status=0 updateStatus=3
tivxNodeSendCommand(v) : 0
status=0 updateStatus=3
[ 44.045867] PVR_K:(Error): 1266- 1270: PhysmemImportDmaBuf: Failed to get dma-buf from fd (err=-22) [896]
(1270) PVR:(Error): PVRSRVDmaBufImportDevMem error 8 (PVRSRV_ERROR_BAD_MAPPING) [ :101 ]
EGL: after eglCreateImageKHR() eglError (0x3003)
EGL: ERROR: eglCreateImageKHR failed !!!
EGL: ERROR: appEglWindowCreateIMG failed !!!
GL: after glDrawArrays() glError (0x506)
tivxNodeSendCommand(v) : 0
status=0 updateStatus=3
tivxNodeSendCommand(v) : 0
status=0 updateStatus=3
[ 44.112551] PVR_K:(Error): 1266- 1270: PhysmemImportDmaBuf: Failed to get dma-buf from fd (err=-22) [896]
```

Here is our code from `app_single_cam_main.c`:

```c
/*
 *
 * Copyright (c) 2018 Texas Instruments Incorporated
 *
 * All rights reserved not granted herein.
 *
 * Limited License.
 *
 * Texas Instruments Incorporated grants a world-wide, royalty-free, non-exclusive
 * license under copyrights and patents it now or hereafter owns or controls to make,
 * have made, use, import, offer to sell and sell ("Utilize") this software subject to the
 * terms herein. With respect to the foregoing patent license, such license is granted
 * solely to the extent that any such patent is necessary to Utilize the software alone.
 * The patent license shall not apply to any combinations which include this software,
 * other than combinations with devices manufactured by or for TI ("TI Devices").
 * No hardware patent is licensed hereunder.
 *
 * Redistributions must preserve existing copyright notices and reproduce this license
 * (including the above copyright notice and the disclaimer and (if applicable) source
 * code license limitations below) in the documentation and/or other materials provided
 * with the distribution
 *
 * Redistribution and use in binary form, without modification, are permitted provided
 * that the following conditions are met:
 *
 * * No reverse engineering, decompilation, or disassembly of this software is
 * permitted with respect to any software provided in binary form.
 *
 * * any redistribution and use are licensed by TI for use only with TI Devices.
 *
 * * Nothing shall obligate TI to provide you with source code for the software
 * licensed and provided to you in object code.
 *
 * If software source code is provided to you, modification and redistribution of the
 * source code are permitted provided that the following conditions are met:
 *
 * * any redistribution and use of the source code, including any resulting derivative
 * works, are licensed by TI for use only with TI Devices.
 *
 * * any redistribution and use of any object code compiled from the source code
 * and any resulting derivative works, are licensed by TI for use only with TI Devices.
 *
 * Neither the name of Texas Instruments Incorporated nor the names of its suppliers
 *
 * may be used to endorse or promote products derived from this software without
 * specific prior written permission.
 *
 * DISCLAIMER.
 *
 * THIS SOFTWARE IS PROVIDED BY TI AND TI'S LICENSORS "AS IS" AND ANY EXPRESS
 * OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES
 * OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED.
 * IN NO EVENT SHALL TI AND TI'S LICENSORS BE LIABLE FOR ANY DIRECT, INDIRECT,
 * INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,
 * BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,
 * DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY
 * OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE
 * OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED
 * OF THE POSSIBILITY OF SUCH DAMAGE.
 *
 */

#include "app_single_cam_main.h"
#include <utils/iss/include/app_iss.h>
#include "app_test.h"
#include <TI/tivx_sample.h>
#include <tivx_sample_kernels_priv.h>
//Jeff: for DISPLAY RESOLUTION
#include <app_display.h>

#ifdef A72
#if defined(LINUX)
/*ITT server is supported only in target mode and only on Linux*/
#include <itt_server.h>
#endif
#endif

#define chmask 0x01 // BIT0

static char availableSensorNames[ISS_SENSORS_MAX_SUPPORTED_SENSOR][ISS_SENSORS_MAX_NAME];
static vx_uint8 num_sensors_found;
static IssSensor_CreateParams sensorParams;
//extern unsigned char Sensordirection[ISS_SENSORS_MAX_CHANNEL];
int updateStatus = U_DONOTHING;

#define NUM_CAPT_CHANNELS (4u)

// for detect camera plug or not
//int32_t appCheckCameraPlug (uint32_t channel_mask);
//uint8_t TTE_detectSensor(vx_context context , vx_node capture_node, uint32_t *ch_mask, uint8_t CheckChannelMask);

//check if ok for honda. only support 1, 2, 3, 5
//int is_belong_to_correct_camera_id(int n)
//{
//    return (n == 1 || n == 2 || n == 3 || n == 5);
//}

//int updateStatus=U_DONOTHING;
//==========================

/*
 * Utility API used to add a graph parameter from a node, node parameter index
 */
void add_graph_parameter_by_node_index(vx_graph graph, vx_node node, vx_uint32 node_parameter_index)
{
    vx_parameter parameter = vxGetParameterByIndex(node, node_parameter_index);
    vxAddParameterToGraph(graph, parameter);
    vxReleaseParameter(&parameter);
}
```
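Graph parameter indices in `app_create_graph()` are assigned in the order in which `add_graph_parameter_by_node_index()` is called, and each index has to line up with its entry in `graph_parameters_queue_params_list`, which is sized by `params_list_depth = 4`. The plain-C sketch below models only that bookkeeping for the conditional VISS and display parameters; `graph_param_layout_t` and `plan_graph_params()` are hypothetical names, not part of OpenVX or TIOVX.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PARAMS_LIST_DEPTH (4u) /* matches params_list_depth in app_create_graph() */

/* Graph parameter index of each node parameter; -1 means "not added". */
typedef struct {
    int32_t capture;
    int32_t mosaic;
    int32_t viss;
    int32_t display;
    uint32_t count; /* number of graph parameters added so far */
} graph_param_layout_t;

/* Hypothetical helper: every added graph parameter takes the next free index. */
static graph_param_layout_t plan_graph_params(bool yuv_cam_input, bool test_mode)
{
    graph_param_layout_t l = { -1, -1, -1, -1, 0u };
    l.capture = (int32_t)l.count++;    /* capture node, parameter 1 */
    l.mosaic = (int32_t)l.count++;     /* OpenGL mosaic node, parameter 1 */
    if (!yuv_cam_input) {
        l.viss = (int32_t)l.count++;   /* VISS node, parameter 6 (raw input only) */
    }
    if (test_mode) {
        l.display = (int32_t)l.count++; /* display node, parameter 1 */
    }
    assert(l.count <= PARAMS_LIST_DEPTH); /* must fit the queue-params array */
    return l;
}
```

With a raw sensor and `test_mode` set, all four slots are used, so any additional graph parameter would also require a larger `params_list_depth`.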

```c
vx_status app_init(AppObj *obj)
{
    vx_status status = VX_SUCCESS;
    char* sensor_list[ISS_SENSORS_MAX_SUPPORTED_SENSOR];
    vx_uint8 count = 0;
    char ch = 0xFF;
    vx_uint8 selectedSensor = 0xFF;
    vx_uint8 detectedSensors[ISS_SENSORS_MAX_CHANNEL];
#if MULTI_CHANNEL_DISPLAY
    vx_image viTemplate;
#endif

#ifdef A72
#if defined(LINUX)
    /*ITT server is supported only in target mode and only on Linux*/
    status = itt_server_init((void*)obj, (void*)save_debug_images, (void*)appSingleCamUpdateVpacDcc);
    if (status != VX_SUCCESS) {
        printf("Warning : Failed to initialize ITT server. Live tuning will not work \n");
    }
#endif
#endif

    /* Setup Sensors */
    for (count=0; count<ISS_SENSORS_MAX_CHANNEL; count++) {
        detectedSensors[count] = 0xFF;
    }
    for (count=0; count<ISS_SENSORS_MAX_SUPPORTED_SENSOR; count++) {
        sensor_list[count] = NULL;
    }

    obj->stop_task = 0;
    obj->stop_task_done = 0;
    obj->selectedCam = 0xFF;
    obj->sensor_features_enabled=0;

    if (status == VX_SUCCESS) {
        obj->context = vxCreateContext();
        status = vxGetStatus((vx_reference) obj->context);
    }

    /* Prepare firmware update panels */
    if (status == VX_SUCCESS) {
        obj->viMsg = vxCreateImage(obj->context, 3840, 2160, VX_DF_IMAGE_RGB);
        status = preload_status_msg(obj);
    }

    if (status == VX_SUCCESS) {
        tivxHwaLoadKernels(obj->context);
        tivxImagingLoadKernels(obj->context);
        APP_PRINTF("tivxImagingLoadKernels done\n");
        tivxSampleLoadKernels(obj->context);
        tivxRegisterSampleTargetA72Kernels();
    }

    /*memset(availableSensorNames, 0, ISS_SENSORS_MAX_SUPPORTED_SENSOR*ISS_SENSORS_MAX_NAME);*/
    for (count=0;count<ISS_SENSORS_MAX_SUPPORTED_SENSOR;count++) {
        availableSensorNames[count][0] = '\0';
        sensor_list[count] = availableSensorNames[count];
    }

    if (status == VX_SUCCESS) {
        status = appEnumerateImageSensor(sensor_list, &num_sensors_found);
    }

    if (obj->is_interactive) {
        selectedSensor = 0xFF;
        obj->selectedCam = 0xFF;
        while (obj->selectedCam == 0xFF) {
            printf("Select camera port index 0-%d : ", ISS_SENSORS_MAX_CHANNEL-1);
            ch = getchar();
            obj->selectedCam = ch - '0';
            if (obj->selectedCam >= ISS_SENSORS_MAX_CHANNEL) {
                printf("Invalid entry %c. Please choose between 0 and %d \n", ch, ISS_SENSORS_MAX_CHANNEL-1);
                obj->selectedCam = 0xFF;
            }
            while ((obj->selectedCam != 0xFF) && (selectedSensor > (num_sensors_found-1))) {
                printf("%d registered sensor drivers\n", num_sensors_found);
                for (count=0;count<num_sensors_found;count++) {
                    printf("%c : %s \n", count+'a', sensor_list[count]);
                }
                printf("Select a sensor above or press '0' to autodetect the sensor : ");
                ch = getchar();
                if (ch == '0') {
                    uint8_t num_sensors_detected = 0;
                    uint8_t channel_mask = (1<<obj->selectedCam);
                    status = appDetectImageSensor(detectedSensors, &num_sensors_detected, channel_mask);
                    if (VX_SUCCESS == status) {
                        selectedSensor = detectedSensors[obj->selectedCam];
                        if (selectedSensor > ISS_SENSORS_MAX_SUPPORTED_SENSOR) {
                            printf("No sensor detected at port %d. Please select another port \n", obj->selectedCam);
                            obj->selectedCam = 0xFF;
                            selectedSensor = 0xFF;
                        }
                    } else {
                        printf("sensor detection at port %d returned error . Please try again \n", obj->selectedCam);
                        obj->selectedCam = 0xFF;
                        selectedSensor = 0xFF;
                    }
                } else {
                    selectedSensor = ch - 'a';
                    if (selectedSensor > (num_sensors_found-1)) {
                        printf("Invalid selection %c. Try again \n", ch);
                    }
                }
            }
        }
        obj->ch_mask=obj->selectedCam;
        obj->sensor_name = sensor_list[selectedSensor];
        printf("Sensor selected : %s\n", obj->sensor_name);
        ch = 0xFF;
        fflush (stdin);
#if 0
        while ((ch != '0') && (ch != '1')) {
            fflush (stdin);
            printf ("LDC Selection Yes(1)/No(0) : ");
            ch = getchar();
        }
        obj->ldc_enable = ch - '0';
#endif
        //Jeff: force to not use ldc
        obj->ldc_enable = 0;
    } else {
        selectedSensor = obj->sensor_sel;
        if (selectedSensor > (num_sensors_found-1)) {
            printf("Invalid sensor selection %d \n", selectedSensor);
            return VX_FAILURE;
        }
        obj->selectedCam = 0;
        obj->sensor_name = sensor_list[selectedSensor];
        printf("Sensor selected : %s\n", obj->sensor_name);
    }

    obj->sensor_wdr_mode = 1;
    obj->table_width = LDC_TABLE_WIDTH;
    obj->table_height = LDC_TABLE_HEIGHT;
    obj->ds_factor = LDC_DS_FACTOR;

#if MULTI_CHANNEL_DISPLAY
    /* Create multi-channel output buffers for display */
    viTemplate = vxCreateImage(obj->context, DISPLAY_OUT_WIDTH, DISPLAY_OUT_HEIGHT, VX_DF_IMAGE_RGBX);
    obj->vaOutput_image = vxCreateObjectArray(
        obj->context,
        (vx_reference)viTemplate,
        NUM_DISP_CHANNEL);
    status = vxGetStatus((vx_reference)(obj->vaOutput_image));
    APP_PRINTF("\e[1;33m Create image array for display \e[0m: %d\n", status);
    vxReleaseImage(&viTemplate);
#endif

    /* Display initialization */
    memset(&obj->display_params, 0, sizeof(tivx_display_params_t));
    obj->display_params.opMode = TIVX_KERNEL_DISPLAY_ZERO_BUFFER_COPY_MODE;
    obj->display_params.pipeId = 2;
    obj->display_params.outHeight = DISPLAY_OUT_HEIGHT;
    obj->display_params.outWidth = DISPLAY_OUT_WIDTH;
    obj->display_params.posX = 0;
    obj->display_params.posY = 0;

    appPerfPointSetName(&obj->total_perf , "TOTAL");
    return status;
}
```
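In `app_init()`, the status panel `viMsg` is created as `VX_DF_IMAGE_RGB` (3840x2160), while `vaOutput_image` is built from a `VX_DF_IMAGE_RGBX` template of `DISPLAY_OUT_WIDTH` x `DISPLAY_OUT_HEIGHT`. Every item of an object array takes the exemplar's format and dimensions, so whatever is loaded into the second channel has to be RGBX at the display resolution. How `preload_status_msg()` fills that channel is not shown, so the following is only a sketch of the repacking step, assuming the status panel is available as tightly packed RGB24 with the same width and height as the display image. `rgb24_to_rgbx32()` and `frame_bytes()` are hypothetical helpers, not TIOVX functions; in the application the pointers and row strides would come from `vxMapImagePatch()`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: repack tightly packed RGB24 pixels into RGBX32.
 * Assumes src holds width*height*3 bytes and dst holds width*height*4 bytes,
 * with no row padding in either buffer. The X byte is set to 0xFF. */
static void rgb24_to_rgbx32(const uint8_t *src, uint8_t *dst,
                            size_t width, size_t height)
{
    assert(src != NULL && dst != NULL);
    size_t num_pixels = width * height;
    for (size_t i = 0; i < num_pixels; i++) {
        dst[4 * i + 0] = src[3 * i + 0]; /* R */
        dst[4 * i + 1] = src[3 * i + 1]; /* G */
        dst[4 * i + 2] = src[3 * i + 2]; /* B */
        dst[4 * i + 3] = 0xFF;           /* X (padding byte) */
    }
}

/* Bytes needed for one frame at the given bytes per pixel, ignoring stride padding. */
static size_t frame_bytes(size_t width, size_t height, size_t bytes_per_pixel)
{
    return width * height * bytes_per_pixel;
}
```

For a 3840x2160 panel, `frame_bytes(3840, 2160, 3)` is 24,883,200 bytes in RGB and `frame_bytes(3840, 2160, 4)` is 33,177,600 bytes in RGBX, so the two layouts cannot simply be copied byte for byte.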

```c
vx_status app_deinit(AppObj *obj) {
    vx_status status = VX_FAILURE;
    tivxUnRegisterSampleTargetA72Kernels();
    tivxSampleUnLoadKernels(obj->context);
    tivxHwaUnLoadKernels(obj->context);
    APP_PRINTF("tivxHwaUnLoadKernels done\n");
    tivxImagingUnLoadKernels(obj->context);
    APP_PRINTF("tivxImagingUnLoadKernels done\n");
    status = vxReleaseContext(&obj->context);
    if (VX_SUCCESS == status) {
        APP_PRINTF("vxReleaseContext done\n");
    } else {
        printf("Error: vxReleaseContext returned 0x%x \n", status);
    }
    return status;
}
```

```c
/*
 * Graph,
 *            viss_config
 *                |
 *                v
 * input_img -> VISS -----> LDC -----> output_img
 *
 */
vx_status app_create_graph(AppObj *obj) {
    vx_status status = VX_SUCCESS;
    int32_t sensor_init_status = -1;
    obj->configuration = NULL;
    obj->raw = NULL;
    obj->y12 = NULL;
    obj->uv12_c1 = NULL;
    //obj->y8_r8_c2 = NULL;
    obj->uv8_g8_c3 = NULL;
    obj->s8_b8_c4 = NULL;
    obj->histogram = NULL;
    obj->h3a_aew_af = NULL;
    obj->display_image = NULL;
    unsigned int image_width = obj->width_in;
    unsigned int image_height = obj->height_in;
    tivx_raw_image raw_image = 0;
    vx_user_data_object capture_config;
    vx_uint8 num_capture_frames = 1;
    tivx_capture_params_t local_capture_config;
    uint32_t buf_id;
    const vx_char capture_user_data_object_name[] = "tivx_capture_params_t";
    uint32_t sensor_features_enabled = 0;
    uint32_t sensor_features_supported = 0;
    uint32_t sensor_wdr_enabled = 0;
    uint32_t sensor_exp_control_enabled = 0;
    uint32_t sensor_gain_control_enabled = 0;
    obj->yuv_cam_input = vx_false_e;
    //uint32_t img_fmt;
    //vx_image viss_out_image = NULL;
    //vx_image ldc_in_image = NULL;
    vx_image capt_yuv_image = NULL;
    vx_image capt_nv12_image = NULL;
    uint8_t channel_mask = (1<<obj->selectedCam);
    vx_uint32 params_list_depth = 4;
    // if (obj->test_mode == 1) {
    //     params_list_depth++;
    // }
    vx_graph_parameter_queue_params_t graph_parameters_queue_params_list[params_list_depth];

    printf("Querying %s \n", obj->sensor_name);
    memset(&sensorParams, 0, sizeof(sensorParams));
    status = appQueryImageSensor(obj->sensor_name, &sensorParams);
    //if (VX_SUCCESS != status) {
    //    printf("appQueryImageSensor returned %d\n", status);
    //    return status;
    //}
    if (sensorParams.sensorInfo.raw_params.format[0].pixel_container == VX_DF_IMAGE_UYVY) {
        obj->yuv_cam_input = vx_true_e;
        printf("YUV Input selected. VISS and AEWB nodes will be bypassed. \n");
    }

    //Jeff: ask camera id for Honda
    uint32_t cameraID = 0;
    status = appReadRegImageSensor(obj->sensor_name, channel_mask, 0xA0, &cameraID);
    printf("read camera id, return %d, id:%d\n", status, cameraID);
    //if (!is_belong_to_correct_camera_id(cameraID))
    //{
    //    cameraID = 1;
    //    printf("camera id is set to %d\n", cameraID);
    //}
    obj->CameraID = cameraID;
    //img_fmt = sensorParams.sensorInfo.raw_params.format[0].pixel_container;

    /*
    Check for supported sensor features.
    It is up to the application to decide which features should be enabled.
    This demo app enables WDR, DCC and 2A if the sensor supports it.
    */
    sensor_features_supported = sensorParams.sensorInfo.features;

    //Jeff add RGB cam input
    if (vx_false_e == obj->yuv_cam_input) {
        if (ISS_SENSOR_FEATURE_COMB_COMP_WDR_MODE == (sensor_features_supported & ISS_SENSOR_FEATURE_COMB_COMP_WDR_MODE)) {
            APP_PRINTF("WDR mode is supported \n");
            sensor_features_enabled |= ISS_SENSOR_FEATURE_COMB_COMP_WDR_MODE;
            sensor_wdr_enabled = 1;
            obj->sensor_wdr_mode = 1;
        } else {
            APP_PRINTF("WDR mode is not supported. Defaulting to linear \n");
            sensor_features_enabled |= ISS_SENSOR_FEATURE_LINEAR_MODE;
            sensor_wdr_enabled = 0;
            obj->sensor_wdr_mode = 0;
        }
        if (ISS_SENSOR_FEATURE_MANUAL_EXPOSURE == (sensor_features_supported & ISS_SENSOR_FEATURE_MANUAL_EXPOSURE)) {
            APP_PRINTF("Exposure control is supported \n");
            sensor_features_enabled |= ISS_SENSOR_FEATURE_MANUAL_EXPOSURE;
            sensor_exp_control_enabled = 1;
        }
        if (ISS_SENSOR_FEATURE_MANUAL_GAIN == (sensor_features_supported & ISS_SENSOR_FEATURE_MANUAL_GAIN)) {
            APP_PRINTF("Gain control is supported \n");
            sensor_features_enabled |= ISS_SENSOR_FEATURE_MANUAL_GAIN;
            sensor_gain_control_enabled = 1;
        }
        if (ISS_SENSOR_FEATURE_CFG_UC1 == (sensor_features_supported & ISS_SENSOR_FEATURE_CFG_UC1)) {
            APP_PRINTF("CMS Usecase is supported \n");
            sensor_features_enabled |= ISS_SENSOR_FEATURE_CFG_UC1;
        }
        switch (sensorParams.sensorInfo.aewbMode) {
            case ALGORITHMS_ISS_AEWB_MODE_NONE:
                obj->aewb_cfg.ae_mode = ALGORITHMS_ISS_AE_DISABLED;
                obj->aewb_cfg.awb_mode = ALGORITHMS_ISS_AWB_DISABLED;
                break;
            case ALGORITHMS_ISS_AEWB_MODE_AWB:
                obj->aewb_cfg.ae_mode = ALGORITHMS_ISS_AE_DISABLED;
                obj->aewb_cfg.awb_mode = ALGORITHMS_ISS_AWB_AUTO;
                break;
            case ALGORITHMS_ISS_AEWB_MODE_AE:
                obj->aewb_cfg.ae_mode = ALGORITHMS_ISS_AE_AUTO;
                obj->aewb_cfg.awb_mode = ALGORITHMS_ISS_AWB_DISABLED;
                break;
            case ALGORITHMS_ISS_AEWB_MODE_AEWB:
                obj->aewb_cfg.ae_mode = ALGORITHMS_ISS_AE_AUTO;
                obj->aewb_cfg.awb_mode = ALGORITHMS_ISS_AWB_AUTO;
                break;
        }
        if (obj->aewb_cfg.ae_mode == ALGORITHMS_ISS_AE_DISABLED) {
            if (sensor_exp_control_enabled || sensor_gain_control_enabled ) {
                obj->aewb_cfg.ae_mode = ALGORITHMS_ISS_AE_MANUAL;
            }
        }
        // keven Add 20250204
        sensorParams.sensorInfo.aewbMode = ALGORITHMS_ISS_AEWB_MODE_AEWB;
        obj->aewb_cfg.ae_mode = ALGORITHMS_ISS_AE_AUTO;
        obj->aewb_cfg.awb_mode = ALGORITHMS_ISS_AWB_AUTO;
        // keven Add 20250204
        APP_PRINTF("obj->aewb_cfg.ae_mode = %d\n", obj->aewb_cfg.ae_mode);
        APP_PRINTF("obj->aewb_cfg.awb_mode = %d\n", obj->aewb_cfg.awb_mode);
    }

    if (ISS_SENSOR_FEATURE_DCC_SUPPORTED == (sensor_features_supported & ISS_SENSOR_FEATURE_DCC_SUPPORTED)) {
        sensor_features_enabled |= ISS_SENSOR_FEATURE_DCC_SUPPORTED;
        APP_PRINTF("Sensor DCC is enabled \n");
    } else {
        APP_PRINTF("Sensor DCC is NOT enabled \n");
    }

    obj->sensor_features_enabled=sensor_features_enabled;
    APP_PRINTF("Sensor width = %d\n", sensorParams.sensorInfo.raw_params.width);
    APP_PRINTF("Sensor height = %d\n", sensorParams.sensorInfo.raw_params.height);
    APP_PRINTF("Sensor DCC ID = %d\n", sensorParams.dccId);
    APP_PRINTF("Sensor Supported Features = 0x%x\n", sensor_features_supported);
    APP_PRINTF("Sensor Enabled Features = 0x%x\n", sensor_features_enabled);

    sensor_init_status = appInitImageSensor(obj->sensor_name, sensor_features_enabled, channel_mask);/*Mask = 1 for camera # 0*/
    if (0 != sensor_init_status) {
        /* Not returning failure because application may be waiting for
           error/test frame */
        printf("Error initializing sensor %s \n", obj->sensor_name);
    }

    image_width = sensorParams.sensorInfo.raw_params.width;
    image_height = sensorParams.sensorInfo.raw_params.height;
    obj->cam_dcc_id = sensorParams.dccId;
    obj->width_in = image_width;
    obj->height_in = image_height;

    /*
    Assuming same dataformat for all exposures.
    This may not be true for staggered HDR. Will be handled later
    for(count = 0;count<raw_params.num_exposures;count++)
    {
        memcpy(&(raw_params.format[count]), &(sensorProperties.sensorInfo.dataFormat), sizeof(tivx_raw_image_format_t));
    }
    */

    /*
    Sensor driver does not support metadata yet.
    */

    APP_PRINTF("Creating graph \n");
    obj->graph = vxCreateGraph(obj->context);
    if (status == VX_SUCCESS) {
        status = vxGetStatus((vx_reference) obj->graph);
    }

    APP_ASSERT(vx_true_e == tivxIsTargetEnabled(TIVX_TARGET_VPAC_VISS1));

    APP_PRINTF("Initializing params for capture node \n");
    /* Setting to num buf of capture node */
    obj->num_cap_buf = NUM_BUFS;

    if (vx_false_e == obj->yuv_cam_input) {
        APP_PRINTF("Creating raw image for capture \n");
        raw_image = tivxCreateRawImage(obj->context, &sensorParams.sensorInfo.raw_params);
        /* allocate Input and Output refs, multiple refs created to allow pipelining of graph */
        for (buf_id=0; buf_id<obj->num_cap_buf; buf_id++) {
            if (status == VX_SUCCESS) {
                obj->cap_frames[buf_id] = vxCreateObjectArray(obj->context, (vx_reference)raw_image, num_capture_frames);
                status = vxGetStatus((vx_reference) obj->cap_frames[buf_id]);
            }
        }
    } else {
        APP_PRINTF("Creating image for capture \n");
        capt_yuv_image = vxCreateImage(
            obj->context,
            sensorParams.sensorInfo.raw_params.width,
            sensorParams.sensorInfo.raw_params.height,
            //img_fmt
            VX_DF_IMAGE_UYVY
        );
        /* allocate Input and Output refs, multiple refs created to allow pipelining of graph */
        for (buf_id=0; buf_id<obj->num_cap_buf; buf_id++) {
            if (status == VX_SUCCESS) {
                obj->cap_frames[buf_id] = vxCreateObjectArray(obj->context, (vx_reference)capt_yuv_image, num_capture_frames);
                status = vxGetStatus((vx_reference) obj->cap_frames[buf_id]);
            }
        }
    }

    /* Config initialization */
    tivx_capture_params_init(&local_capture_config);
    local_capture_config.timeout = 33;
    local_capture_config.timeoutInitial = 500;
    //Jeff, set only for MAX96718F with only one camera
    //Jeff: force to use csi1 for Honda
    obj->use_csi1 = 1;
    if (obj->use_csi1 == 1) {
        local_capture_config.numInst = 1U;/* Configure a single instance (CSI1) */
        local_capture_config.numCh = 1U;/* Single cam. Only 1 channel enabled */
        local_capture_config.instId[0] = 1; //use CSI1
        local_capture_config.instCfg[0].enableCsiv2p0Support = (uint32_t)vx_true_e;
        local_capture_config.instCfg[0].numDataLanes = sensorParams.sensorInfo.numDataLanes;
        local_capture_config.instCfg[0].laneBandSpeed = sensorParams.sensorInfo.csi_laneBandSpeed;
        vx_uint8 id;
        for (id = 0; id < local_capture_config.instCfg[0].numDataLanes; id++) {
            local_capture_config.instCfg[0].dataLanesMap[id] = id + 1;
        }
        for (id = 0; id < 4; id++) {
            local_capture_config.chVcNum[id] = id;
            local_capture_config.chInstMap[id] = 1;
        }
    } else /* if use_csi1 == 0 */ {
        local_capture_config.numInst = 2U;/* Configure both instances */
        local_capture_config.numCh = 1U;/* Single cam. Only 1 channel enabled */
        {
            vx_uint8 ch, id, lane, q;
            for (id = 0; id < local_capture_config.numInst; id++) {
                local_capture_config.instId[id] = id;
                local_capture_config.instCfg[id].enableCsiv2p0Support = (uint32_t)vx_true_e;
                local_capture_config.instCfg[id].numDataLanes = sensorParams.sensorInfo.numDataLanes;
                local_capture_config.instCfg[id].laneBandSpeed = sensorParams.sensorInfo.csi_laneBandSpeed;
                for (lane = 0; lane < local_capture_config.instCfg[id].numDataLanes; lane++) {
                    local_capture_config.instCfg[id].dataLanesMap[lane] = lane + 1;
                }
                for (q = 0; q < NUM_CAPT_CHANNELS; q++) {
                    ch = (NUM_CAPT_CHANNELS-1)* id + q;
                    local_capture_config.chVcNum[ch] = q;
                    local_capture_config.chInstMap[ch] = id;
                }
            }
        }
        local_capture_config.chInstMap[0] = obj->selectedCam/NUM_CAPT_CHANNELS;
        local_capture_config.chVcNum[0] = obj->selectedCam%NUM_CAPT_CHANNELS;
    }

    capture_config = vxCreateUserDataObject(obj->context, capture_user_data_object_name, sizeof(tivx_capture_params_t), &local_capture_config);
    APP_PRINTF("capture_config = 0x%p \n", capture_config);

    APP_PRINTF("Creating capture node \n");
    obj->capture_node = tivxCaptureNode(
        obj->graph,
        capture_config,
        obj->cap_frames[0]);
    APP_PRINTF("obj->capture_node = 0x%p \n", obj->capture_node);

    if (status == VX_SUCCESS) {
        status = vxReleaseUserDataObject(&capture_config);
    }
    if (status == VX_SUCCESS) {
        status = vxSetNodeTarget(obj->capture_node, VX_TARGET_STRING, TIVX_TARGET_CAPTURE2);
    }

    capt_nv12_image = vxCreateImage(obj->context, image_width, image_height, VX_DF_IMAGE_NV12);
    for (buf_id=0; buf_id<obj->num_cap_buf; buf_id++) {
        obj->mosaic_frames[buf_id] = vxCreateObjectArray(obj->context, (vx_reference)capt_nv12_image, 4);
        //obj->y8_r8_c2[buf_id] = (vx_image) vxGetObjectArrayItem(obj->mosaic_frames[buf_id], 0);
    }
    vxReleaseImage(&capt_nv12_image);

    if (vx_false_e == obj->yuv_cam_input) {
        obj->raw = (tivx_raw_image)vxGetObjectArrayItem(obj->cap_frames[0], 0);
        if (status == VX_SUCCESS) {
            status = tivxReleaseRawImage(&raw_image);
        }
#ifdef _APP_DEBUG_
        obj->fs_test_raw_image = tivxCreateRawImage(obj->context, &(sensorParams.sensorInfo.raw_params));
        if (NULL != obj->fs_test_raw_image) {
            if (status == VX_SUCCESS) {
                status = read_test_image_raw(NULL, obj->fs_test_raw_image, obj->test_mode);
            } else {
                status = tivxReleaseRawImage(&obj->fs_test_raw_image);
                obj->fs_test_raw_image = NULL;
            }
        }
#endif //_APP_DEBUG_
        status = app_create_viss(obj, sensor_wdr_enabled);
        if (VX_SUCCESS == status) {
            vxSetNodeTarget(obj->node_viss, VX_TARGET_STRING, TIVX_TARGET_VPAC_VISS1);
            //tivxSetNodeParameterNumBufByIndex(obj->node_viss, 6u, obj->num_cap_buf);
        } else {
            printf("app_create_viss failed \n");
            return VX_FAILURE;
        }
        status = app_create_aewb(obj, sensor_wdr_enabled);
        if (VX_SUCCESS != status) {
            printf("app_create_aewb failed \n");
            return VX_FAILURE;
        }
        //viss_out_image = obj->y8_r8_c2;
        //ldc_in_image = viss_out_image;
    } else {
        obj->capt_yuv_image = (vx_image)vxGetObjectArrayItem(obj->cap_frames[0], 0);
        //ldc_in_image = obj->capt_yuv_image;
        vxReleaseImage(&capt_yuv_image);
    }

    if (1)
    {
        obj->fake_yuv_image = vxCreateImage(
            obj->context,
            sensorParams.sensorInfo.raw_params.width,
            sensorParams.sensorInfo.raw_params.height,
            VX_DF_IMAGE_NV12);
        if (NULL != obj->fake_yuv_image)
        {
            status = load_input_image(
                obj,
                obj->fake_yuv_image,
                sensorParams.sensorInfo.raw_params.width,
                sensorParams.sensorInfo.raw_params.height);
        }
    }

    //add opengl mosaic node for Honda
    memset(&obj->opengl_params, 0, sizeof(tivx_opengl_mosaic_params_t));
    //if (obj->showFisheye)
    obj->opengl_params.renderType = TIVX_KERNEL_OPENGL_MOSAIC_TYPE_1x1; // show Fisheye
    //else
    //obj->opengl_params.renderType = TIVX_KERNEL_OPENGL_MOSAIC_TYPE_VIEW; // show realCamera
    switch(cameraID)
    {
        case 1:
        default:
            obj->opengl_params.rect = obj->camera1rect;
            break;
        case 2:
            obj->opengl_params.rect = obj->camera2rect;
            break;
        case 3:
            obj->opengl_params.rect = obj->camera3rect;
            break;
        case 5:
            obj->opengl_params.rect = obj->camera5rect;
            break;
    }
    obj->opengl_params.location = obj->host_location;
    obj->opengl_params.camerID = cameraID;
    obj->opengl_param_obj = vxCreateUserDataObject(obj->context, "tivx_opengl_mosaic_params_t", sizeof(tivx_opengl_mosaic_params_t), &obj->opengl_params);
    status = vxGetStatus((vx_reference) obj->opengl_param_obj);
#if 0
    for (buf_id=0; buf_id<obj->num_cap_buf; buf_id++)
        obj->mosaic_out_image[buf_id] = vxCreateImage(
            obj->context,
            obj->display_params.outWidth,
            obj->display_params.outHeight, VX_DF_IMAGE_RGBX);
#endif
    obj->openglNode = tivxOpenglMosaicNode(
        obj->graph,
        obj->opengl_param_obj,
        obj->mosaic_frames[0],
        //obj->mosaic_out_image[0]);
        (vx_image)vxGetObjectArrayItem((vx_object_array)obj->vaOutput_image, 0));
    if (status == VX_SUCCESS) {
        status = vxReleaseUserDataObject(&obj->opengl_param_obj);
    }
    if (status == VX_SUCCESS) {
        status = vxSetNodeTarget(obj->openglNode, VX_TARGET_STRING, TIVX_TARGET_A72_0);
    }

    //reset display_image to mosaic result image
    //obj->display_image = obj->mosaic_out_image[0];
    obj->display_image = (vx_image)vxGetObjectArrayItem((vx_object_array)obj->vaOutput_image, 0); //0);
    if (NULL == obj->display_image) {
        printf("Error : Display input is uninitialized \n");
        return VX_FAILURE;
    } else {
#if ENABLE_DISPLAY
        obj->display_params.posX = 0;
        obj->display_params.posY = 0;
        obj->display_param_obj = vxCreateUserDataObject(obj->context, "tivx_display_params_t", sizeof(tivx_display_params_t), &obj->display_params);
        obj->displayNode = tivxDisplayNode(
            obj->graph,
            obj->display_param_obj,
            obj->display_image);
#endif
    }

#if ENABLE_DISPLAY
    if (status == VX_SUCCESS) {
        status = vxSetNodeTarget(obj->displayNode, VX_TARGET_STRING, TIVX_TARGET_DISPLAY1);
        APP_PRINTF("Display Set Target done\n");
    }
#endif

    int graph_parameter_num = 0;

    /* input @ node index 1, becomes graph parameter 0 */
    add_graph_parameter_by_node_index(obj->graph, obj->capture_node, 1);
    /* set graph schedule config such that graph parameter @ index 0 is enqueuable */
    graph_parameters_queue_params_list[graph_parameter_num].graph_parameter_index = graph_parameter_num;
    graph_parameters_queue_params_list[graph_parameter_num].refs_list_size = obj->num_cap_buf;
    graph_parameters_queue_params_list[graph_parameter_num].refs_list = (vx_reference*)&(obj->cap_frames[0]);
    obj->graph_parameter_num_capt = graph_parameter_num;
    graph_parameter_num++;

    add_graph_parameter_by_node_index(obj->graph, obj->openglNode, 1);
    graph_parameters_queue_params_list[graph_parameter_num].graph_parameter_index = graph_parameter_num;
    graph_parameters_queue_params_list[graph_parameter_num].refs_list_size = obj->num_cap_buf;
    graph_parameters_queue_params_list[graph_parameter_num].refs_list = (vx_reference*)&(obj->mosaic_frames[0]);
    obj->graph_parameter_num_mosaic = graph_parameter_num;
    graph_parameter_num++;

#if 0
    //firmware update
    add_graph_parameter_by_node_index(obj->graph, obj->openglNode, 2);
    graph_parameters_queue_params_list[graph_parameter_num].graph_parameter_index = graph_parameter_num;
    graph_parameters_queue_params_list[graph_parameter_num].refs_list_size = obj->num_cap_buf;
    graph_parameters_queue_params_list[graph_parameter_num].refs_list = (vx_reference*)&(obj->mosaic_out_image[0]);
    obj->graph_parameter_num_outsaic = graph_parameter_num;
    graph_parameter_num++;
#endif

    if (vx_false_e == obj->yuv_cam_input) {
        add_graph_parameter_by_node_index(obj->graph, obj->node_viss, 6);
        graph_parameters_queue_params_list[graph_parameter_num].graph_parameter_index = graph_parameter_num;
        graph_parameters_queue_params_list[graph_parameter_num].refs_list_size = obj->num_cap_buf;
        graph_parameters_queue_params_list[graph_parameter_num].refs_list = (vx_reference*)&(obj->y8_r8_c2[0]);
        obj->graph_parameter_num_viss = graph_parameter_num;
        graph_parameter_num++;
    }

    if (obj->test_mode == 1) {
#if ENABLE_DISPLAY
        add_graph_parameter_by_node_index(obj->graph, obj->displayNode, 1);
#endif
        /* set graph schedule config such that graph parameter @ index 0 is enqueuable */
        graph_parameters_queue_params_list[graph_parameter_num].graph_parameter_index = graph_parameter_num;
        graph_parameters_queue_params_list[graph_parameter_num].refs_list_size = 1;
        graph_parameters_queue_params_list[graph_parameter_num].refs_list = (vx_reference*)&(obj->display_image);
        obj->graph_parameter_num_display = graph_parameter_num;
        graph_parameter_num++;
    }

    if (status == VX_SUCCESS) {
        status = tivxSetGraphPipelineDepth(obj->graph, obj->num_cap_buf);
    }

    /* Schedule mode auto is used, here we don't need to call vxScheduleGraph
     * Graph gets scheduled automatically as refs are enqueued to it
     */
    if (status == VX_SUCCESS) {
        status = vxSetGraphScheduleConfig(obj->graph,
                    VX_GRAPH_SCHEDULE_MODE_QUEUE_AUTO,
                    graph_parameter_num,
                    graph_parameters_queue_params_list
                    );
    }
    APP_PRINTF("vxSetGraphScheduleConfig done\n");

    if (status == VX_SUCCESS) {
        status = vxVerifyGraph(obj->graph);
    }
    if (status == VX_SUCCESS) {
        status = tivxExportGraphToDot(obj->graph, ".", "single_cam_graph");
    }

#ifdef _APP_DEBUG_
    if (vx_false_e == obj->yuv_cam_input) {
        if ((NULL != obj->fs_test_raw_image) && (NULL != obj->capture_node) && (status == VX_SUCCESS)) {
            status = app_send_test_frame(obj->capture_node, obj->fs_test_raw_image);
        }
    }
#endif //_APP_DEBUG_

    APP_PRINTF("app_create_graph exiting\n");
    return status;
}
```
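When `use_csi1` is 0, `app_create_graph()` routes the selected camera port with `chInstMap[0] = selectedCam / NUM_CAPT_CHANNELS` and `chVcNum[0] = selectedCam % NUM_CAPT_CHANNELS`. The code currently forces `use_csi1 = 1`, so that branch is not taken, but the mapping can be checked in isolation with the plain-C sketch below. `csi_route_t` and `port_to_csi_route()` are hypothetical names, and the range check assumes the two CSI-2 instances configured in that branch.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_CAPT_CHANNELS (4u) /* virtual channels per CSI-2 instance, as above */

typedef struct {
    uint32_t inst_id; /* CSI-2 receiver instance */
    uint32_t vc_num;  /* virtual channel within that instance */
} csi_route_t;

/* Hypothetical helper mirroring chInstMap[0] / chVcNum[0] in app_create_graph(). */
static csi_route_t port_to_csi_route(uint32_t selected_cam)
{
    assert(selected_cam < 2u * NUM_CAPT_CHANNELS); /* two instances configured */
    csi_route_t route;
    route.inst_id = selected_cam / NUM_CAPT_CHANNELS;
    route.vc_num = selected_cam % NUM_CAPT_CHANNELS;
    return route;
}
```

For example, port 5 maps to instance 1, virtual channel 1, and port 7 maps to instance 1, virtual channel 3.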

```c
vx_status app_delete_graph(AppObj *obj) {
    uint32_t buf_id;
    vx_status status = VX_SUCCESS;

    if (obj->capture_node) {
        APP_PRINTF("releasing capture node\n");
        status |= vxReleaseNode(&obj->capture_node);
    }
    if (obj->node_viss) {
        APP_PRINTF("releasing node_viss\n");
        status |= vxReleaseNode(&obj->node_viss);
    }
    if (obj->node_aewb) {
        APP_PRINTF("releasing node_aewb\n");
        status |= vxReleaseNode(&obj->node_aewb);
    }
#if ENABLE_DISPLAY
    if (obj->displayNode) {
        APP_PRINTF("releasing displayNode\n");
        status |= vxReleaseNode(&obj->displayNode);
    }
#endif
    if (obj->openglNode) {
        APP_PRINTF("releasing openglNode\n");
        status |= vxReleaseNode(&obj->openglNode);
    }

    /* firmware update */
    for (buf_id=0; buf_id<obj->num_cap_buf; buf_id++) {
        if (obj->mosaic_out_image[buf_id]) {
            APP_PRINTF("releasing mosaic_frames # %d\n", buf_id);
            status |= vxReleaseImage(&(obj->mosaic_out_image[buf_id]));
        }
    }

    status |= tivxReleaseRawImage(&obj->raw);
    APP_PRINTF("releasing raw image done\n");

    for (buf_id=0; buf_id<obj->num_cap_buf; buf_id++) {
        if (obj->cap_frames[buf_id]) {
            APP_PRINTF("releasing cap_frame # %d\n", buf_id);
            status |= vxReleaseObjectArray(&(obj->cap_frames[buf_id]));
        }
    }
    for (buf_id=0; buf_id<obj->num_cap_buf; buf_id++) {
        if (obj->mosaic_frames[buf_id]) {
            APP_PRINTF("releasing mosaic_frames # %d\n", buf_id);
            status |= vxReleaseObjectArray(&(obj->mosaic_frames[buf_id]));
        }
    }
    for (buf_id=0; buf_id<obj->num_viss_out_buf; buf_id++) {
        if (obj->viss_out_luma[buf_id]) {
            APP_PRINTF("releasing y8 buffer # %d\n", buf_id);
            status |= vxReleaseImage(&(obj->viss_out_luma[buf_id]));
        }
    }

#if MULTI_CHANNEL_DISPLAY
    if (obj->vaOutput_image)
        status |= vxReleaseObjectArray(&obj->vaOutput_image);
#endif

    if (obj->capt_yuv_image) {
        APP_PRINTF("releasing capt_yuv_image\n");
        status |= vxReleaseImage(&obj->capt_yuv_image);
    }
    if (obj->y12) {
        APP_PRINTF("releasing y12\n");
        status |= vxReleaseImage(&obj->y12);
    }
    if (obj->uv12_c1) {
        APP_PRINTF("releasing uv12_c1\n");
        status |= vxReleaseImage(&obj->uv12_c1);
    }
    if (obj->s8_b8_c4) {
        APP_PRINTF("releasing s8_b8_c4\n");
        status |= vxReleaseImage(&obj->s8_b8_c4);
    }
    if (obj->y8_r8_c2[0]) {
        APP_PRINTF("releasing y8_r8_c2\n");
        for (int i=0; i<obj->num_cap_buf; i++)
            status |= vxReleaseImage(&obj->y8_r8_c2[i]);
    }
    if (obj->uv8_g8_c3) {
        APP_PRINTF("releasing uv8_g8_c3\n");
        status |= vxReleaseImage(&obj->uv8_g8_c3);
    }
    if (obj->histogram) {
        APP_PRINTF("releasing histogram\n");
        status |= vxReleaseDistribution(&obj->histogram);
    }
    if (obj->configuration) {
        APP_PRINTF("releasing configuration\n");
        status |= vxReleaseUserDataObject(&obj->configuration);
    }
    if (obj->ae_awb_result) {
        status |= vxReleaseUserDataObject(&obj->ae_awb_result);
        APP_PRINTF("releasing ae_awb_result done\n");
    }
    if (obj->h3a_aew_af) {
        APP_PRINTF("releasing h3a_aew_af\n");
        status |= vxReleaseUserDataObject(&obj->h3a_aew_af);
    }
    if (obj->aewb_config) {
        APP_PRINTF("releasing aewb_config\n");
        status |= vxReleaseUserDataObject(&obj->aewb_config);
    }
    if (obj->dcc_param_viss) {
        APP_PRINTF("releasing VISS DCC Data Object\n");
        status |= vxReleaseUserDataObject(&obj->dcc_param_viss);
    }
    if (obj->display_param_obj) {
        APP_PRINTF("releasing Display Param Data Object\n");
        status |= vxReleaseUserDataObject(&obj->display_param_obj);
    }
    if (obj->dcc_param_2a) {
        APP_PRINTF("releasing 2A DCC Data Object\n");
        status |= vxReleaseUserDataObject(&obj->dcc_param_2a);
    }
    if (obj->dcc_param_ldc) {
        APP_PRINTF("releasing LDC DCC Data Object\n");
```

status |= vxReleaseUserDataObject(&obj->dcc_param_ldc);

}

if (obj->ldc_enable) {

if (obj->mesh_img) {

APP_PRINTF("releasing LDC Mesh Image \n");

status |= vxReleaseImage(&obj->mesh_img);

}

if (obj->ldc_out) {

APP_PRINTF("releasing LDC Output Image \n");

status |= vxReleaseImage(&obj->ldc_out);

}

if (obj->mesh_params_obj) {

APP_PRINTF("releasing LDC Mesh Parameters Object\n");

status |= vxReleaseUserDataObject(&obj->mesh_params_obj);

}

if (obj->ldc_param_obj) {

APP_PRINTF("releasing LDC Parameters Object\n");

status |= vxReleaseUserDataObject(&obj->ldc_param_obj);

}

if (obj->region_params_obj) {

APP_PRINTF("releasing LDC Region Parameters Object\n");

status |= vxReleaseUserDataObject(&obj->region_params_obj);

}

if (obj->node_ldc) {

APP_PRINTF("releasing LDC Node \n");

status |= vxReleaseNode(&obj->node_ldc);

}

}

#ifdef _APP_DEBUG_

if (obj->fs_test_raw_image) {

APP_PRINTF("releasing test raw image buffer # %d\n", buf_id);

status |= tivxReleaseRawImage(&obj->fs_test_raw_image);

}

if (obj->fake_yuv_image) {

APP_PRINTF("releasing test yuv image buffer # %d\n", buf_id);

status |= vxReleaseImage(&obj->fake_yuv_image);

}

#endif

APP_PRINTF("Before releasing graph. status=%d\n", status);

status |= vxReleaseGraph(&obj->graph);

APP_PRINTF("releasing graph done\n");

return status;

}

vx_status app_init_sensor_plug(AppObj *obj, char *objName) // for sensor re-plug

{

vx_status status = VX_SUCCESS;

int32_t sensor_init_status = VX_FAILURE;

uint8_t ch_mask = obj->ch_mask;

sensor_init_status = appInitImageSensor(obj->sensor_name, obj->sensor_features_enabled, ch_mask);

if (sensor_init_status) {

// printf("[casperdebug]---------> status =%d , %s , %d \n",sensor_init_status,__FUNCTION__,__LINE__);

printf("Error initializing sensor %s \n", obj->sensor_name);

status = VX_FAILURE;

}

// else printf("[casperdebug]---------> ch_mask =%d , %s , %d \n",ch_mask,__FUNCTION__,__LINE__);

return status;

}

vx_status app_run_graph(AppObj *obj) {

vx_status status = VX_SUCCESS;

vx_uint32 i;

vx_uint32 frm_loop_cnt;

//static uint32_t DetSDCardCnt;

uint32_t buf_id;

uint32_t num_refs_capture;

vx_object_array out_capture_frames;

vx_object_array in_mosaic_frames;

//vx_image out_mosaic_image;

vx_image out_viss_image;

vx_reference vrRefs[1];

tivx_display_select_channel_params_t stChannel_prms;

vx_user_data_object voSwitch_ch_obj;

updateStatus=3;

printf("========================updateStatus=3=======================\n");

#if 0

static uint32_t DetSDCardCnt;

#endif

int graph_parameter_num = 0;

uint64_t cameraDetect=0;

uint8_t channel_mask = (1<<obj->selectedCam);

if (NULL == obj->sensor_name) {

printf("sensor name is NULL \n");

return VX_FAILURE;

}

status = appStartImageSensor(obj->sensor_name, channel_mask);

if (status < 0) {

printf("Failed to start sensor %s \n", obj->sensor_name);

if (NULL != obj->fs_test_raw_image) {

printf("Defaulting to file test mode \n");

status = 0;

}

}

for (buf_id=0; buf_id<obj->num_cap_buf; buf_id++) {

if (status == VX_SUCCESS) {

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_capt, (vx_reference*)&(obj->cap_frames[buf_id]), 1);

}

if ((status == VX_SUCCESS) && (obj->yuv_cam_input == vx_false_e)) {

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_viss, (vx_reference*)&(obj->y8_r8_c2[buf_id]), 1);

}

if (status == VX_SUCCESS) {

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_mosaic, (vx_reference*)&(obj->mosaic_frames[buf_id]), 1);

}

#if 0

/* firmware update */

if (status == VX_SUCCESS) {

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_outsaic, (vx_reference*)&(obj->mosaic_out_image[buf_id]), 1);

}

#endif

#if 0

/* in order for the graph to finish execution, the

display still needs to be enqueued 4 times for testing */

if ((status == VX_SUCCESS) && (obj->test_mode == 1)) {

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_display, (vx_reference*)&(obj->display_image), 1);

}

#endif

}

/*

The application reads and processes the same image "frm_loop_cnt" times

The output may change because on VISS, parameters are updated every frame based on AEWB results

AEWB result is available after 1 frame and is applied after 2 frames

Therefore, first 2 output images will have wrong colors

*/

frm_loop_cnt = obj->num_frames_to_run;

frm_loop_cnt += obj->num_cap_buf;

if (obj->is_interactive) {

/* in interactive mode loop for ever */

frm_loop_cnt = 0xFFFFFFFF;

}

#ifdef A72

#if defined(LINUX)

if (obj->node_ldc != NULL) {

appDccUpdatefromFS(obj->sensor_name, obj->sensor_wdr_mode,

obj->node_aewb, 0,

obj->node_viss, 0,

obj->node_ldc, 0,

obj->context);

}

#endif

#endif

for (i=0; i<frm_loop_cnt; i++) {

vx_image test_image;

appPerfPointBegin(&obj->total_perf);

//Jeff: disable for testing

#if 0 //fa

DetSDCardCnt++;

if (DetSDCardCnt > 20)

{

app_DetSDCard(obj);

DetSDCardCnt=0;

}

#endif

if (status == VX_SUCCESS) {

status = vxGraphParameterDequeueDoneRef(obj->graph, obj->graph_parameter_num_capt, (vx_reference*)&out_capture_frames, 1, &num_refs_capture);

}

if ((status == VX_SUCCESS) && (obj->yuv_cam_input == vx_false_e)) {

status = vxGraphParameterDequeueDoneRef(obj->graph, obj->graph_parameter_num_viss, (vx_reference*)&out_viss_image, 1, &num_refs_capture);

}

if (status == VX_SUCCESS) {

status = vxGraphParameterDequeueDoneRef(obj->graph, obj->graph_parameter_num_mosaic, (vx_reference*)&in_mosaic_frames, 1, &num_refs_capture);

}

#if 0

/* firmware update */

if (status == VX_SUCCESS) {

status = vxGraphParameterDequeueDoneRef(obj->graph, obj->graph_parameter_num_outsaic, (vx_reference*)&out_mosaic_image, 1, &num_refs_capture);

}

#endif

printf("status=%d updateStatus=%d\n", status, updateStatus);

//updateStatus=0;

#if MULTI_CHANNEL_DISPLAY

if ((status == VX_SUCCESS) && (U_DONOTHING == updateStatus))

//if (0)

{

stChannel_prms.active_channel_id = 0;

voSwitch_ch_obj = vxCreateUserDataObject(

obj->context,

"tivx_display_select_channel_params_t",

sizeof(tivx_display_select_channel_params_t),

&stChannel_prms);

status = vxGetStatus((vx_reference) voSwitch_ch_obj);

if (status == VX_SUCCESS)

{

vrRefs[0] = (vx_reference)voSwitch_ch_obj;

vxCopyUserDataObject(

voSwitch_ch_obj,

0,

sizeof(tivx_display_select_channel_params_t),

&stChannel_prms,

VX_WRITE_ONLY,

VX_MEMORY_TYPE_HOST);

status = tivxNodeSendCommand(obj->displayNode, 0, TIVX_DISPLAY_SELECT_CHANNEL, vrRefs, 1u);

APP_PRINTF("\e[1;33m tivxNodeSendCommand(a) : %d \e[0m\n", status);

vxReleaseUserDataObject(&voSwitch_ch_obj);

} else {

APP_PRINTF("\e[1;33m vxCreateUserDataObject returns %d. \e[0m\n", status);

}

}

if ((status == VX_SUCCESS) && (U_DONOTHING != updateStatus))

//if (1)

{

//status = vxGraphParameterDequeueDoneRef(obj->graph, obj->graph_parameter_num_display, (vx_reference*)vxGetObjectArrayItem((vx_object_array)obj->vaOutput_image, 1), 1, &num_refs_capture);

stChannel_prms.active_channel_id = 1;

voSwitch_ch_obj = vxCreateUserDataObject(

obj->context,

"tivx_display_select_channel_params_t",

sizeof(tivx_display_select_channel_params_t),

&stChannel_prms);

status = vxGetStatus((vx_reference) voSwitch_ch_obj);

if (status == VX_SUCCESS)

{

vrRefs[0] = (vx_reference)voSwitch_ch_obj;

vxCopyUserDataObject(

voSwitch_ch_obj,

0,

sizeof(tivx_display_select_channel_params_t),

&stChannel_prms,

VX_WRITE_ONLY,

VX_MEMORY_TYPE_HOST);

status = tivxNodeSendCommand(obj->displayNode, 0, TIVX_DISPLAY_SELECT_CHANNEL, vrRefs, 1u);

APP_PRINTF("\e[1;33m tivxNodeSendCommand(v) : %d \e[0m\n", status);

vxReleaseUserDataObject(&voSwitch_ch_obj);

} else {

APP_PRINTF("\e[1;33m vxCreateUserDataObject returns %d. \e[0m\n", status);

}

}

#endif

#if 1

if ((status == VX_SUCCESS) && (obj->test_mode == 1)) {

status = vxGraphParameterDequeueDoneRef(obj->graph, obj->graph_parameter_num_display, (vx_reference*)&test_image, 1, &num_refs_capture);

}

if ((obj->test_mode == 1) && (i > TEST_BUFFER) && (status == VX_SUCCESS)) {

vx_uint32 actual_checksum = 0;

if (app_test_check_image(test_image, checksums_expected[obj->sensor_sel][0], &actual_checksum) == vx_false_e) {

test_result = vx_false_e;

}

populate_gatherer(obj->sensor_sel, 0, actual_checksum);

}

// if (i % 100 == 0)

// APP_PRINTF(" i %d...\n", i);

if ((status == VX_SUCCESS) && (obj->test_mode == 1)) {

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_display, (vx_reference*)&test_image, 1);

}

#endif

if (status == VX_SUCCESS && obj->yuv_cam_input == vx_false_e) { // RAW format

vx_image dst_img = (vx_image)vxGetObjectArrayItem(in_mosaic_frames, 0);

if (obj->fakeImg == 0) // from camera

nv12_copy_to(out_viss_image, dst_img);

else // from static image

nv12_copy_to(obj->fake_yuv_image, dst_img);

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_mosaic, (vx_reference*)&in_mosaic_frames, 1);

vxReleaseImage(&dst_img);

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_viss, (vx_reference*)&out_viss_image, 1);

}

if (status == VX_SUCCESS && obj->yuv_cam_input == vx_true_e) { // YUV format

vx_image dst_img = (vx_image)vxGetObjectArrayItem(in_mosaic_frames, 0);

vx_image src_img = (vx_image)vxGetObjectArrayItem(out_capture_frames, 0);

vxuColorConvert(obj->context, src_img, dst_img);

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_mosaic, (vx_reference*)&in_mosaic_frames, 1);

vxReleaseImage(&dst_img);

vxReleaseImage(&src_img);

}

#if 0

#if MULTI_CHANNEL_DISPLAY

if ((status == VX_SUCCESS) && (U_DONOTHING != updateStatus))

{

change_status_msg(obj, updateStatus);

vx_image dst_img = (vx_image)vxGetObjectArrayItem((vx_object_array)obj->vaOutput_image, 1);

vxuColorConvert(obj->context, obj->viMsg, dst_img);

//status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_display, (vx_reference*)vxGetObjectArrayItem((vx_object_array)obj->vaOutput_image, 1), 1);

printf("20250227 status=%d\n", status);

vxReleaseImage(&dst_img);

}

#endif

#endif

#if 0

/* firmware update */

if (status == VX_SUCCESS) { /* if do nothing, directly set orig to dest */

//vx_image dst_img = out_mosaic_image;

//vx_image src_img = out_mosaic_image;

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_outsaic, (vx_reference*)&out_mosaic_image, 1);

//vxReleaseImage(&dst_img);

//vxReleaseImage(&src_img);

}

#endif

if (status == VX_SUCCESS) {

status = vxGraphParameterEnqueueReadyRef(obj->graph, obj->graph_parameter_num_capt, (vx_reference*)&out_capture_frames, 1);

}

graph_parameter_num++;

// for detect camera plug or not

if (cameraDetect>0 && (cameraDetect % 60 ==0)) {

#if 0

if (TTE_detectSensor(obj->context,obj->capture_node,&(obj->ch_mask),chmask) != chmask)

{

if (appCheckCameraPlug( obj->ch_mask) == 0)

{

status = app_init_sensor_plug(obj, "sensor_obj");

if (status == VX_SUCCESS)

appStartImageSensor(obj->sensor_name,obj->ch_mask);

else

printf("Config failed\n");

}

}

#endif

}

cameraDetect++;

appPerfPointEnd(&obj->total_perf);

if ((obj->stop_task) || (status != VX_SUCCESS)) {

break;

}

}

if (status == VX_SUCCESS) {

status = vxWaitGraph(obj->graph);

}

/* Dequeue buf for pipe down */

#if 0

for (buf_id=0; buf_id<obj->num_cap_buf-2; buf_id++) {

APP_PRINTF(" Dequeuing capture # %d...\n", buf_id);

graph_parameter_num = 0;

vxGraphParameterDequeueDoneRef(obj->graph, graph_parameter_num, (vx_reference*)&out_capture_frames, 1, &num_refs_capture);

graph_parameter_num++;

}

#endif

if (status == VX_SUCCESS) {

status = appStopImageSensor(obj->sensor_name, channel_mask);

}

return status;

}

static void app_run_task(void *app_var) {

AppObj *obj = (AppObj *)app_var;

appPerfStatsCpuLoadResetAll();

app_run_graph(obj);

obj->stop_task_done = 1;

}

static int32_t app_run_task_create(AppObj *obj) {

tivx_task_create_params_t params;

int32_t status;

tivxTaskSetDefaultCreateParams(&params);

params.task_main = app_run_task;

params.app_var = obj;

obj->stop_task_done = 0;

obj->stop_task = 0;

status = tivxTaskCreate(&obj->task, &params);

return status;

}

static void app_run_task_delete(AppObj *obj) {

while (obj->stop_task_done==0) {

tivxTaskWaitMsecs(100);

}

tivxTaskDelete(&obj->task);

}

static char menu[] = {

"\n"

"\n =========================="

"\n Demo : Single Camera w/ 2A"

"\n =========================="

"\n"

"\n p: Print performance statistics"

"\n"

"\n s: Save Sensor RAW, VISS Output and H3A output images to File System"

"\n"

"\n e: Export performance statistics"

#ifdef A72

#if defined(LINUX)

"\n"

"\n u: Update DCC from File System"

"\n"

"\n"

#endif

#endif

"\n x: Exit"

"\n"

"\n Enter Choice: "

};

static vx_status app_run_graph_interactive(AppObj *obj) {

vx_status status;

uint32_t done = 0;

char ch;

FILE *fp;

app_perf_point_t *perf_arr[1];

uint8_t channel_mask = (1<<obj->selectedCam);

status = app_run_task_create(obj);

if (status) {

printf("ERROR: Unable to create task\n");

} else {

appPerfStatsResetAll();

while (!done && (status == VX_SUCCESS)) {

printf(menu);

ch = getchar();

printf("\n");

switch(ch) {

case 'p':

appPerfStatsPrintAll();

status = tivx_utils_graph_perf_print(obj->graph);

appPerfPointPrint(&obj->total_perf);

printf("\n");

appPerfPointPrintFPS(&obj->total_perf);

appPerfPointReset(&obj->total_perf);

printf("\n");

break;

case 's':

save_debug_images(obj);

break;

case 'e':

perf_arr[0] = &obj->total_perf;

fp = appPerfStatsExportOpenFile(".", "basic_demos_app_single_cam");

if (NULL != fp)

{

appPerfStatsExportAll(fp, perf_arr, 1);

status = tivx_utils_graph_perf_export(fp, obj->graph);

appPerfStatsExportCloseFile(fp);

appPerfStatsResetAll();

} else {

printf("fp is null\n");

}

break;

#ifdef A72

#if defined(LINUX)

case 'u':

appDccUpdatefromFS(obj->sensor_name, obj->sensor_wdr_mode,

obj->node_aewb, 0,

obj->node_viss, 0,

obj->node_ldc, 0,

obj->context);

break;

#endif

#endif

case 'x':

obj->stop_task = 1;

done = 1;

break;

default:

printf("Unsupported command %c\n", ch);

break;

}

fflush (stdin);

}

app_run_task_delete(obj);

}

if (status == VX_SUCCESS) {

status = appStopImageSensor(obj->sensor_name, channel_mask);

}

return status;

}

static void app_show_usage(int argc, char* argv[]) {

printf("\n");

printf(" Single Camera Demo - (c) Texas Instruments 2019\n");

printf(" ========================================================\n");

printf("\n");

printf(" Usage,\n");

printf(" %s --cfg <config file> --use_csi1 --fake --num_frames_to_run <frames> --showFisheye\n", argv[0]);

printf("\n");

}

#ifdef A72

#if defined(LINUX)

int appSingleCamUpdateVpacDcc(AppObj *obj, uint8_t* dcc_buf, uint32_t dcc_buf_size)

{

int32_t status = 0;

status = appUpdateVpacDcc(dcc_buf, dcc_buf_size, obj->context,

obj->node_viss, 0,

obj->node_aewb, 0,

obj->node_ldc, 0

);

return status;

}

#endif

#endif

static void app_parse_cfg_file(AppObj *obj, char *cfg_file_name) {

FILE *fp = fopen(cfg_file_name, "r");

char line_str[1024];

char *token;

BOOL camera1Inited = FALSE;

BOOL camera2Inited = FALSE;

BOOL camera3Inited = FALSE;

BOOL camera5Inited = FALSE;

int start_x = 0, start_y = 0, end_x = 0, end_y = 0;

if (fp==NULL) {

printf("# ERROR: Unable to open config file [%s]. Switching to interactive mode\n", cfg_file_name);

obj->is_interactive = 1;

} else {

while (fgets(line_str, sizeof(line_str), fp)!=NULL) {

char s[]=" \t";

if (strchr(line_str, '#')) {

continue;

}

/* get the first token */

token = strtok(line_str, s);

if (NULL != token) {

if (!strcmp(token, "sensor_index")) {

token = strtok(NULL, s);

if (NULL != token) {

obj->sensor_sel = atoi(token);

printf("sensor_selection = [%d]\n", obj->sensor_sel);

}

} else if (!strcmp(token, "ldc_enable")) {

token = strtok(NULL, s);

if (NULL != token) {

obj->ldc_enable = atoi(token);

printf("ldc_enable = [%d]\n", obj->ldc_enable);

}

} else if (!strcmp(token, "in_size")) {

token = strtok(NULL, s);

if (NULL != token) {

obj->width_in = atoi(token);

printf("width_in = [%d]\n", obj->width_in);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

obj->height_in = atoi(token);

printf("height_in = [%d]\n", obj->height_in);

}

}

} else if (!strcmp(token, "out_size")) {

token = strtok(NULL, s);

if (NULL != token) {

obj->width_out = atoi(token);

printf("width_out = [%d]\n", obj->width_out);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

obj->height_out = atoi(token);

printf("height_out = [%d]\n", obj->height_out);

}

}

} else if (!strcmp(token, "use_csi1")) {

token = strtok(NULL, s);

if (NULL != token) {

obj->use_csi1 = atoi(token);

printf("use_csi1 = [%d]\n", obj->use_csi1);

}

} else if (!strcmp(token, "num_frames_to_run")) {

token = strtok(NULL, s);

if (NULL != token) {

obj->num_frames_to_run = atoi(token);

printf("num_frames_to_run = [%d]\n", obj->num_frames_to_run);

}

} else if (!strcmp(token, "is_interactive")) {

token = strtok(NULL, s);

if (NULL != token) {

obj->is_interactive = atoi(token);

printf("is_interactive = [%d]\n", obj->is_interactive);

}

} else if (!strcmp(token, "host_location")) {

token = strtok(NULL, s);

if (NULL != token) {

char v = token[0];

if (v == HOST_L || v == HOST_R)

{

obj->host_location = v;

printf("host_location = [%c]\n", obj->host_location);

vx_rectangle_t default_rect = left_camera_default_rect;

if (v == HOST_R)

{

default_rect = right_camera_default_rect;

}

if (!camera1Inited)

obj->camera1rect = default_rect;

if (!camera2Inited)

obj->camera2rect = default_rect;

if (!camera3Inited)

obj->camera3rect = default_rect;

if (!camera5Inited)

obj->camera5rect = default_rect;

}

}

} else if (!strcmp(token, "ROI1")) {

token = strtok(NULL, s);

if (NULL != token) {

start_x = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

start_y = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

end_x = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

end_y = atoi(token);

camera1Inited = TRUE;

obj->camera1rect.start_x = start_x;

obj->camera1rect.start_y = start_y;

obj->camera1rect.end_x = end_x;

obj->camera1rect.end_y = end_y;

printf("ROI1: %d %d %d %d\n", start_x, start_y, end_x, end_y);

}

}

}

}

} else if (!strcmp(token, "ROI2")) {

token = strtok(NULL, s);

if (NULL != token) {

start_x = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

start_y = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

end_x = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

end_y = atoi(token);

camera2Inited = TRUE;

obj->camera2rect.start_x = start_x;

obj->camera2rect.start_y = start_y;

obj->camera2rect.end_x = end_x;

obj->camera2rect.end_y = end_y;

printf("ROI2: %d %d %d %d\n", start_x, start_y, end_x, end_y);

}

}

}

}

} else if (!strcmp(token, "ROI3")) {

token = strtok(NULL, s);

if (NULL != token) {

start_x = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

start_y = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

end_x = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

end_y = atoi(token);

camera3Inited = TRUE;

obj->camera3rect.start_x = start_x;

obj->camera3rect.start_y = start_y;

obj->camera3rect.end_x = end_x;

obj->camera3rect.end_y = end_y;

printf("ROI3: %d %d %d %d\n", start_x, start_y, end_x, end_y);

}

}

}

}

} else if (!strcmp(token, "ROI5")) {

token = strtok(NULL, s);

if (NULL != token) {

start_x = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

start_y = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

end_x = atoi(token);

token = strtok(NULL, s);

if (token != NULL) {

if (token[strlen(token)-1] == '\n')

token[strlen(token)-1]=0;

end_y = atoi(token);

camera5Inited = TRUE;

obj->camera5rect.start_x = start_x;

obj->camera5rect.start_y = start_y;

obj->camera5rect.end_x = end_x;

obj->camera5rect.end_y = end_y;

printf("ROI5: %d %d %d %d\n", start_x, start_y, end_x, end_y);

}

}

}

}

} else {

if (strlen(token) > 2)

APP_PRINTF("Invalid token [%s]\n", token);

}

}

}

fclose(fp);

}

if (obj->width_in < 128)

obj->width_in = 128;

if (obj->height_in < 128)

obj->height_in = 128;

if (obj->width_out < 128)

obj->width_out = 128;

if (obj->height_out < 128)

obj->height_out = 128;

}
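The `ROI1`, `ROI2`, `ROI3` and `ROI5` branches of `app_parse_cfg_file()` repeat the same four-token parsing, which makes ordering mistakes easy to introduce. A minimal sketch, using the standard `sscanf()` instead of the `strtok()` chain, of a single helper that parses `start_x start_y end_x end_y` from the rest of the line; `parse_roi()` and the local `roi_t` type are hypothetical (the application stores the result in `vx_rectangle_t` fields with the same names).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>

/* Hypothetical stand-in for vx_rectangle_t, so the sketch builds without OpenVX. */
typedef struct {
    int start_x, start_y, end_x, end_y;
} roi_t;

/* Parses "<start_x> <start_y> <end_x> <end_y>" from args (the text after the
 * ROIn keyword). Returns true and fills *out only if all four integers are
 * present; *out is left untouched otherwise. */
bool parse_roi(const char *args, roi_t *out)
{
    roi_t r;
    assert(args != NULL && out != NULL);
    /* %d skips leading spaces, tabs and newlines, so a trailing '\n' needs no
     * manual stripping. */
    if (sscanf(args, "%d %d %d %d",
               &r.start_x, &r.start_y, &r.end_x, &r.end_y) != 4) {
        return false;
    }
    *out = r;
    return true;
}
```

After `token = strtok(line_str, s)` matches `"ROI5"`, the remainder of the line could be obtained with `strtok(NULL, "\n")` and passed to `parse_roi()`; on success, the four fields would be copied into `obj->camera5rect` and `camera5Inited` set to `TRUE`, and likewise for the other ROIs.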

vx_status app_parse_cmd_line_args(AppObj *obj, int argc, char *argv[]) {

vx_bool set_test_mode = vx_false_e;

vx_uint8 sensor_override = 0xFF; /* unsigned, so the later "!= 0xFF" check works */

int i;

app_set_cfg_default(obj);

if (argc == 1) {

app_show_usage(argc, argv);

printf("Defaulting to interactive mode \n");

obj->is_interactive = 1;

return VX_SUCCESS;

}

for (i=0; i<argc; i++) {

if (!strcmp(argv[i], "--cfg")) {

i++;

if (i>=argc) {

app_show_usage(argc, argv);

return VX_FAILURE;

}

app_parse_cfg_file(obj, argv[i]);

} else if (!strcmp(argv[i], "--help")) {

app_show_usage(argc, argv);

return VX_FAILURE;

} else if (!strcmp(argv[i], "--test")) {

set_test_mode = vx_true_e;

} else if (!strcmp(argv[i], "--sensor")) {

// check to see if there is another argument following --sensor

if (argc > i+1) {

sensor_override = atoi(argv[i+1]);

// increment i again to avoid this arg

i++;

}

} else if (!strcmp(argv[i], "--use_csi1")) {

obj->use_csi1 = 1;

printf("use_csi1 = [%d]\n", obj->use_csi1);

} else if (!strcmp(argv[i], "--fake")) {

obj->fakeImg = 1;

printf("fakeImg = [%d]\n", obj->fakeImg);

} else if (!strcmp(argv[i], "--interactive")) {

obj->is_interactive = 1;

printf("is_interactive = [%d]\n", obj->is_interactive);

} else if (!strcmp(argv[i], "--num_frames_to_run")) {

if (argc > i+1) {

obj->num_frames_to_run = atoi(argv[i+1]);

// increment i again to avoid this arg

i++;

}

printf("num_frames_to_run = [%d]\n", obj->num_frames_to_run);

} else if (!strcmp(argv[i], "--showFisheye")) {

obj->showFisheye = 1;

printf("showFisheye = [%d]\n", obj->showFisheye);

}

}

if (set_test_mode == vx_true_e) {

obj->test_mode = 1;

obj->is_interactive = 0;

obj->num_frames_to_run = NUM_FRAMES;

if (sensor_override != 0xFF) {

obj->sensor_sel = sensor_override;

}

}

if (obj->use_csi1 == 0)

printf("use csi0\n");

else

printf("use csi1\n");

return VX_SUCCESS;

}

AppObj gAppObj;

int app_single_cam_main(int argc, char* argv[]) {

AppObj *obj = &gAppObj;

vx_status status = VX_FAILURE;

status = app_parse_cmd_line_args(obj, argc, argv);

if (VX_SUCCESS == status) {

status = app_init(obj);

if (VX_SUCCESS == status) {

APP_PRINTF("app_init done\n");

/* Not checking status because application may be waiting for

error/test frame */

app_create_graph(obj);

if (VX_SUCCESS == status) {

APP_PRINTF("app_create_graph done\n");

if (obj->is_interactive) {

status = app_run_graph_interactive(obj);

} else {

status = app_run_graph(obj);

}

if (VX_SUCCESS == status) {

APP_PRINTF("app_run_graph done\n");

status = app_delete_graph(obj);

if (VX_SUCCESS == status) {

APP_PRINTF("app_delete_graph done\n");

} else {

printf("Error : app_delete_graph returned 0x%x \n", status);

}

} else {

printf("Error : app_run_graph_xx returned 0x%x \n", status);

}

} else {

printf("Error : app_create_graph returned 0x%x is_interactive =%d \n", status, obj->is_interactive);

}

} else {

printf("Error : app_init returned 0x%x \n", status);

}

status = app_deinit(obj);

if (VX_SUCCESS == status) {

APP_PRINTF("app_deinit done\n");

} else {

printf("Error : app_deinit returned 0x%x \n", status);

}

appDeInitImageSensor(obj->sensor_name);

} else {

printf("Error: app_parse_cmd_line_args returned 0x%x \n", status);

}

if (obj->test_mode == 1) {

if ((test_result == vx_false_e) || (status == VX_FAILURE)) {

printf("\n\nTEST FAILED\n\n");

print_new_checksum_structs();

status = (status == VX_SUCCESS) ? VX_FAILURE : status;

} else {

printf("\n\nTEST PASSED\n\n");

}

}

return status;

}

vx_status app_send_test_frame(vx_node cap_node, tivx_raw_image raw_img)

{

vx_status status = VX_SUCCESS;

status = tivxCaptureRegisterErrorFrame(cap_node, (vx_reference)raw_img);

return status;

}

How can we solve this?

BR,

Jeff