Hi,

I am using SDK_10.00.00.08 for product defect detection on the SK-AM68. Running multiple models at 1920*1080 resolution works, but running multiple models at 3280*2464 fails. Running a single model works.

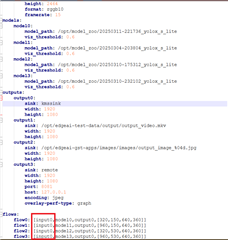

The configuration is as follows:

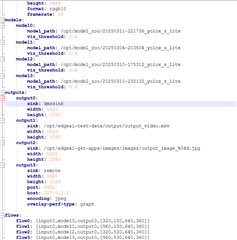

The error message is as follows: