Other Parts Discussed in Thread: TDA4VM


Hello,

We are seeing very poor detection results with the YOLOX pose model on the SK-AM68 board running Edge AI SDK 9.2, while the precompiled model performs well on a BeagleBone AI-64 (TDA4VM) running Edge AI SDK 8.2. The issue affects both TI’s precompiled model and our self-compiled model (built with edgeai-tidl-tools).

Issue Description

- Setup:
  - AM68: SK-AM68, Edge AI 9.2, YOLOX pose (TI’s r9.2 modelzoo + self-compiled).
  - TDA4VM: BeagleBone AI-64, Edge AI 8.2, YOLOX pose (TI’s r8.2 modelzoo + self-compiled).
  - Identical input data on both boards.
  - Inference using edge-ai-apps / edgeai-gst-apps.

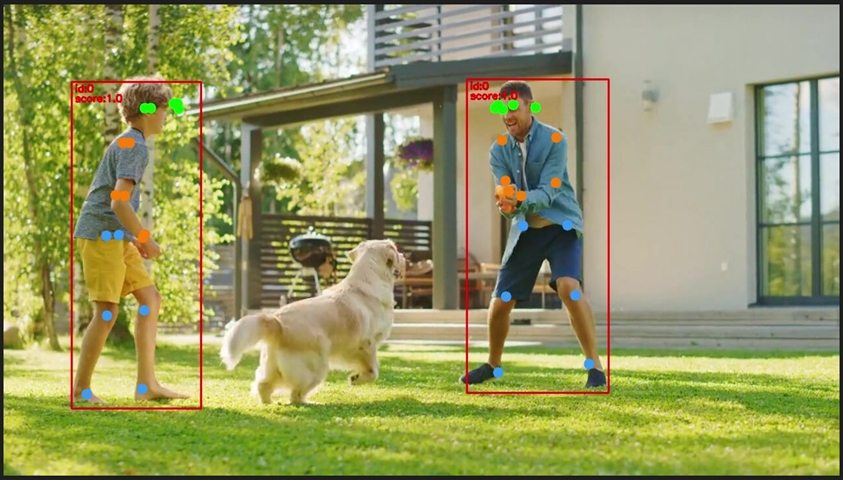

- Problem: On AM68, bounding boxes are inaccurate and have very low confidence scores, and people are often not detected at all. Lowering the confidence threshold produces false positives, and the pose estimates are also inaccurate. On TDA4VM, detection quality is similar to that of the original .pth model (see the images below).

- Experiments on AM68:
  - We experimented with several compilation parameters, including high_resolution, tensor_bits, quantization_scale_type and add_data_convert_ops, but the detection results remained very poor.
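A minimal sketch of how such a parameter sweep could be organized so that every combination is compiled into its own artifacts folder and can be compared on the same input. The option key names follow the edgeai-tidl-tools naming as we understand it (`tensor_bits`, `advanced_options:...`) and should be checked against the SDK 9.2 documentation; the candidate values and the artifacts path are assumptions for illustration, and the actual compilation call is deliberately left out.

```python
from itertools import product

# Assumed sweep: key names as we understand edgeai-tidl-tools to use them,
# candidate values chosen for illustration only (verify against SDK 9.2 docs).
SWEEP = {
    "tensor_bits": [8, 16],
    "advanced_options:high_resolution_optimization": [0, 1],
    "advanced_options:quantization_scale_type": [0, 1],
    "advanced_options:add_data_convert_ops": [0, 3],
}


def sweep_configs(base, sweep=SWEEP):
    """Yield one options dict per combination of sweep values, layered on top of base."""
    keys = list(sweep)
    for values in product(*(sweep[k] for k in keys)):
        cfg = dict(base)  # copy so the base options are never mutated
        cfg.update(zip(keys, values))
        yield cfg


def config_tag(cfg, keys=SWEEP):
    """Short, filesystem-friendly tag identifying one combination."""
    return "_".join(f"{k.split(':')[-1]}{cfg[k]}" for k in keys)


if __name__ == "__main__":
    for cfg in sweep_configs({}):
        # Hypothetical layout: one artifacts folder per combination.
        cfg["artifacts_folder"] = f"./model-artifacts/yolox_s_pose/{config_tag(cfg)}"
        print(cfg["artifacts_folder"])
```

Giving each combination its own folder keeps the compiled artifacts from overwriting each other, so every variant can later be run on the board with the same input and compared.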

Image comparison:

yolox_s on AM68

yolox_s on TDA4VM
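To put a number on the visual comparison, one option is to treat the reference detections (for example, from the .pth model or the TDA4VM board) as ground truth and measure recall and precision of the AM68 detections at a given confidence threshold. This is a minimal sketch that assumes the boxes have already been extracted from both outputs as `(x1, y1, x2, y2)` pixel coordinates on the same input image; the extraction step is not shown, and the 0.5 IoU threshold is a common but arbitrary choice.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels (assumed format)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_count(reference: List[Box], candidate: List[Box], iou_thr: float = 0.5) -> int:
    """Greedy one-to-one matching by descending IoU; returns the number of matches."""
    pairs = sorted(
        ((iou(r, c), i, j) for i, r in enumerate(reference) for j, c in enumerate(candidate)),
        reverse=True,
    )
    used_r, used_c, matches = set(), set(), 0
    for score, i, j in pairs:
        if score < iou_thr:
            break  # remaining pairs only have lower IoU
        if i in used_r or j in used_c:
            continue
        used_r.add(i)
        used_c.add(j)
        matches += 1
    return matches


def recall_precision(reference, detections, conf_thr, iou_thr=0.5):
    """detections: list of (box, score). Keeps boxes with score >= conf_thr."""
    kept = [box for box, score in detections if score >= conf_thr]
    m = match_count(reference, kept, iou_thr)
    recall = m / len(reference) if reference else 1.0
    precision = m / len(kept) if kept else 1.0  # vacuous precision when nothing is kept
    return recall, precision
```

Evaluating `recall_precision` at a few thresholds on the same frames would show the trade-off described above: missed people appear as low recall at the default threshold, and the false positives appear as falling precision once the threshold is lowered.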

We would very much appreciate your help in resolving this issue.

Regards