
Background: I followed the edgeai-modelmaker instructions step by step. The model trained, was converted to an ONNX file, and the model artifacts were generated successfully. However, when I checked the compilation run.log, some of the printouts did not look right to me.

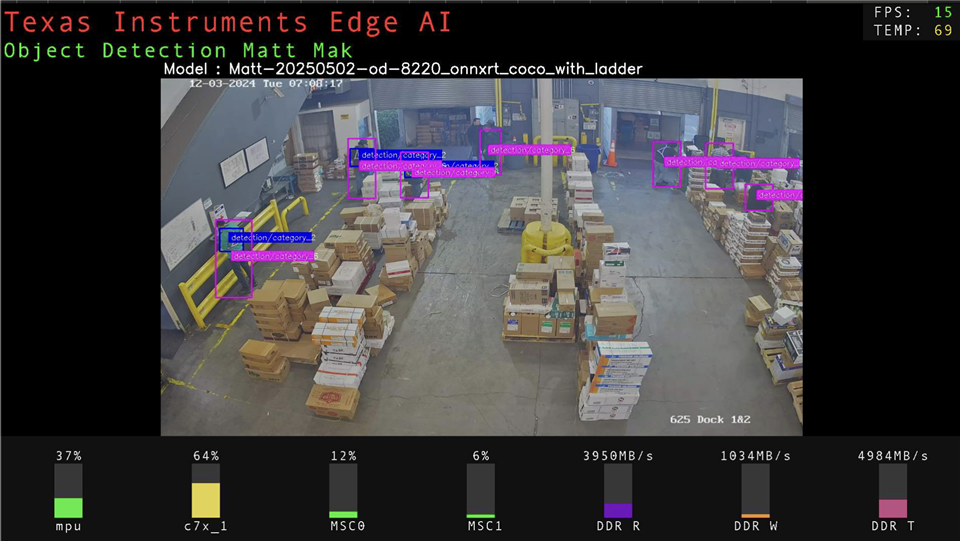

I also ran inference on the same image on the SK-AM62A-LP and found that the results differ. The inference results of the compiled model simulated on the host machine are significantly better than those produced on the SK-AM62A-LP. I suspect this is due to the potential compilation issue shown in the log.

0726.run.log

Hello,

I would like to ask what lines 47 and 48 of the compilation run.log mean: "Unable to find initializer at index - 1 for node 93" and "Unable to find initializer at index - 1 for node 109". I also see the same messages in my own compilation run.log.

My other question concerns something you mentioned: after "==================== [Optimization for subgraph_0 Started] ====================", "Invalid Layer Name" is printed repeatedly. I don't understand what it means.
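To make it easier to compare logs between runs, the two kinds of messages quoted above could be tallied with a small script. This is only a minimal sketch: the function name `summarize_log` is hypothetical, and the sample text is assembled from the lines quoted in this thread, not copied from the actual run.log.

```python
import re

# Pattern built from the message quoted in this thread. Whitespace around
# the "-" is matched loosely because the log prints "index - 1".
INIT_RE = re.compile(r"Unable to find initializer at index\s*-\s*1 for node (\d+)")
INVALID_LAYER = "Invalid Layer Name"


def summarize_log(text):
    """Return (node ids reported without an initializer, count of 'Invalid Layer Name' lines)."""
    nodes = []
    invalid = 0
    for line in text.splitlines():
        match = INIT_RE.search(line)
        if match:
            nodes.append(int(match.group(1)))
        if INVALID_LAYER in line:
            invalid += 1
    return nodes, invalid


# Illustrative sample only: composed from the quoted messages, not a real log.
sample = """\
Unable to find initializer at index - 1 for node 93
Unable to find initializer at index - 1 for node 109
==================== [Optimization for subgraph_0 Started] ====================
Invalid Layer Name
Invalid Layer Name
"""

nodes, invalid = summarize_log(sample)
print("nodes without initializer:", nodes)
print("'Invalid Layer Name' lines:", invalid)
```

For a real log, the text would be read from the file first, for example `summarize_log(open("run.log").read())`, assuming the log is saved under that name.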

The reason I raise these two questions is that I found that the inference results of the compiled model artifacts simulated on the host machine are significantly better than the results produced on the SK-AM62A-LP with the SAME model artifacts (please refer to https://e2e.ti.com/support/processors-group/processors/f/processors-forum/1498572/sk-am62a-lp-edgeai-modelmaker-which-shell-python-script-produces-outputs-folder?tisearch=e2e-sitesearch&keymatch=edgeai-tensorlab%20outputs%20folder# for more details). I suspect that the strange output in run.log is related to this issue. Perhaps some layers are not valid during subgraph optimization, which affects the performance of the model artifacts on the SK-AM62A-LP.
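To put a number on "significantly better", the raw output tensors from the host simulation and from the board could be compared directly for the same input image. The following is a rough sketch, assuming both outputs have already been dumped as flat lists of floats of equal length; the function name `compare_outputs` and the example values are hypothetical and are not measured results.

```python
import math


def compare_outputs(host, device):
    """Return (max absolute difference, cosine similarity) for two float lists."""
    if not host or len(host) != len(device):
        raise ValueError("outputs must be non-empty and of the same length")
    max_abs = max(abs(h - d) for h, d in zip(host, device))
    dot = sum(h * d for h, d in zip(host, device))
    norm = math.sqrt(sum(h * h for h in host)) * math.sqrt(sum(d * d for d in device))
    # Cosine similarity is undefined if either vector is all zeros.
    cosine = dot / norm if norm else float("nan")
    return max_abs, cosine


# Made-up values for illustration only, not real model outputs.
host_out = [0.1, 0.7, 0.2]
device_out = [0.1, 0.6, 0.3]

max_abs, cosine = compare_outputs(host_out, device_out)
print(f"max abs diff: {max_abs:.4f}, cosine similarity: {cosine:.4f}")
```

A cosine similarity close to 1 with a small maximum difference would point to ordinary numerical noise, while a large gap would support the suspicion that the two runs are not executing the same graph.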

I am looking forward to hearing from any of you. Thanks.

Regards,

Matt