
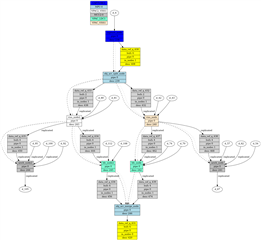

In the multicam codec application (SDK 9.2.0.5), I used a split node to branch the input into two LDC nodes so that two different LDC configurations can be applied.

I then created a merge node that combines the outputs of the two LDC nodes into a single vx_object_array, which is sent to the GStreamer pipeline.

The video is saved with the expected layout, but frames are overwritten in blocks equal to the encoding buffer size (6 frames): every other block of 6 frames is replaced by a repeat of the block before it.

For example, the frame numbers saved in the video look like this: 1,2,3,4,5,6,1,2,3,4,5,6,13,14,15,16,17,18,13,14,15,16,17,18,25,26 ...
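To make the pattern explicit, the reported sequence can be written as a simple mapping from the expected frame number to the frame that actually ends up in the file. This is only a model of the symptom, assuming the pattern continues as listed; it says nothing about the cause. The helpers `observed_frame` and `print_observed` are hypothetical names used for illustration.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Symptom model only (assumes the listed pattern continues):
 * maps the expected 1-based frame number to the frame number
 * observed in the saved video, for a buffer depth of `depth`. */
static uint32_t observed_frame(uint32_t expected, uint32_t depth)
{
    assert(expected >= 1u && depth > 0u);

    uint32_t block = (expected - 1u) / depth; /* 0-based block of `depth` frames */
    if (block % 2u == 1u) {
        return expected - depth; /* odd blocks repeat the previous block */
    }
    return expected;             /* even blocks are saved correctly */
}

/* Prints the first `count` observed frame numbers, comma-separated. */
static void print_observed(uint32_t count, uint32_t depth)
{
    for (uint32_t n = 1u; n <= count; n++) {
        printf("%u%s", (unsigned)observed_frame(n, depth), n < count ? "," : "\n");
    }
}
```

For example, `print_observed(26u, 6u)` prints the same 26 frame numbers listed above.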

I implemented the merge kernel's processing by following the split node's implementation, as shown in the code below.

Do you have any idea what could be causing this issue?

Thank you

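```c
/* Swaps the object descriptor IDs of one input object array with the
 * corresponding slice of the output object array, starting at `index`. */
static void swapObjArray(
    tivx_obj_desc_object_array_t *in_desc,
    tivx_obj_desc_t *in_elem_desc[],
    tivx_obj_desc_object_array_t *out_desc,
    tivx_obj_desc_t *out_elem_desc[],
    uint32_t index)
{
    uint32_t i, j = 0;

    for (i = index; i < index + in_desc->num_items; i++)
    {
        /* Input slot j takes over the output element's descriptor... */
        in_desc->obj_desc_id[j] = out_elem_desc[i]->obj_desc_id;
        /* ...and output slot i now points at the input element's descriptor. */
        out_desc->obj_desc_id[i] = in_elem_desc[j]->obj_desc_id;
        j++;
    }
}

/* ... */

/* In the kernel's process function: resolve the element descriptors
 * of both input arrays and of the output array. */
tivxGetObjDescList(in_desc->obj_desc_id, (tivx_obj_desc_t**)in_elem_desc, in_desc->num_items);
tivxGetObjDescList(in1_desc->obj_desc_id, (tivx_obj_desc_t**)in1_elem_desc, in1_desc->num_items);
tivxGetObjDescList(out_desc->obj_desc_id, (tivx_obj_desc_t**)out_elem_desc, out_desc->num_items);

/* First input fills output slots [index, index + in_desc->num_items). */
swapObjArray(in_desc, in_elem_desc, out_desc, out_elem_desc, index);
index += in_desc->num_items;

/* Second input fills the following slots. */
swapObjArray(in1_desc, in1_elem_desc, out_desc, out_elem_desc, index);
index += in1_desc->num_items; /* advance by the second array's size */
```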
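To make the descriptor swap easier to follow, here is a host-side sketch that runs the same loop on simplified stand-in types. `mock_desc_t`, `mock_obj_array_t`, `mock_swap` and `mock_merge` are hypothetical and are not TIOVX types or APIs. The sketch shows that after the two swaps the output array references the input elements' descriptors, while each input array is left holding the descriptors that previously belonged to the output.

```c
#include <assert.h>
#include <stdint.h>

#define MOCK_MAX_ITEMS 8u

/* Hypothetical, simplified stand-ins for the TIOVX descriptor types;
 * only the fields used by the swap are modeled. */
typedef struct {
    uint16_t obj_desc_id;
} mock_desc_t;

typedef struct {
    uint32_t num_items;
    uint16_t obj_desc_id[MOCK_MAX_ITEMS];
} mock_obj_array_t;

/* Same loop as swapObjArray: output slots [index, index + num_items) take the
 * input elements' IDs, and the input array takes the displaced output IDs. */
static void mock_swap(mock_obj_array_t *in_desc, mock_desc_t *in_elem[],
                      mock_obj_array_t *out_desc, mock_desc_t *out_elem[],
                      uint32_t index)
{
    uint32_t i, j = 0u;

    /* The slice must fit inside the output array. */
    assert(index + in_desc->num_items <= out_desc->num_items);
    for (i = index; i < index + in_desc->num_items; i++) {
        in_desc->obj_desc_id[j] = out_elem[i]->obj_desc_id;
        out_desc->obj_desc_id[i] = in_elem[j]->obj_desc_id;
        j++;
    }
}

/* Merges two input arrays into the output; returns the next free output slot. */
static uint32_t mock_merge(mock_obj_array_t *in0, mock_desc_t *in0_elem[],
                           mock_obj_array_t *in1, mock_desc_t *in1_elem[],
                           mock_obj_array_t *out, mock_desc_t *out_elem[])
{
    uint32_t index = 0u;

    mock_swap(in0, in0_elem, out, out_elem, index);
    index += in0->num_items;
    mock_swap(in1, in1_elem, out, out_elem, index);
    index += in1->num_items;
    return index;
}
```

With two 2-element inputs holding IDs {10, 11} and {20, 21} and a 4-element output holding {30, 31, 32, 33}, the output ends up as {10, 11, 20, 21} and the inputs as {30, 31} and {32, 33}. This host-side model does not capture graph pipelining or buffer depth, so it only illustrates the index bookkeeping.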