Other Parts Discussed in Thread: PROCESSOR-SDK-J721E, TDA4VH, AM69, DRA821


Hello, TI,

1. In the thread at e2e.ti.com/.../processor-sdk-j721e-pci_epf_test-pci_epf_test-1-failed-to-get-private-dma-rx-channel-falling-back-to-generic-one, you mentioned that the issue “PROCESSOR-SDK-J721E: pci_epf_test pci_epf_test.1: Failed to get private DMA rx channel. Falling back to generic one” would be fixed in the SDK 10 timeframe. Has it been resolved in the current SDK 10 release?

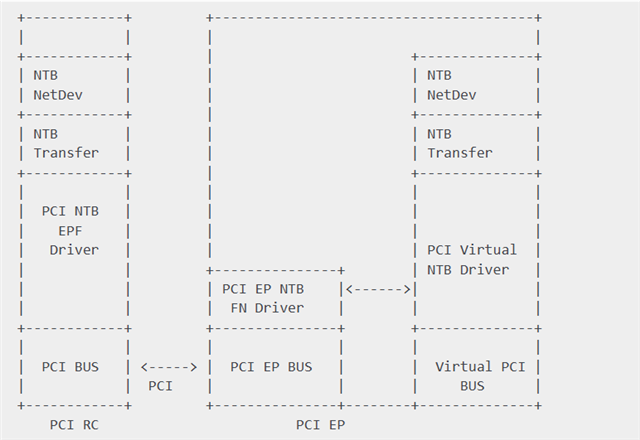

2. We are using RK3588 (RC) and TDA4 (EP) for PCIe communication.

   a. Without DMA: reading directly with `ioread32_rep`/`memcpy_fromio` in the RC-side driver returns correct data from the EP’s BAR space.

   b. With DMA: when the RC-side PCIe device driver uses DMA to read data from the EP’s BAR space, an error occurs. Only the first 4 bytes are correct, and the rest of the data is wrong.

      - With assistance from the RK FAE, debug logs show that the TDA4 returns a completion with “Unsupported Request” (UR) status to the RK3588.
      - They suggested checking the inbound configuration of the EP’s BAR space.

   c. Using similar code with two RK3588s interconnected via PCIe (RC <-> EP), driving the RC-side PCIe DMA communicates with the EP successfully.

Question: What could be the cause of the error in case 2.b, and how can it be resolved?

3. We have identified the code that programs the ATU inbound registers of the PCIe EP (call chain below). Is this the complete set of inbound configuration operations?

```text
pci_epf_test_set_bar(struct pci_epf *epf)
  --> pci_epc_set_bar(struct pci_epc *epc, u8 func_no, struct pci_epf_bar *epf_bar)
    --> cdns_pcie_ep_set_bar()   (pcie-cadence-ep.c)
```

```c
// pcie-cadence-ep.c
static int cdns_pcie_ep_set_bar(struct pci_epc *epc, u8 fn,
				struct pci_epf_bar *epf_bar)
{
	....
	dma_addr_t bar_phys = epf_bar->phys_addr;
	....
	/* Split the 64-bit BAR physical address into two 32-bit halves */
	addr0 = lower_32_bits(bar_phys);
	addr1 = upper_32_bits(bar_phys);
	....
	/* Program the inbound address translation for this function/BAR */
	cdns_pcie_writel(pcie, CDNS_PCIE_AT_IB_EP_FUNC_BAR_ADDR0(fn, bar),
			 addr0);
	cdns_pcie_writel(pcie, CDNS_PCIE_AT_IB_EP_FUNC_BAR_ADDR1(fn, bar),
			 addr1);
	...
}
```