
I'd like to deinterlace the video stream that the ti,j721e-csi2rx driver reports as "progressive".

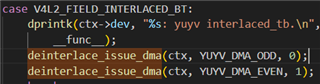

The isl7998x reports the field orders V4L2_FIELD_SEQ_BT and V4L2_FIELD_SEQ_TB. However, the TI CSI driver overwrites the field to progressive (V4L2_FIELD_NONE).

As a result, each captured frame contains two stacked, half-height copies of the image (one per field) instead of a single deinterlaced frame.
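For reference, V4L2 defines V4L2_FIELD_SEQ_TB and V4L2_FIELD_SEQ_BT as both fields stored one after the other in a single buffer, in temporal order, which is why the frame looks like two copies. Until the driver handles this, the buffer can be woven back into one frame on the CPU. The sketch below assumes a single-plane packed format (e.g. YUYV), a buffer height equal to the full frame height, and that the top field holds the even frame lines (0, 2, 4, ...). The enum and `weave_seq_fields` are hypothetical names, not part of V4L2.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical local stand-ins for the two V4L2 field orders. */
enum seq_field_order {
    SEQ_TB, /* like V4L2_FIELD_SEQ_TB: top-field lines first in the buffer */
    SEQ_BT  /* like V4L2_FIELD_SEQ_BT: bottom-field lines first */
};

/*
 * Weave a sequential-field buffer into one interlaced frame.
 * src and dst each hold `height` lines of `bytesperline` bytes, and
 * `height` must be even. Returns 0 on success, -1 on bad arguments.
 */
int weave_seq_fields(const unsigned char *src, unsigned char *dst,
                     size_t bytesperline, size_t height,
                     enum seq_field_order order)
{
    if (!src || !dst || height % 2 != 0)
        return -1;

    size_t field_lines = height / 2;
    size_t field_bytes = field_lines * bytesperline;

    /* Pick which half of the buffer holds each field. */
    const unsigned char *top = (order == SEQ_TB) ? src : src + field_bytes;
    const unsigned char *bottom = (order == SEQ_TB) ? src + field_bytes : src;

    for (size_t i = 0; i < field_lines; i++) {
        /* Top-field line i -> frame line 2i, bottom-field line i -> 2i+1. */
        memcpy(dst + (2 * i) * bytesperline, top + i * bytesperline, bytesperline);
        memcpy(dst + (2 * i + 1) * bytesperline, bottom + i * bytesperline, bytesperline);
    }
    return 0;
}
```

A pure weave like this only looks clean when there is little motion between the two fields; otherwise the result shows combing.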

I'd like help doing either:

Setting up an m2m-deinterlace device to decode the video stream.

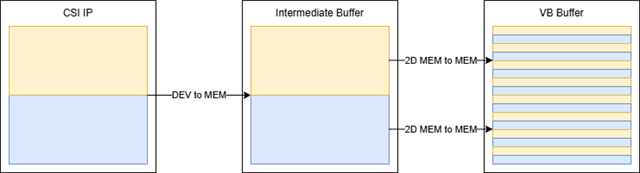

Patch ti,j721e-csi2rx, potentially ti_csi2rx_start_dma to issue two small interleaved DMAs (similar to m2m-deinterlace) when these flags are set (updating TI's driver to support these two specific field orders)
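Option 2 amounts to the same weave expressed as two DMA transfers: each field's lines are written to every other line of the destination buffer, starting at line 0 for the top field and line 1 for the bottom field. The userspace model below computes those two transfers and executes them with memcpy so the geometry can be checked before touching the driver. `struct field_xfer`, `plan_field_xfers` and `run_field_xfer` are hypothetical. In a kernel patch the rough equivalent would be a `struct dma_interleaved_template` (`numf` = lines per field, one `data_chunk` with `size` = bytes per line and `dst_icg` = bytes per line) passed to `dmaengine_prep_interleaved_dma()`; whether the DMA channel used by ti_csi2rx_start_dma accepts interleaved device-to-memory templates is an assumption that needs checking against the SoC's DMA engine driver.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified model of one interleaved transfer: copy
 * `numf` lines of `size` bytes from a contiguous source, skipping
 * `dst_icg` bytes in the destination after each line. */
struct field_xfer {
    size_t src_off;  /* byte offset of this field in the sequential buffer */
    size_t dst_off;  /* byte offset of the field's first line in the frame */
    size_t numf;     /* number of lines in the field */
    size_t size;     /* bytes per line */
    size_t dst_icg;  /* gap skipped in dst after each line (one line) */
};

/* Plan both transfers for a frame of `height` lines (must be even).
 * top_first is nonzero for SEQ_TB and zero for SEQ_BT. */
void plan_field_xfers(size_t bytesperline, size_t height, int top_first,
                      struct field_xfer xfer[2])
{
    size_t field_lines = height / 2;
    size_t field_bytes = field_lines * bytesperline;

    for (int f = 0; f < 2; f++) {
        /* xfer[f] moves the f-th field in memory (temporal order). */
        int is_top = (f == 0) == (top_first != 0);
        xfer[f].src_off = (size_t)f * field_bytes;
        xfer[f].dst_off = is_top ? 0 : bytesperline;
        xfer[f].numf = field_lines;
        xfer[f].size = bytesperline;
        xfer[f].dst_icg = bytesperline;
    }
}

/* Walk one transfer the way a DMA engine would, using memcpy. */
void run_field_xfer(const unsigned char *src, unsigned char *dst,
                    const struct field_xfer *x)
{
    for (size_t i = 0; i < x->numf; i++)
        memcpy(dst + x->dst_off + i * (x->size + x->dst_icg),
               src + x->src_off + i * x->size, x->size);
}
```

Running the two transfers back to back fills one buffer with an interleaved frame, so the video node could then report V4L2_FIELD_INTERLACED_TB or V4L2_FIELD_INTERLACED_BT instead of V4L2_FIELD_NONE.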