Hello,

I'm trying to initiate transmission of several Ethernet frames from the upper layers.

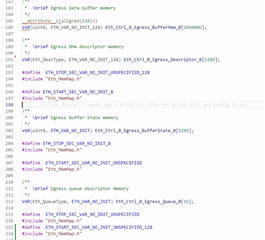

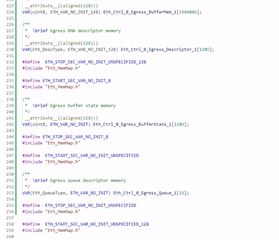

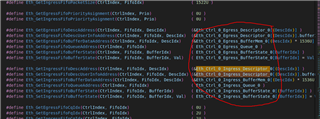

When the upper layer requests a buffer, Eth_Queue_remove() is called, which sets the Eth_CfgPtr->dmaCfgPtr->egressFifoCfgPtr->quePtr->head and tail pointers to NULL.

On the next buffer request, since these pointers are already NULL, the frame is not added to the queue and the Eth driver reports BUFFER_BUSY to the upper layer.

Those pointers are initialized in Eth_Queue_add(), which is called by Eth_TxConfirmation().
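
To make the sequence concrete, here is a minimal sketch of how I understand the queue to behave. It is not the driver source: `Node`, `Queue`, `queue_add()` and `queue_remove()` are hypothetical stand-ins for the real types and for Eth_Queue_add()/Eth_Queue_remove(), and I am assuming a singly linked list that holds only one buffer descriptor.

```c
#include <stddef.h>

/* Hypothetical stand-ins; NOT the real Eth driver definitions. */
typedef struct Node {
    struct Node *next;
} Node;

typedef struct {
    Node *head;
    Node *tail;
} Queue;

/* Assumed behaviour of Eth_Queue_add(): append a free descriptor. */
static void queue_add(Queue *q, Node *n)
{
    n->next = NULL;
    if (q->tail == NULL) {
        q->head = n;          /* queue was empty: head is re-initialized */
    } else {
        q->tail->next = n;
    }
    q->tail = n;
}

/* Assumed behaviour of Eth_Queue_remove(): take the first descriptor.
 * Removing the last one leaves head and tail at NULL. */
static Node *queue_remove(Queue *q)
{
    Node *n = q->head;
    if (n != NULL) {
        q->head = n->next;
        if (q->head == NULL) {
            q->tail = NULL;
        }
        n->next = NULL;
    }
    return n;                 /* NULL here would map to BUFFER_BUSY */
}
```

In this model, a second `queue_remove()` returns NULL until `queue_add()` has been called again, which would match what I see if Eth_TxConfirmation() has not run between the two buffer requests.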

Initially I had only one FIFO configured in the Eth driver. I tried adding a few more, but it made no difference.

Could you give me some hints on how to approach this issue?

Regards,

Octavian