Other Parts Discussed in Thread: SK-AM62-LP

Hi TI Team,

I am trying to run the IPC RPMessage Linux Echo demo application to test the RPMsg and LPM features on the SK-AM62-LP board.

I am following the steps mentioned in this link

Steps followed:

1. Loaded the R5F image during the tispl.bin boot stage.

2. Let Linux boot until it was up and running.

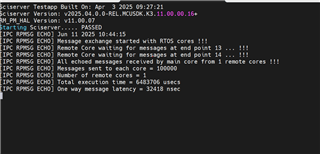

3. Entered these commands, and I can see some logs on the Linux terminal.

However, I don't see the output that the link says to expect on the wakeup UART. Also, nothing happens when I type in the UART terminal.
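To help narrow this down, a generic check along the following lines could show whether Linux sees the remote cores and any RPMsg devices at all. This is only a sketch and is not taken from the linked guide: it assumes the standard Linux remoteproc and rpmsg sysfs locations (`/sys/class/remoteproc` and `/sys/bus/rpmsg/devices`), and the function names `report_remoteprocs` and `report_rpmsg` are hypothetical helpers. The instance names and states reported depend on the device tree and firmware in use.

```shell
#!/bin/sh
# Sketch: summarize remoteproc instances and rpmsg devices visible to Linux.
# Assumption: standard sysfs paths; override the roots for other layouts.
RPROC_ROOT="${RPROC_ROOT:-/sys/class/remoteproc}"
RPMSG_ROOT="${RPMSG_ROOT:-/sys/bus/rpmsg/devices}"

# Print one line per remoteproc instance: name, state, and firmware file.
report_remoteprocs() {
    found=0
    for rp in "$RPROC_ROOT"/remoteproc*; do
        [ -d "$rp" ] || continue
        found=1
        name=$(cat "$rp/name" 2>/dev/null || echo unknown)
        state=$(cat "$rp/state" 2>/dev/null || echo unknown)
        fw=$(cat "$rp/firmware" 2>/dev/null || echo unknown)
        echo "$(basename "$rp"): name=$name state=$state firmware=$fw"
    done
    [ "$found" -eq 1 ] || echo "no remoteproc instances found under $RPROC_ROOT"
}

# Print the rpmsg devices announced by the remote cores, if any.
report_rpmsg() {
    found=0
    for dev in "$RPMSG_ROOT"/*; do
        [ -e "$dev" ] || continue
        found=1
        echo "rpmsg device: $(basename "$dev")"
    done
    [ "$found" -eq 1 ] || echo "no rpmsg devices found under $RPMSG_ROOT"
}

report_remoteprocs
report_rpmsg
```

If no rpmsg devices are listed, the remote firmware may not have announced its endpoints to Linux, which would be worth mentioning alongside the logs above.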

Did I miss something or make a mistake?

Regards

Mayank