
Hi, I have a question. When I compile my model with edgeai-tidl-tools version 11_00_06_00 and set `tensor_bits = 8`, the compiled model runs correctly, although its accuracy is too low. But when I change to `tensor_bits = 16`, the compiled model becomes completely unusable.

I also tried adding some layers to `output_feature_16bit_names_list`, but as soon as I add any layer there, the compiled model stops working entirely. Do you know why this happens?
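For reference, here is a minimal sketch of the three configurations being compared. It assumes the dict-based compile options used in the edgeai-tidl-tools Python (OSRT) examples, where `tensor_bits` and `advanced_options:output_feature_16bit_names_list` are passed as option keys and the 16-bit list is a comma-separated string of layer output names. The helper `make_compile_options`, the paths, and the layer names are hypothetical placeholders, not my actual settings.

```python
# Hypothetical sketch: option key names follow the edgeai-tidl-tools OSRT
# examples; paths and layer names below are placeholders for illustration.

def make_compile_options(tensor_bits, feature_16bit_layers=None):
    """Build a TIDL compile-options dict for one experiment (hypothetical helper)."""
    options = {
        "tidl_tools_path": "/path/to/tidl_tools",  # placeholder path
        "artifacts_folder": "./model-artifacts",   # placeholder path
        "tensor_bits": tensor_bits,
    }
    if feature_16bit_layers:
        # Comma-separated names of layer outputs to be kept in 16 bit
        options["advanced_options:output_feature_16bit_names_list"] = ",".join(
            feature_16bit_layers
        )
    return options


configs = {
    "8bit": make_compile_options(8),    # runs, but accuracy is too low
    "16bit": make_compile_options(16),  # compiled model unusable
    # 8 bit plus a few 16-bit layer outputs (hypothetical layer names)
    "8bit_mixed": make_compile_options(8, ["conv1_out", "head_out"]),
}
```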

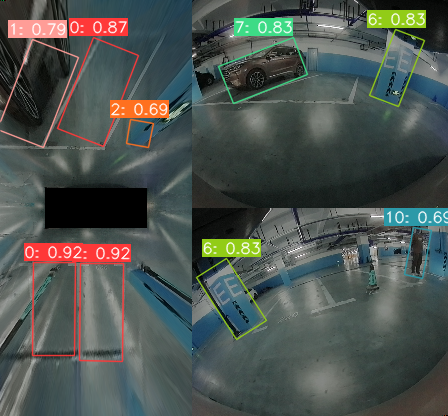

tensor_bits = 8:

tensor_bits = 16: