Dear TI Team,

I ran into some issues while measuring the latency between a timer interrupt firing and its callback being entered, and I would like to ask for your advice.

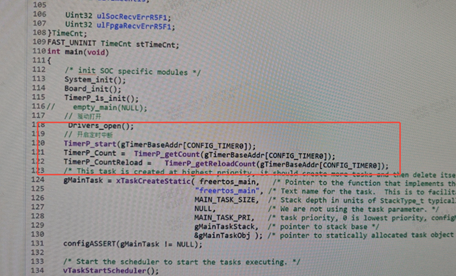

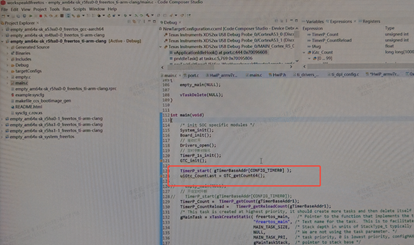

First, in an empty project, I configured a timer in SysConfig with the following parameters: a 25 MHz clock, interrupt priority 0, and a 1 ms period. On entry to the interrupt callback function, I read the counter value with TimerP_getCount and calculated the interrupt entry overhead from that count. During the test, I observed the following:

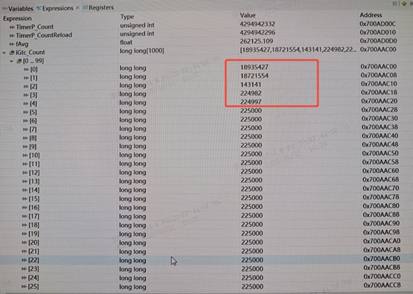

1. In a53ss0-0_nortos, r5fss0-0_freertos, and a53ss0-0_freertos, the interrupt entry overhead was significantly longer for the first few interrupts, reaching a maximum of over 700 microseconds.

2. No such anomaly was found in r5fss0-0_nortos: the overhead was around 0.48 microseconds, with a fluctuation of about 0.15 microseconds (maximum: 0.56 microseconds; minimum: 0.4 microseconds). Similar fluctuations, roughly 0.3 microseconds, were observed on the other cores. When FreeRTOS is used, the fluctuation becomes more severe (up to 2 microseconds) when two tasks are switching.

3. A fast SRAM region was defined for the A-core, and a fast TCMB0 region was defined for the R-core. However, the interrupt entry overhead measured with the fast regions was no lower than with the slow regions.
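
For reference, the measurement described above can be sketched roughly as follows. This is only a minimal illustration, not the exact project code. It assumes the timer counts up from its reload value, so the ticks elapsed since the interrupt fired are the count minus the reload value. The helper names, `latency_stats_t`, and `WARMUP_SAMPLES` are hypothetical. At 25 MHz one tick is 40 ns, so every reading is quantized to 40 ns steps.

```c
#include <stdint.h>

/* Hypothetical: number of initial interrupts to exclude from the statistics,
 * so that the long first few entries are reported separately. */
#define WARMUP_SAMPLES 8u

/* Assumption: the timer counts up from `reload`, so the ticks elapsed since
 * the interrupt fired are (count - reload). On target, `count` would come
 * from TimerP_getCount() and `reload` from the configured reload value. */
static inline uint32_t isr_latency_ticks(uint32_t count, uint32_t reload)
{
    return count - reload;
}

/* Convert timer ticks to nanoseconds for a given timer clock in Hz. */
static inline uint64_t ticks_to_ns(uint32_t ticks, uint32_t clk_hz)
{
    return ((uint64_t)ticks * 1000000000ULL) / clk_hz;
}

/* Running min/max of the latency, ignoring the warm-up samples. */
typedef struct {
    uint32_t seen;      /* total samples received, including warm-up */
    uint32_t min_ticks; /* smallest latency after warm-up */
    uint32_t max_ticks; /* largest latency after warm-up */
} latency_stats_t;

static void latency_stats_init(latency_stats_t *s)
{
    s->seen = 0u;
    s->min_ticks = UINT32_MAX;
    s->max_ticks = 0u;
}

static void latency_stats_add(latency_stats_t *s, uint32_t ticks)
{
    s->seen++;
    if (s->seen <= WARMUP_SAMPLES) {
        return; /* skip the first few interrupts */
    }
    if (ticks < s->min_ticks) { s->min_ticks = ticks; }
    if (ticks > s->max_ticks) { s->max_ticks = ticks; }
}

/* Fluctuation (max - min) in ticks; 0 until a post-warm-up sample exists. */
static uint32_t latency_stats_jitter(const latency_stats_t *s)
{
    return (s->seen > WARMUP_SAMPLES) ? (s->max_ticks - s->min_ticks) : 0u;
}
```

With these helpers, a reading of 12 ticks past the reload value at 25 MHz corresponds to 480 ns, and a spread of 10 to 14 ticks corresponds to a fluctuation of 160 ns.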

Questions:

Q1: Why is the interrupt entry latency so long for the first few interrupts, and how can I resolve this?

Q2: Why do these fluctuations occur, and are they normal?

Q3: Why are the fast regions not faster?

Thank you!