Hello everyone, I am currently measuring GPIO interrupt latency with a scope by raising the XF pin in the interrupt routine.

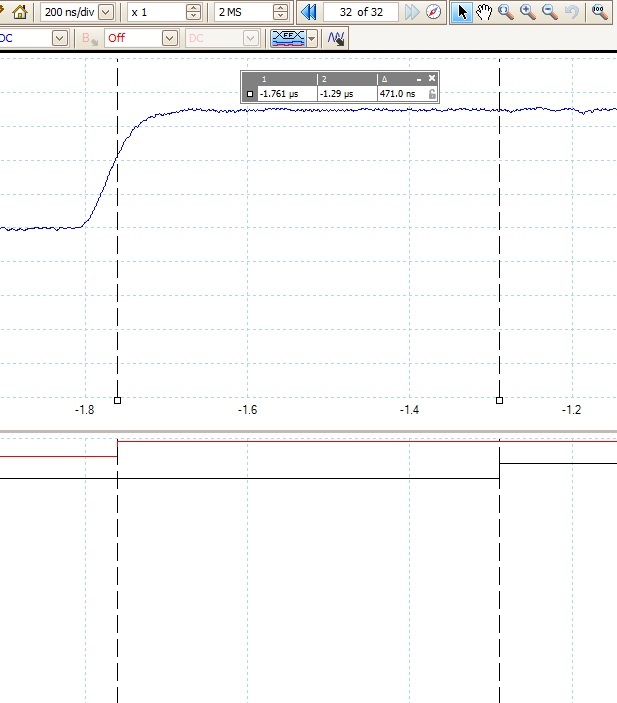

So far I have measured around 600 ns from the rising edge of the input signal on the GPIO to the first line of code inside the routine.

I am aware of the automatic context saving, but I can't think of any way to lower this latency.

As far as I know, jumping to the routine via the vectors shouldn't take more than a few cycles (around 50 ns, I guess), and saving context shouldn't take more than 20 cycles (only a few registers to save). So I was aiming for roughly 100 ns to reach the first line of my routine.
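
To compare this budget against the scope measurement in the same units, a small conversion helper can be used. This is only a rough sketch: the function names `cycles_to_ns` and `ns_to_cycles` are hypothetical, and the 200 MHz core clock mentioned in the comments is a placeholder assumption that should be replaced with the real CPU clock.

```c
#include <assert.h>
#include <stdint.h>

/* Convert a CPU cycle count to nanoseconds for a given core clock in Hz. */
double cycles_to_ns(uint32_t cycles, double cpu_hz)
{
    assert(cpu_hz > 0.0);
    return (double)cycles * 1e9 / cpu_hz;
}

/* Convert a time measured on the scope (in ns) to CPU cycles. */
double ns_to_cycles(double ns, double cpu_hz)
{
    assert(cpu_hz > 0.0);
    return ns * cpu_hz / 1e9;
}

/*
 * Example with an ASSUMED 200 MHz clock (placeholder, not my real setting):
 *   cycles_to_ns(20, 200e6)     gives 100 ns for a 20-cycle context save
 *   ns_to_cycles(600.0, 200e6)  gives 120 cycles for the measured 600 ns
 */
```

Expressing the measured 600 ns in cycles makes it easier to see how much of the delay is left unexplained once the expected vector fetch and context save are subtracted.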

Do you have any idea how I can lower this latency?

Many thanks

Silvere