Hello,

We have a design with a DM368 in an HD IP camera.

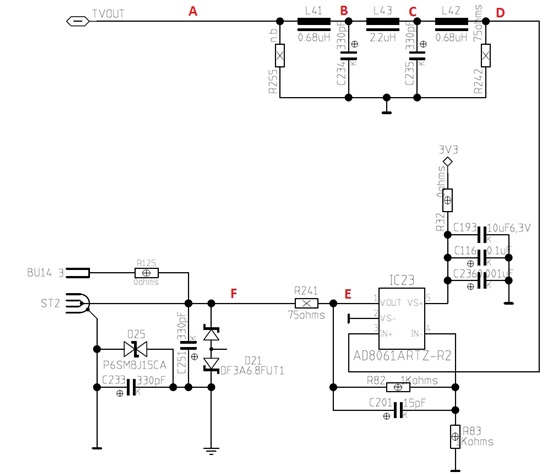

For camera setup support and compatibility with older devices, we have included a CVBS analog output.

The problem is that we must provide correct NTSC and PAL output (switchable in our software), and our customer has a background in high-end analog video technology, so his quality expectations are high.

He complained that the PAL burst amplitude is incorrect, and that as a result the colors and the phase (as seen on the vectorscope) are off as well.

When I measure the output, the signal and sync levels are correct in both PAL and NTSC, but the PAL burst is only about 240-250 mVpp.

In NTSC the burst is correct, at approximately 286 mVpp.
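For reference, the nominal composite burst amplitudes are 40 IRE (about 286 mVpp, since 1 Vpp spans 140 IRE) for NTSC and 300 mVpp for PAL. The short Python sketch below, assuming those nominal levels and the measured values quoted above, expresses each measurement as a fraction of nominal and as the gain (in dB) that would be needed to reach nominal.

```python
import math

# Nominal burst amplitudes, peak-to-peak (assumed standard levels):
# NTSC: 1 Vpp spans 140 IRE and the burst is 40 IRE.
# PAL: the burst is 300 mVpp.
NTSC_BURST_MV = 40 * 1000 / 140  # about 285.7 mV
PAL_BURST_MV = 300.0


def burst_error(measured_mv: float, nominal_mv: float) -> tuple:
    """Return (fraction of nominal, gain in dB needed to reach nominal)."""
    ratio = measured_mv / nominal_mv
    gain_db = 20 * math.log10(nominal_mv / measured_mv)
    return ratio, gain_db


# Measured values taken from the description above.
for label, measured, nominal in [
    ("NTSC", 286.0, NTSC_BURST_MV),
    ("PAL (low)", 240.0, PAL_BURST_MV),
    ("PAL (high)", 250.0, PAL_BURST_MV),
]:
    ratio, gain_db = burst_error(measured, nominal)
    print(f"{label}: {ratio:.1%} of nominal, correction {gain_db:+.2f} dB")
```

With these numbers the PAL burst comes out at roughly 80-83% of nominal, i.e. about 1.6-1.9 dB too low, while NTSC is within a fraction of a percent.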

VENC.CVBS is 0x23 in PAL and 0x00 in NTSC

VENC.VMOD is 0x43 in PAL and 0x03 in NTSC

The field that determines the burst amplitude is VENC.VMOD.TVTYP: when I change only this 2-bit field between 00 (NTSC) and 01 (PAL), the burst amplitude switches between the correct NTSC value and the incorrect PAL value.
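The two VMOD values above (0x43 for PAL, 0x03 for NTSC) differ only in bit 6. The Python sketch below assumes, based solely on those two values and not on the DM368 documentation, that TVTYP occupies VMOD bits [7:6]; it decodes the field and shows that toggling it alone turns one setting into the other. The helper names are hypothetical.

```python
# Assumption: TVTYP sits in VMOD bits [7:6], inferred only from
# 0x43 (PAL) vs 0x03 (NTSC); check against the TI register description.
TVTYP_SHIFT = 6
TVTYP_MASK = 0b11 << TVTYP_SHIFT

TVTYP_NAMES = {0b00: "NTSC", 0b01: "PAL"}


def tvtyp(vmod: int) -> int:
    """Extract the (assumed) 2-bit TVTYP field from a VMOD value."""
    return (vmod & TVTYP_MASK) >> TVTYP_SHIFT


def set_tvtyp(vmod: int, value: int) -> int:
    """Return vmod with only the TVTYP field replaced by value."""
    return (vmod & ~TVTYP_MASK) | ((value & 0b11) << TVTYP_SHIFT)


for vmod in (0x43, 0x03):
    field = tvtyp(vmod)
    print(f"VMOD=0x{vmod:02X} -> TVTYP={field:02b} ({TVTYP_NAMES[field]})")
```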

How can I get the correct burst amplitude in both PAL and NTSC?

I could not find any register that adjusts this.

Is this a bug in the DM368?

Thanks for any hint,

Erich Voko