Hi, there are a few problems that have me confused.

1. I want to add my own codec (AVS) to the MCSDK video framework, so I am following this page (http://processors.wiki.ti.com/index.php/MCSDK_VIDEO_2.1_CODEC_TEST_FW_User_Guide). There are some steps I don't know how to complete.

They are shown below:

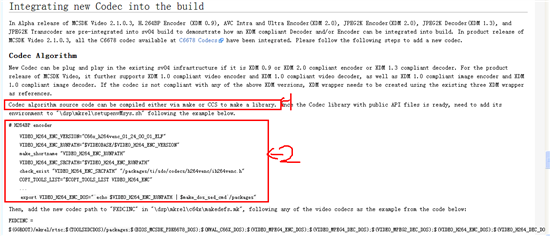

For the red "1", I don't know how to add my codec code to the existing code and get the codec library with public API files.
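
To make the question concrete, here is a minimal sketch of what I think "a codec library with public API files" means, assuming the framework only needs a header exposing a create/encode/delete style interface while the encoder itself is compiled into a library. Every name here (`AvsEncParams`, `AVSENC_create`, `AVSENC_encodeFrame`, `AVSENC_delete`) is hypothetical, not taken from the MCSDK or the TI guide, and the encoder body is only a stub:

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* ---- Public API: the part that would go in a header such as avs_enc.h ---- */

typedef struct {
    int width;  /* luma width in pixels */
    int height; /* luma height in pixels */
    int fps;    /* target frame rate */
} AvsEncParams;

/* Opaque handle: callers never see the encoder's internal state. */
typedef struct AvsEncHandle AvsEncHandle;

AvsEncHandle *AVSENC_create(const AvsEncParams *params);
int AVSENC_encodeFrame(AvsEncHandle *h, const uint8_t *yuv420, size_t inSize,
                       uint8_t *out, size_t outCap, size_t *outSize);
void AVSENC_delete(AvsEncHandle *h);

/* ---- Library side: compiled into the .lib, not shipped as source ---- */

struct AvsEncHandle {
    AvsEncParams params;
    uint32_t frameCount;
};

AvsEncHandle *AVSENC_create(const AvsEncParams *params)
{
    if (params == NULL || params->width <= 0 || params->height <= 0)
        return NULL;
    AvsEncHandle *h = malloc(sizeof *h);
    if (h != NULL) {
        h->params = *params;
        h->frameCount = 0;
    }
    return h;
}

/* Returns 0 on success, -1 on bad arguments. */
int AVSENC_encodeFrame(AvsEncHandle *h, const uint8_t *yuv420, size_t inSize,
                       uint8_t *out, size_t outCap, size_t *outSize)
{
    if (h == NULL || yuv420 == NULL || out == NULL || outSize == NULL)
        return -1;
    /* A 4:2:0 frame is width*height luma bytes plus half that again for chroma. */
    size_t expected = (size_t)h->params.width * (size_t)h->params.height * 3 / 2;
    if (inSize != expected || outCap < 4)
        return -1;
    /* Stub: the real AVS encoder core would write the bitstream here.
       This placeholder only emits the frame counter as 4 big-endian bytes. */
    out[0] = (uint8_t)(h->frameCount >> 24);
    out[1] = (uint8_t)(h->frameCount >> 16);
    out[2] = (uint8_t)(h->frameCount >> 8);
    out[3] = (uint8_t)h->frameCount;
    *outSize = 4;
    h->frameCount++;
    return 0;
}

void AVSENC_delete(AvsEncHandle *h)
{
    free(h); /* free(NULL) is a no-op */
}
```

In this sketch only the part above the "Library side" comment would be shipped as the public header; whether the MCSDK test framework expects exactly this shape is my assumption, which is part of what I am asking.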

For the red "2", I don't know what the right-hand side means.

Can you explain this to me and tell me how to use "make or CCS to make a library"?

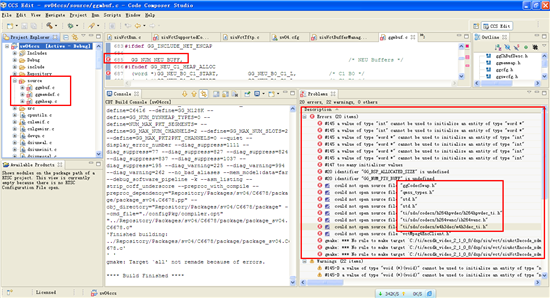

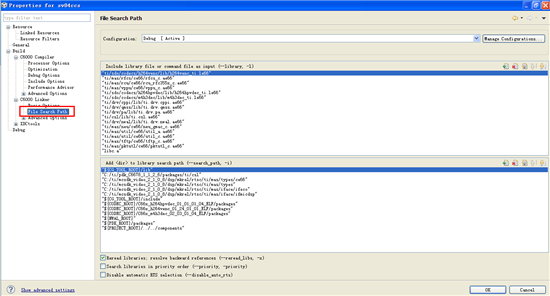

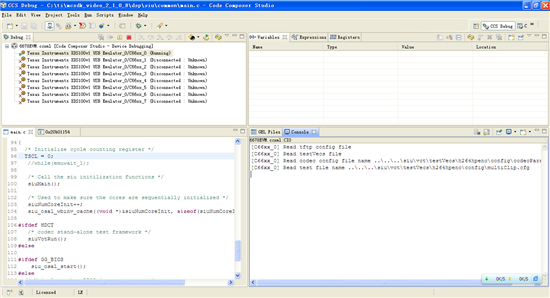

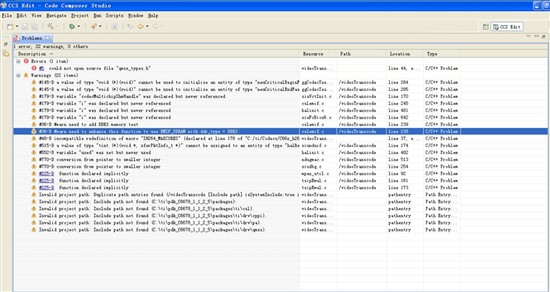

2. I also followed this thread (http://e2e.ti.com/support/dsp/c6000_multi-core_dsps/f/639/t/187260.aspx?pi70912=1). I want the EVM6678L to encode a single channel with an AVS encoder and output the bitstream via RTP/RTCP. My final goal is AVS encoding of 1080p at 25 fps, and I intend to use 8 cores for the encoder. As a first step, I want to encode CIF in real time. Now I want to build the project without using MinGW, so I downloaded 1881.sv04.zip from the thread above. But after adding the "build variable", I get compile errors like the ones below:
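
To size the goal against the CIF first step, here is a rough load estimate, assuming AVS codes luma in 16x16 macroblocks and that the work splits evenly across cores with inter-core overhead ignored. The function names are hypothetical:

```c
#include <assert.h>

/* 16x16 macroblocks needed to cover one frame; partial macroblocks at the
   right and bottom edges count as whole ones (1080 lines -> 68 MB rows). */
long mbs_per_frame(int width, int height)
{
    long mbCols = (width + 15) / 16;
    long mbRows = (height + 15) / 16;
    return mbCols * mbRows;
}

/* Macroblocks each core must encode per second if the load is split evenly.
   Ignores inter-core communication and any serial stages (e.g. RTP packing). */
long mbs_per_core_per_second(int width, int height, int fps, int cores)
{
    assert(fps > 0 && cores > 0);
    long total = mbs_per_frame(width, height) * fps;
    return (total + cores - 1) / cores; /* round up to a whole macroblock */
}
```

Under these assumptions, 1080p at 25 fps on 8 cores is 25500 macroblocks per second per core, about 2.6 times the 9900 macroblocks per second of CIF at 25 fps on one core, so real-time CIF on a single core would only be a first milestone.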

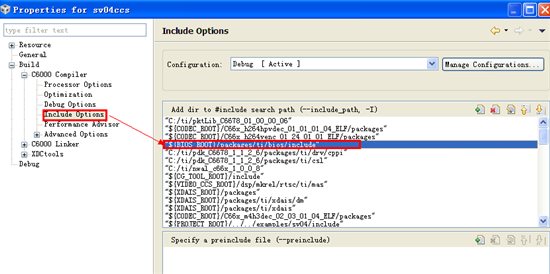

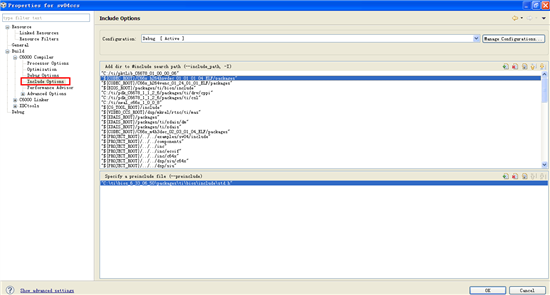

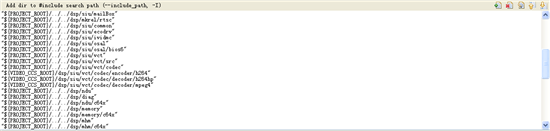

For example, I have added the path of "std.h" to the "include options", but it still gives an error, as shown:

I am using CCS 5.2, and I have modified the paths as described in the "readme.txt". I have also modified the source files, for example changing `#include "ti/csl/csl_cacheAux.h"` to `#include <C:/ti/pdk_C6678_1_1_2_6/packages/ti/csl/csl_cacheAux.h>`.

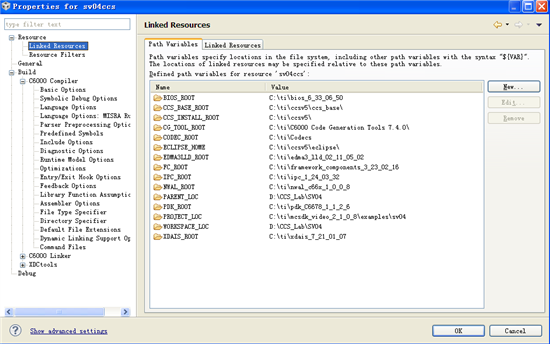

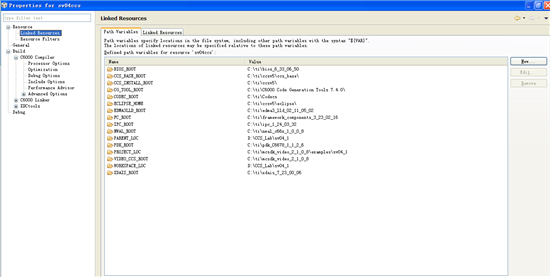

So I also added the variable under "Linked Resources".

3. If I want to recreate this project, what should I do?

Please help me.

Thank you,

Lei