In my application, a device writes to the C6678 PCIe inbound memory. Software configures this inbound memory to be located in the MSMCSRAM.

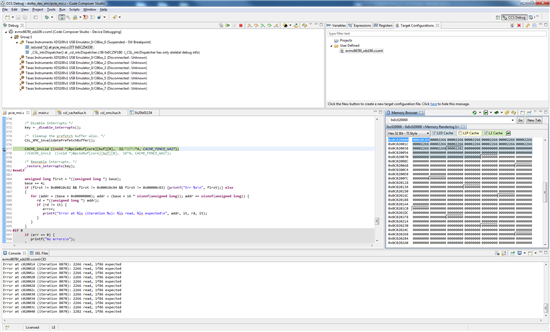

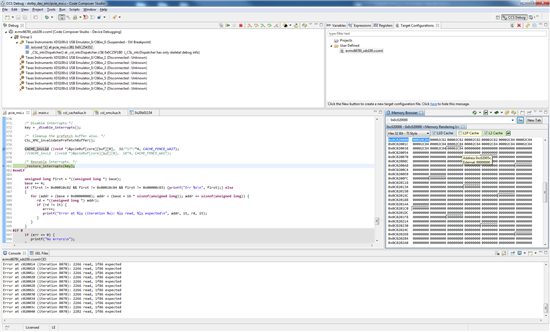

There are 2 buffers in MSMCSRAM. In my test, the device's writes alternate between the two buffers. So if the device writes a value of n to buffer 0 on Dolby frame n, it will write a value of (n+1) to buffer 1 on Dolby frame (n+1), a value of (n+2) to buffer 0 on Dolby frame (n+2), and so on. In my test app, the C6678 software reads from both buffers and reports when it finds a value of n in one of the buffers. It only finds value n when I put a printf() near the memory read. It never finds value n if the printf() is commented out.
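To make the test pattern concrete, here is a minimal host-side sketch of what I expect to happen. It is only a model, not my target code: the names `buffers`, `device_write_frame`, and `find_value` are hypothetical, the array is an ordinary variable standing in for the two MSMCSRAM buffers, and I assume even frames go to buffer 0 and odd frames to buffer 1.

```c
#include <stdint.h>

#define NUM_BUFFERS 2

/* Hypothetical stand-in for the two MSMCSRAM buffers. On the real target
 * these would live at the PCIe inbound addresses. 'volatile' makes the
 * compiler perform an actual load on every read. */
static volatile uint32_t buffers[NUM_BUFFERS];

/* Models the device: on frame n it writes the value n to one buffer,
 * alternating between buffers (assumption: even frames use buffer 0). */
void device_write_frame(uint32_t frame)
{
    buffers[frame % NUM_BUFFERS] = frame;
}

/* Models the C6678 test app: reads both buffers and returns the index of
 * the buffer that holds 'value', or -1 if neither buffer holds it. */
int find_value(uint32_t value)
{
    int i;
    for (i = 0; i < NUM_BUFFERS; i++) {
        if (buffers[i] == value) {
            return i;
        }
    }
    return -1;
}
```

In this model, calling `device_write_frame(n)` and then `find_value(n)` always finds the value, which is the behavior I expect on the target but only see there when the printf() is present.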

Also, in the CCS debugger, I have noticed that the memory browser shows increasing values each time the "Go" button is clicked, but only when the "L1D", "L1P", and "L2" boxes are NOT checked.

I strongly suspect that the cache is the problem.

How do I disable caching of the MSMCSRAM? How do I invalidate the cache before reading from MSMCSRAM?