Hello,

I am using a C6678, and my application runs on it. Recently I ran into a problem with L2 cache block coherence. Core 0 provides the data that cores 1 to 7 process, and cores 1 to 7 return their results to core 0. My data is in DDR, the code shared by cores 1 to 7 is in MSMC RAM, core 0's code is in DDR, and private data (e.g. the stack) is in LL2. When core 0 gets the results from the slave cores, it writes them into file A. I then compare file A from the C6678 with a correct result file B generated on an x86 PC.
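Because core 0 and cores 1 to 7 exchange buffers through DDR, a block coherence operation only behaves as expected if each shared buffer starts on a cache-line boundary and occupies whole lines. Otherwise a block invalidate on one buffer also discards dirty data that happens to share its first or last line. The sketch below assumes the 128-byte C66x L2 line size; the helper names (`lines_touched`, `buffer_is_line_safe`) are hypothetical, and the example addresses are only illustrative. On the target, buffers would more typically be placed with the TI compiler's `#pragma DATA_ALIGN` and padded to a multiple of the line size rather than checked at run time.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed C66x L2 cache line size in bytes (L1D lines are 64 bytes). */
#define L2_LINE_BYTES 128u

/* Range of whole cache lines touched by [addr, addr + len). */
typedef struct {
    uintptr_t first; /* start of the first line touched */
    uintptr_t end;   /* one past the end of the last line touched */
} line_span;

static uintptr_t align_down(uintptr_t x, uintptr_t a) { return x & ~(a - 1u); }
static uintptr_t align_up(uintptr_t x, uintptr_t a) { return (x + a - 1u) & ~(a - 1u); }

/* Lines that a block coherence operation on [addr, addr + len) really acts on. */
static line_span lines_touched(uintptr_t addr, size_t len)
{
    line_span s;
    assert((L2_LINE_BYTES & (L2_LINE_BYTES - 1u)) == 0u); /* must be a power of two */
    s.first = align_down(addr, L2_LINE_BYTES);
    s.end = align_up(addr + len, L2_LINE_BYTES);
    return s;
}

/* True when the buffer shares no cache line with neighbouring data, so
 * invalidating it cannot throw away another variable's dirty bytes. */
static int buffer_is_line_safe(uintptr_t addr, size_t len)
{
    return (addr % L2_LINE_BYTES) == 0u && (len % L2_LINE_BYTES) == 0u;
}
```

For example, a 100-byte buffer starting 0x40 bytes into a line touches two whole lines (256 bytes), so invalidating it would also discard any dirty data the core held in the 64 bytes before it and the 92 bytes after it.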

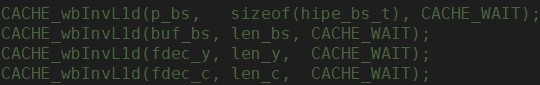

If I set the L2 cache size to 0 KB and use only L1, then after cores 1 to 7 finish processing a frame, I do the operations below:

The DSP result is the same as the PC result.

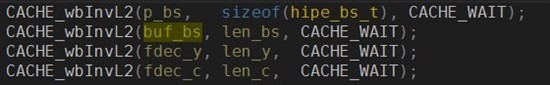

Then I set the L2 cache size to 64 KB, and after cores 1 to 7 finish processing a frame, I do the operations below:

Now the DSP result is different from the PC result. If I use the global L2 operation instead of the block operation, the result is the same as the PC result.
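One difference between block and global operations is how much a single call can cover. Assuming the C66x block coherence registers take a 16-bit word count (to be confirmed in the CorePac user guide), one block operation covers at most 65535 32-bit words, just under 256 KB, so a larger frame buffer has to be split into several operations. The sketch below does that split in whole cache lines; `cache_block_fn` is a hypothetical hook standing in for a blocking call such as CSL's `CACHE_wbL2` or `CACHE_invL2` issued with `CACHE_WAIT`, and `record_bytes` is only a host-side stub.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define L2_LINE_BYTES 128u
/* Assumed 16-bit word-count field: at most 65535 32-bit words per operation. */
#define MAX_BLOCK_WORDS 65535u
/* Largest whole number of cache lines that fits in one operation (262016 bytes). */
#define MAX_BLOCK_BYTES (((MAX_BLOCK_WORDS * 4u) / L2_LINE_BYTES) * L2_LINE_BYTES)

/* Hypothetical hook: one blocking block write-back or invalidate, e.g. a
 * wrapper around CACHE_wbL2((void *)addr, bytes, CACHE_WAIT) on the target. */
typedef void (*cache_block_fn)(uintptr_t addr, size_t bytes, void *ctx);

/* Apply op to [addr, addr + len) in chunks that never exceed the word-count
 * limit. Returns the number of block operations issued. */
static size_t cache_block_chunked(uintptr_t addr, size_t len, cache_block_fn op, void *ctx)
{
    size_t calls = 0;
    assert(op != NULL);
    while (len > 0) {
        size_t n = len < MAX_BLOCK_BYTES ? len : MAX_BLOCK_BYTES;
        op(addr, n, ctx); /* each chunk completes before the next starts */
        addr += n;
        len -= n;
        ++calls;
    }
    return calls;
}

/* Host-side stand-in for the real cache call: just totals the bytes covered. */
static void record_bytes(uintptr_t addr, size_t bytes, void *ctx)
{
    (void)addr;
    *(size_t *)ctx += bytes;
}
```

On the target, each slave core would call this with a write-back hook on its result buffer before signalling core 0, and core 0 would call it with an invalidate hook on the same buffer before reading the results. A global operation has no such size limit, which is one reason it can succeed where an unsplit block operation does not.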

I also followed the advice in the Silicon Errata and disable interrupts around the cache operation:

```c
asm(" DINT");   /* before the cache operation */
/* ... L2 block coherence operation ... */
asm(" nop 8");
asm(" nop 8");
asm(" RINT");
```

It does not seem to work either.
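One thing worth ruling out with the `DINT` ... `RINT` sequence is that interrupts get re-enabled before the block operation has actually finished, for example if the cache call returns immediately (as a CSL call with `CACHE_NOWAIT` would). The sketch below keeps the whole operation, including its completion wait, inside the interrupt-disabled window. It assumes the TI C6000 compiler intrinsics `_disable_interrupts()` and `_restore_interrupts()` from `c6x.h` on the target; host builds fall back to a simple model so the logic can be exercised. `blocking_cache_op` and `fake_block_op` are hypothetical, and whether this matches the exact workaround for a given silicon revision should be checked against the errata document itself.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#if defined(__TI_COMPILER_VERSION__)
#include <c6x.h>
/* Target: save CSR and clear GIE, later restore the saved CSR. */
static unsigned int irq_save_disable(void) { return _disable_interrupts(); }
static void irq_restore(unsigned int s) { _restore_interrupts(s); }
static void pipeline_drain(void) { asm(" nop 8"); asm(" nop 8"); }
#else
/* Host build: a plain variable models the global interrupt enable bit. */
static int irq_enabled = 1;
static unsigned int irq_save_disable(void)
{
    unsigned int old = (unsigned int)irq_enabled;
    irq_enabled = 0;
    return old;
}
static void irq_restore(unsigned int s) { irq_enabled = (int)s; }
static void pipeline_drain(void) { }
#endif

/* Hypothetical hook: starts a block write-back/invalidate and returns only
 * once it has completed (e.g. a CSL call made with CACHE_WAIT). */
typedef void (*blocking_cache_op)(void *ptr, size_t bytes);

static void cache_op_irq_safe(blocking_cache_op op, void *ptr, size_t bytes)
{
    unsigned int state;
    assert(op != NULL);
    state = irq_save_disable(); /* like DINT, but remembers the previous state */
    op(ptr, bytes);             /* includes the completion wait */
    pipeline_drain();           /* the two "nop 8" from the sequence above */
    irq_restore(state);         /* like RINT: restore rather than force-enable */
}

#if !defined(__TI_COMPILER_VERSION__)
/* Host-only stub: records whether interrupts were masked while it ran. */
static int op_saw_irq_enabled = -1;
static void fake_block_op(void *ptr, size_t bytes)
{
    (void)ptr;
    (void)bytes;
    op_saw_irq_enabled = irq_enabled;
}
#endif
```

Restoring the saved state instead of unconditionally enabling interrupts keeps the wrapper safe to call from code that already runs with interrupts disabled.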

So I am not sure where the problem is. Can anyone help me? This problem has been troubling me a lot.

Thanks

Best Regards,

Si.