Hello!

Some time ago there was a similar thread about PCIe outbound size on the C6678. Though that thread was marked as resolved, I don't see how exactly the answer helped. Now it's my turn to ask exactly the same question.

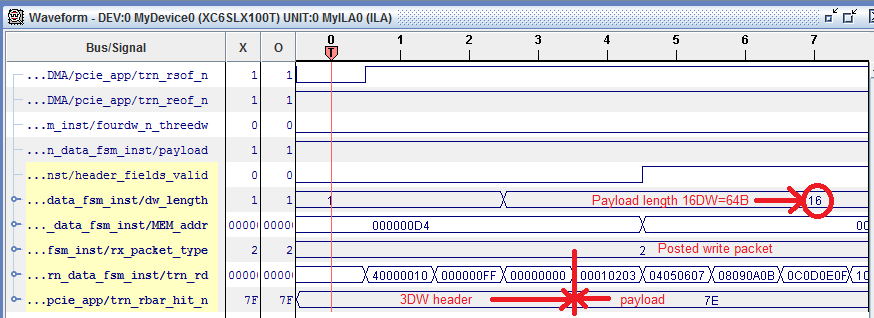

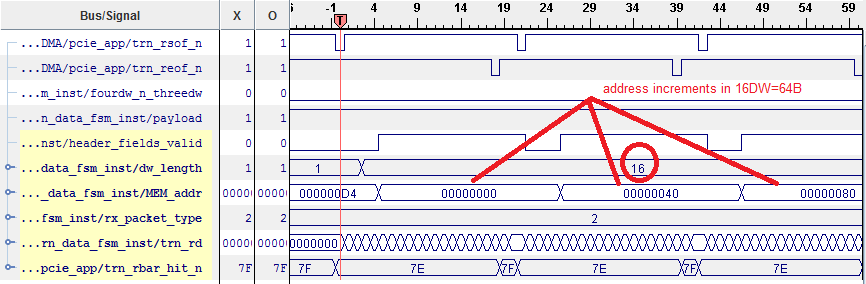

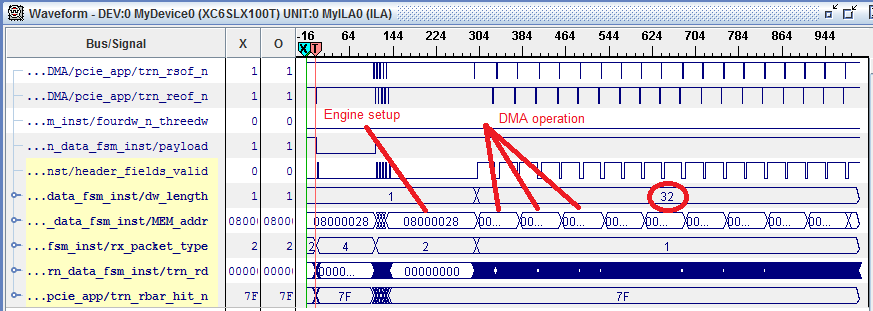

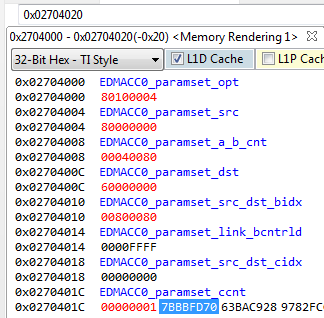

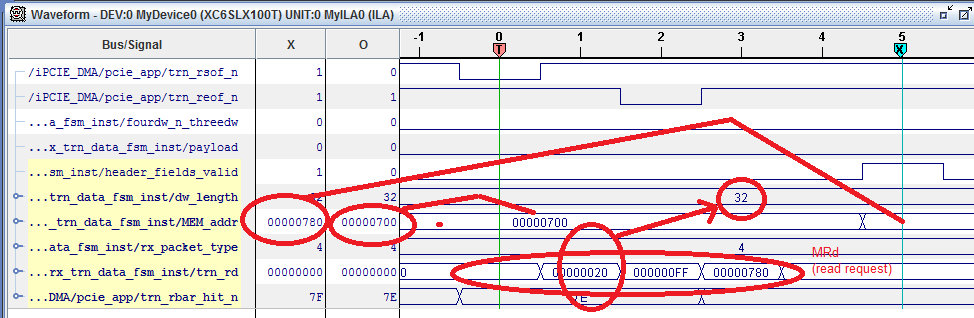

I have a C6670. I am setting up an EDMA transfer through EDMACC0, both of whose transfer controllers have a DBS of 128B. The transfer size is 2K. I am monitoring the PCIe transaction interface on the EP, which is an FPGA. I see TLPs arrive with a payload size of 16 DWORDs, that is 64B. I also see 32 transfers, thus 2048B/32 = 64B per transfer. Moreover, in the TLP headers I see the destination addresses 0x0000, 0x0040, 0x0080, and so on; they increment in multiples of 64B.
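To spell out the arithmetic behind these numbers, here is a minimal C sketch. The helper names (`tlp_count`, `dwords_to_bytes`, `tlp_dest_offset`) are made up for illustration and are not part of any TI or PCIe API; the sketch only models a transfer split into equal-sized TLPs at consecutive addresses, which is what I observe.

```c
#include <assert.h>
#include <stdint.h>

/* Payload size in bytes for a TLP length given in DWORDs (1 DWORD = 4 bytes). */
static uint32_t dwords_to_bytes(uint32_t dwords)
{
    return dwords * 4u;
}

/* Number of TLPs needed to move total_bytes when each TLP carries
 * at most payload_bytes (rounded up). */
static uint32_t tlp_count(uint32_t total_bytes, uint32_t payload_bytes)
{
    assert(payload_bytes != 0u);
    return (total_bytes + payload_bytes - 1u) / payload_bytes;
}

/* Destination offset of the n-th TLP (n starts at 0), assuming the
 * TLPs are equal-sized and target consecutive addresses. */
static uint32_t tlp_dest_offset(uint32_t n, uint32_t payload_bytes)
{
    return n * payload_bytes;
}
```

With the observed 16-DWORD payload, `tlp_count(2048, dwords_to_bytes(16))` gives the 32 TLPs I see, at offsets 0x0000, 0x0040, 0x0080. With a 128B payload the same 2K transfer would need only 16 TLPs, at offsets 0x0000, 0x0080, 0x0100.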

On the other hand, I am sure my FPGA advertises the DEV_CAP_MAX_PAYLOAD_SUPPORTED parameter as 128B.
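For reference, this is how I understand the payload-size fields, as a small C sketch. The function names are hypothetical, and I am assuming the FPGA parameter above maps to the standard Max_Payload_Size Supported field (bits 2:0 of the PCIe Device Capabilities register), with the size actually in effect held in bits 7:5 of the Device Control register. Reading the raw register values from either device is not shown. Note that this value is only an upper limit on payload size, so a 64B TLP is legal under a 128B limit.

```c
#include <assert.h>
#include <stdint.h>

/* Decode the 3-bit Max_Payload_Size encoding used by PCIe:
 * 0 -> 128B, 1 -> 256B, ... 5 -> 4096B. Encodings 6 and 7 are
 * reserved and return 0 here. */
static uint32_t mps_encoding_to_bytes(uint32_t enc)
{
    assert(enc <= 7u); /* the field is 3 bits wide */
    return (enc <= 5u) ? (128u << enc) : 0u;
}

/* Max_Payload_Size Supported: Device Capabilities register, bits 2:0. */
static uint32_t devcap_mps_supported_bytes(uint32_t dev_cap)
{
    return mps_encoding_to_bytes(dev_cap & 0x7u);
}

/* Max_Payload_Size in effect: Device Control register, bits 7:5. */
static uint32_t devctl_mps_bytes(uint32_t dev_ctl)
{
    return mps_encoding_to_bytes((dev_ctl >> 5) & 0x7u);
}

/* A TLP payload is legal if it does not exceed the limit in effect. */
static int payload_within_limit(uint32_t payload_bytes, uint32_t mps_bytes)
{
    return mps_bytes != 0u && payload_bytes <= mps_bytes;
}
```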

Thus, once again, I'd like to ask: how do I enforce 128B outbound transfers using EDMA over PCIe?

Thanks in advance.